[Technical Explanation] MR Tracking System for Live Show

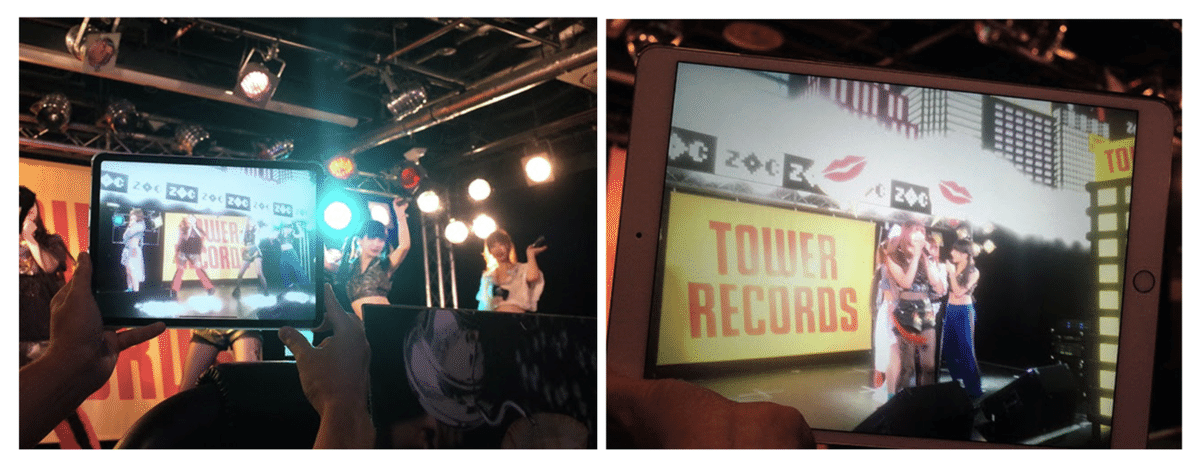

Hi, I’m Matt (@mechpil0t) at the Designium Tokyo Office. In 2019 I worked with Mao (@rainage) to prototype a system to add AR content to a live performance by the idol group ZOC.

Introduction

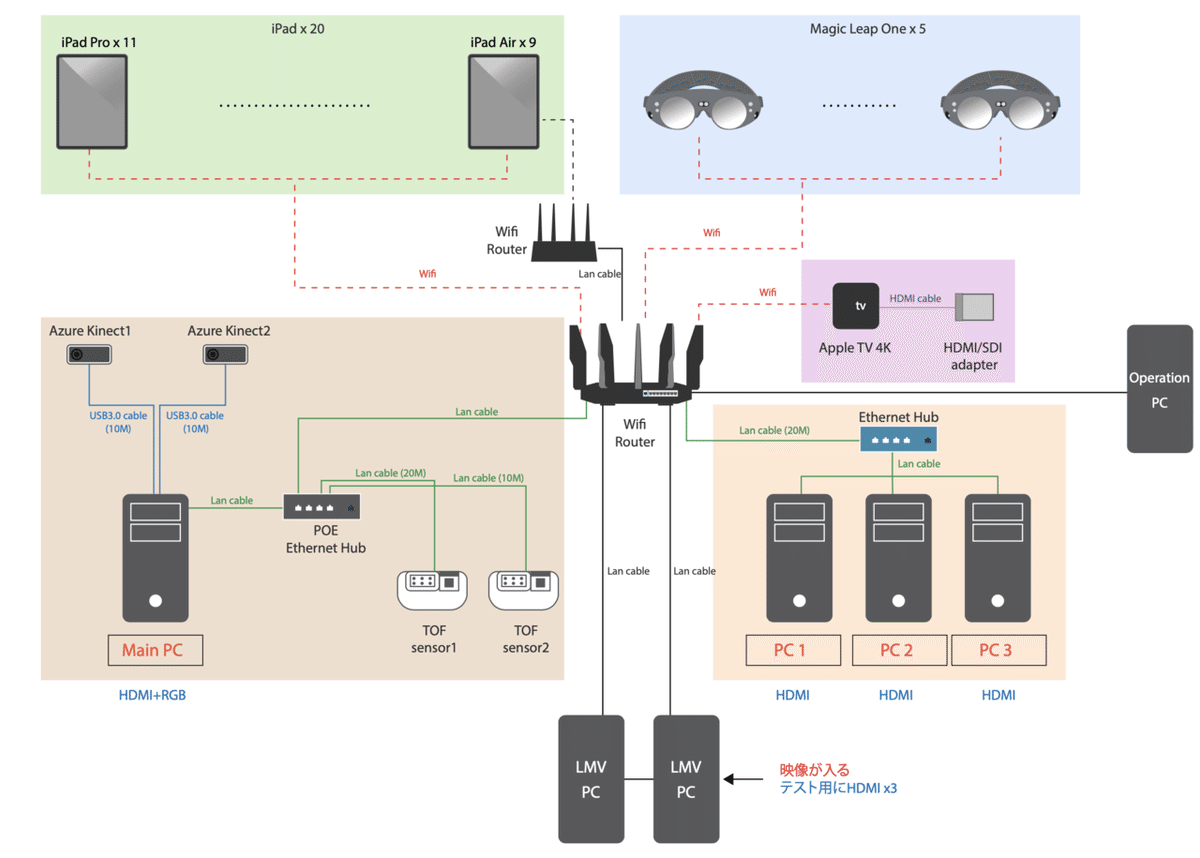

The main aim of the system was to track the positions of the performers and place/create AR content at those positions. The positions of the performers were tracked using TOF sensors (discussed by Mao (@rainage)) and passed to a server application. The server application then passed these positions on to the client devices (Magic Leaps and iPads).

<tracking server of the live show>

In this application there were three main types of AR content: static (environment), song synchronized, and positionally tracked.

(1) Static Content

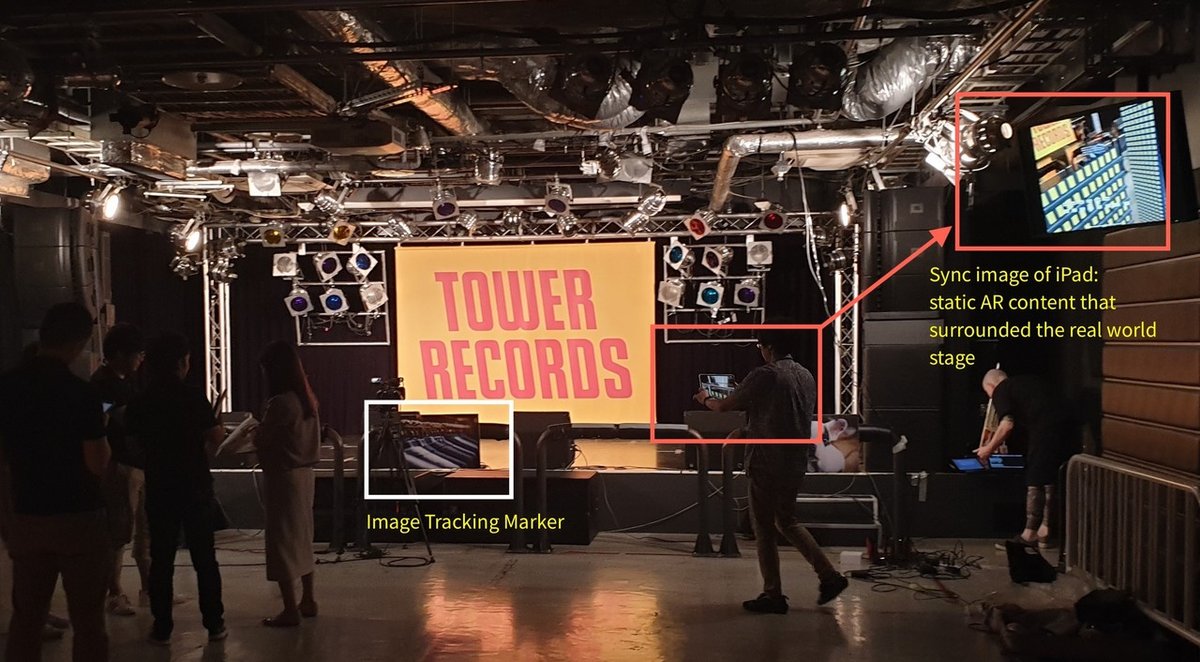

The static content was a cityscape that surrounded the stage and was placed using Image Tracking.

<static content>

The client devices used Image Tracking to place the static AR content. The center point of the static content was then used as the reference for translating the Unity local positions sent from the server into accurate real-world positions.
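The local-to-world step can be illustrated with a small sketch. This is not the project's Unity code: it is a minimal Python example that assumes the image-tracked center point gives an anchor position plus a yaw angle (rotation about the vertical axis), and it follows Unity's left-handed, Y-up convention. The names `AnchorPose` and `local_to_world` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class AnchorPose:
    """Pose of the image-tracked center point in device world space (assumed)."""
    x: float
    y: float
    z: float
    yaw_deg: float  # rotation about the vertical (Y) axis, in degrees


def local_to_world(local, anchor):
    """Convert a server-sent local position (x, y, z) into a world position.

    Uses Unity's convention: Y is up and a positive yaw of 90 degrees
    turns the forward axis (+Z) toward the right axis (+X).
    """
    lx, ly, lz = local
    yaw = math.radians(anchor.yaw_deg)
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    wx = anchor.x + lx * cos_y + lz * sin_y
    wy = anchor.y + ly
    wz = anchor.z - lx * sin_y + lz * cos_y
    return (wx, wy, wz)
```

With an anchor at (2, 0, 5) rotated 90 degrees, a performer one meter "forward" in local space ends up one meter to the right of the anchor in world space. A full implementation would also handle pitch and roll of the tracked image.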

(2) Song Synchronized

The song synchronized content was AR content that appeared (or animated) in time with the song performed by the idols. This was achieved with a timer on the server application that sent trigger commands to the client applications at set points throughout the song. The timer was started by a simple UDP trigger application operated from the audio engineer's position in the venue: when the song started, the trigger application sent a command to the server to start the song timer.

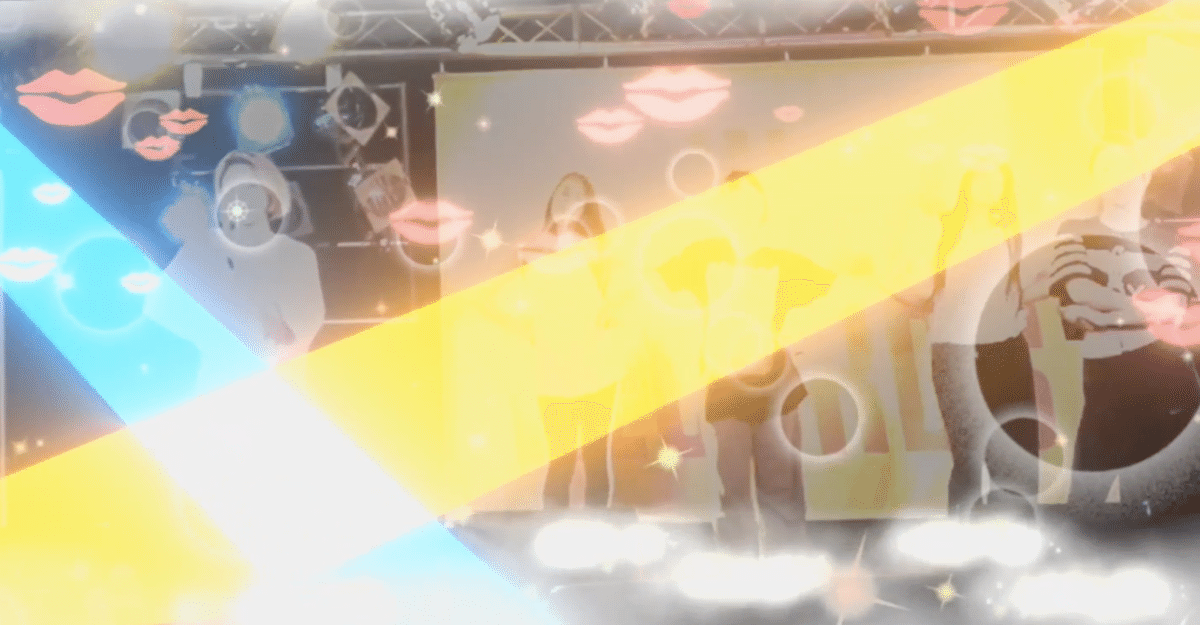

Song synchronized AR content included AR spotlights that focused on idols singing a solo section of the song, kiss marks that appeared when the idols said “chuu”, and various other imagery linked to the vocals of the song.
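The server-side song timer can be sketched as a sorted cue list that is polled against the elapsed time. This is a minimal illustration rather than the production server: the class name `SongTimeline`, the cue times, and the command names are all hypothetical, and the caller passes in the current time so the logic stays easy to test.

```python
class SongTimeline:
    """Fires trigger commands at fixed offsets after the song starts."""

    def __init__(self, cues):
        # cues: iterable of (seconds_from_song_start, command_name)
        self.cues = sorted(cues)
        self.start_time = None
        self.next_index = 0

    def start(self, now):
        """Called when the start command from the trigger app arrives."""
        self.start_time = now
        self.next_index = 0

    def poll(self, now):
        """Return every command that became due since the previous poll."""
        if self.start_time is None:
            return []  # song has not started yet
        elapsed = now - self.start_time
        fired = []
        while (self.next_index < len(self.cues)
               and self.cues[self.next_index][0] <= elapsed):
            fired.append(self.cues[self.next_index][1])
            self.next_index += 1
        return fired
```

In a real server loop, `now` would come from something like `time.monotonic()`, and each returned command would be forwarded to the client devices.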

(3) Positionally Tracked

Positionally tracked content was content that appeared at the positions of the performers. This was achieved by transforming the TOF positions into local Unity positions on the server, and then passing these positions on to the client devices using UDP over WiFi. This type of AR content included the clouds at the feet of the idols, as shown in the image above.
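As a rough illustration of sending positions over UDP, the sketch below packs performer positions into a compact binary datagram. The wire format here is an assumption made for the example (a little-endian count followed by an ID and three 32-bit floats per performer), not the format used in the project, and the function names are hypothetical.

```python
import socket
import struct

HEADER = struct.Struct("<H")     # number of performers in the datagram
RECORD = struct.Struct("<Hfff")  # performer id, then x, y, z as float32


def encode_positions(positions):
    """Pack {performer_id: (x, y, z)} into one datagram payload."""
    parts = [HEADER.pack(len(positions))]
    for performer_id, (x, y, z) in sorted(positions.items()):
        parts.append(RECORD.pack(performer_id, x, y, z))
    return b"".join(parts)


def decode_positions(payload):
    """Unpack a payload produced by encode_positions."""
    (count,) = HEADER.unpack_from(payload, 0)
    offset = HEADER.size
    positions = {}
    for _ in range(count):
        performer_id, x, y, z = RECORD.unpack_from(payload, offset)
        positions[performer_id] = (x, y, z)
        offset += RECORD.size
    return positions


def send_positions(sock, positions, client_addresses):
    """Send the same payload to every client (host, port) address."""
    payload = encode_positions(positions)
    for address in client_addresses:
        sock.sendto(payload, address)
```

A server would create the socket once with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_positions` every frame. Because UDP does not guarantee delivery, each datagram carries the full current state, so a lost packet is simply replaced by the next one.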

In past projects, we used two Hitachi-LG TOF sensors to track up to 30 people (Bubble World 2019). In this case, however, we faced three big challenges: moving speed, pose detection, and stage height.

[moving speed]

In past projects, we used Hitachi-LG's “People_Tracking” app to track people's positions. It is easy to set up and stable, but its tracked positions lag by around 0.5 to 1 second. This project required tracking movement very accurately, and because the idols move very quickly while dancing, that delay would have made the MR experience very bad. Therefore we built our own tracking system using the Hitachi-LG SDK. With this, we could track positions almost in real time on the AR devices.

<AR+TOF tracking test>

[pose detection]

During the song, there were some sequences in which the idols performed actions to play interactive games with audience members using AR devices. This required us to use Azure Kinect to track the idols' skeletons. We built a new tracking system that used the TOF position information and bound the Azure Kinect skeleton data to the TOF positions. We then sent the user data (position, skeleton joint positions and rotations, action state) to each client AR device.
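The per-user data described above could be modeled roughly as follows. This is only a sketch of one possible message layout: the field names, the joint name, the action-state string, and the choice of JSON are assumptions for illustration, not the project's actual protocol.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Joint:
    position: tuple  # (x, y, z)
    rotation: tuple  # quaternion (x, y, z, w)


@dataclass
class UserData:
    user_id: int
    position: tuple    # tracked position (x, y, z) from the TOF sensors
    action_state: str  # hypothetical label, e.g. "idle" or "hand_raised"
    joints: dict = field(default_factory=dict)  # joint name -> Joint


def to_message(user):
    """Serialize one user's data to UTF-8 JSON bytes for sending to clients."""
    return json.dumps(asdict(user)).encode("utf-8")
```

JSON keeps the example readable. A compact binary layout would reduce packet size if many joints are sent every frame.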

<multi user tracking & gesture detection with TOF & Azure Kinect>

[stage height]

The stage height was a big challenge for our system. Due to the limitations on where we could place the sensors, there were some dead zones within the TOF sensor's FOV. To work around this, we improved our skeleton tracking function. The main tracking position was still acquired from the TOF position data. We then checked whether there was a skeleton near this position and, if so, bound the skeleton to the tracked position. If there was a skeleton with no TOF position close by, the skeleton position was used as the new tracking position. With this new tracking system, we solved the stage limitation and could set the sensors up more flexibly.
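The fallback logic can be sketched as a simple nearest-neighbor match between the two sources. This is an illustrative reconstruction under stated assumptions, not the actual tracker: positions are 2D floor coordinates, matching is greedy, and the 0.5 m binding distance and the name `fuse_tracks` are hypothetical.

```python
import math


def fuse_tracks(tof_positions, skeleton_positions, max_distance=0.5):
    """Combine TOF positions with skeleton positions.

    Each TOF position stays the main tracking position and is bound to the
    nearest unused skeleton within max_distance, if there is one. Skeletons
    left unbound (for example, inside a TOF dead zone) become tracking
    positions themselves.
    """
    tracks = []
    used = set()
    for tof in tof_positions:
        best_index, best_distance = None, max_distance
        for index, skeleton in enumerate(skeleton_positions):
            if index in used:
                continue
            distance = math.dist(tof, skeleton)
            if distance <= best_distance:
                best_index, best_distance = index, distance
        if best_index is not None:
            used.add(best_index)
        tracks.append({"position": tof, "skeleton": best_index, "source": "tof"})
    for index, skeleton in enumerate(skeleton_positions):
        if index not in used:
            tracks.append(
                {"position": skeleton, "skeleton": index, "source": "skeleton"}
            )
    return tracks
```

For example, with TOF positions at (0, 0) and (2, 0) and skeletons at (0.1, 0) and (5, 1), the first skeleton is bound to the first TOF position, the second TOF position has no skeleton, and the second skeleton becomes a track of its own.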

The left side of this video shows our TOF tracking app. For this test, we marked a red rectangular area as a dead zone (an area with no TOF position data). Our skeleton tracking system took over position tracking whenever an idol entered this area.

Postscript

After this project, we began to use this sensing technology and AR concept in various other prototypes.

<control DMX moving light to follow user automatically>

<integrate NReal & DJI Livox Lidar>

The Designium.inc

・Interactive website

・Twitter

・Facebook

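Editorial Note

This is Mariko from PR! I love going to live shows, especially ones with flashy or elaborate stage production, so I ended up reading this from a fan's point of view, thinking "Being able to join in while enjoying AR content sounds so fun ✨"! If I were in the front row, I might have a hard time deciding which one to watch, haha.

Since this was a ZOC stage, I also got excited that it featured members I have seen on TV, such as Kanano Senritsu (戦慄かなの)! (Sorry for being such a starstruck fan.) It is great for fans that the latest technology keeps adding new ways to enjoy live shows 😊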
