Trying out llm.c on WSL2

I'm going to try llm.c, which is billed as "LLM training in simple, pure C/CUDA. There is no need for 245MB of PyTorch or 107MB of cPython."

The PC used here is Dospara's GALLERIA UL9C-R49, with the following specs:

・CPU: Intel® Core™ i9-13900HX Processor

・Mem: 64 GB

・GPU: NVIDIA® GeForce RTX™ 4090 Laptop GPU (16GB)

・OS: Ubuntu 22.04 on WSL2 (Windows 11)

1. Preparation

python3 -m venv llmc

cd $_

source bin/activate

Clone the repository.

git clone https://github.com/karpathy/llm.c

cd llm.c

Install the packages.

pip install -r requirements.txt

Downloading the dataset and so on

A script is provided for this. Everything is taken care of for you.

python prepro_tinyshakespeare.py

Check the result.

$ ls -al data/

total 2424

drwxrwxr-x 2 user user 4096 Apr 14 20:34 .

drwxrwxr-x 6 user user 4096 Apr 14 20:34 ..

-rw-rw-r-- 1 user user 1115394 Apr 14 20:34 tiny_shakespeare.txt

-rw-rw-r-- 1 user user 1221040 Apr 14 20:34 tiny_shakespeare_train.bin

-rw-rw-r-- 1 user user 131072 Apr 14 20:34 tiny_shakespeare_val.bin
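The file sizes above can be turned into token and batch counts with a little arithmetic. The sketch below assumes each token is stored as a 4-byte integer with no file header (an assumption, not something the listing states); the function names are made up for illustration. Under that assumption the results line up with the num_batches values printed in the training logs further down (1192 / 128 for the CPU run, 74 / 8 for the CUDA run). The batch shape B=4, T=64 for the CPU run is also an assumption.

```python
# Assumption: tokens are stored as 4-byte integers, with no header in the file.
BYTES_PER_TOKEN = 4


def num_tokens(file_size_bytes: int) -> int:
    """Number of tokens in a .bin file of the given size."""
    return file_size_bytes // BYTES_PER_TOKEN


def num_batches(file_size_bytes: int, batch_size: int, seq_len: int) -> int:
    """Whole batches of batch_size * seq_len tokens that fit in the file."""
    return num_tokens(file_size_bytes) // (batch_size * seq_len)


train_size = 1221040  # tiny_shakespeare_train.bin, from the ls output
val_size = 131072     # tiny_shakespeare_val.bin, from the ls output

print("tokens:", num_tokens(train_size), num_tokens(val_size))
# B=4, T=64 (assumed shape of the CPU run)
print("B=4, T=64:", num_batches(train_size, 4, 64), num_batches(val_size, 4, 64))
# B=4, T=1024 (the shape printed by the CUDA run)
print("B=4, T=1024:", num_batches(train_size, 4, 1024), num_batches(val_size, 4, 1024))
```

If the numbers had not matched the logs, the 4-bytes-per-token assumption would be the first thing to revisit.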

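2. Trying it out

Let's try it by following the README.

(1) Save a checkpoint that can be loaded from C

We initialize with the GPT-2 weights released by OpenAI and then fine-tune. First, download the GPT-2 weights and save them as a checkpoint that can be loaded from C.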

$ python train_gpt2.py

using device: cuda

loading weights from pretrained gpt: gpt2

loading cached tokens in data/tiny_shakespeare_val.bin

wrote gpt2_124M.bin

wrote gpt2_124M_debug_state.bin

iteration 0, loss: 5.270007610321045, time: 997.153ms

iteration 1, loss: 4.059669017791748, time: 38.788ms

iteration 2, loss: 3.375048875808716, time: 34.864ms

iteration 3, loss: 2.8006813526153564, time: 34.975ms

iteration 4, loss: 2.3152999877929688, time: 34.938ms

iteration 5, loss: 1.8489323854446411, time: 35.194ms

iteration 6, loss: 1.3945465087890625, time: 34.934ms

iteration 7, loss: 0.9990097880363464, time: 35.637ms

iteration 8, loss: 0.6240332722663879, time: 38.020ms

iteration 9, loss: 0.3764905035495758, time: 34.568ms

final 20 iters avg: 131.907ms

<|endoftext|>One year ago today:

This is the first week since we last spoke.

---------------

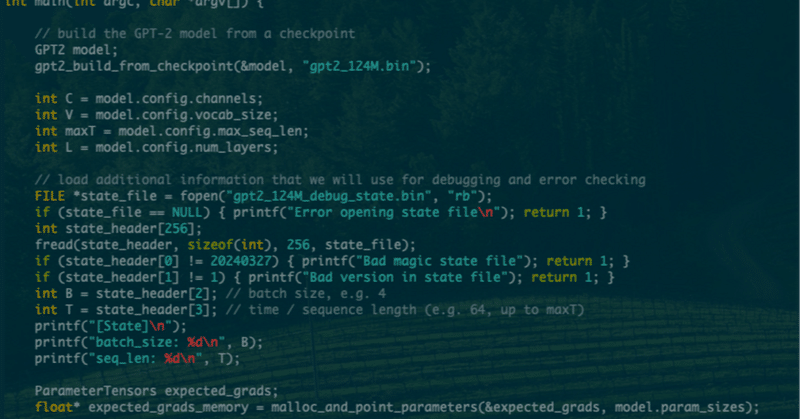

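This script downloads the GPT-2 (124M) model, runs 10 iterations, and saves three files:

・gpt2_124M.bin: the raw model weights, for loading from C

・gpt2_124M_debug_state.bin: additional debug state (including the inputs, targets, logits, and loss)

・gpt2_tokenizer.bin: the GPT-2 tokenizer vocabulary, which maps token IDs to byte sequences of UTF-8 encoded string pieces

Note that the log above only shows the first two being written. The CUDA run in (4) warns that gpt2_tokenizer.bin is missing and says the tokenizer export was added on April 14 2024.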
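To get a feel for how much the raw-weights file holds, the parameter count that the C program prints further down (num_parameters: 124439808) can be reproduced from the model shape it logs (vocab_size 50257, max_seq_len 1024, num_layers 12, channels 768). This is a minimal sketch that assumes the standard GPT-2 layout, with a 3x-wide QKV projection and a 4x-wide MLP; the function name is made up for illustration.

```python
def gpt2_param_count(vocab_size: int, max_seq_len: int,
                     num_layers: int, channels: int) -> int:
    """Count parameters for a GPT-2 style model (standard layout assumed)."""
    C = channels
    embeddings = vocab_size * C + max_seq_len * C  # wte + wpe
    per_layer = (
        2 * C                  # ln1 weight + bias
        + C * 3 * C + 3 * C    # qkv weight + bias
        + C * C + C            # attention projection weight + bias
        + 2 * C                # ln2 weight + bias
        + C * 4 * C + 4 * C    # fc weight + bias
        + 4 * C * C + C        # fc projection weight + bias
    )
    final_ln = 2 * C           # lnf weight + bias
    return embeddings + num_layers * per_layer + final_ln


n = gpt2_param_count(vocab_size=50257, max_seq_len=1024, num_layers=12, channels=768)
print(n)  # 124439808, the same value the C program logs
# Assuming 4 bytes per parameter (float32), the weights alone take about:
print(f"{n * 4 / 1e6:.1f} MB")
```

The tensor names in the comments (wte, wpe, ln1, qkv, and so on) follow the gradient names that appear in the unit test output in (3).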

(2) Compile the code

make train_gpt2

Once it compiles, run it. It loads the model weights and tokens, runs a few iterations of fine-tuning, and then generates a sample from the model.

$ OMP_NUM_THREADS=8 ./train_gpt2

[GPT-2]

max_seq_len: 1024

vocab_size: 50257

num_layers: 12

num_heads: 12

channels: 768

num_parameters: 124439808

train dataset num_batches: 1192

val dataset num_batches: 128

num_activations: 73323776

val loss 5.251911

step 0: train loss 5.356082 (took 5581.591249 ms)

step 1: train loss 4.300639 (took 5189.572946 ms)

step 2: train loss 4.623087 (took 5182.011041 ms)

step 3: train loss 4.599362 (took 5128.439433 ms)

step 4: train loss 4.616664 (took 5276.953107 ms)

step 5: train loss 4.231427 (took 5726.929921 ms)

step 6: train loss 3.753161 (took 5611.210707 ms)

step 7: train loss 3.650458 (took 5642.644096 ms)

step 8: train loss 4.182242 (took 5650.409601 ms)

step 9: train loss 4.199580 (took 5718.385683 ms)

val loss 4.426364

step 10: train loss 4.288661 (took 5646.155319 ms)

step 11: train loss 3.560642 (took 5626.001309 ms)

step 12: train loss 3.731437 (took 5607.757342 ms)

step 13: train loss 4.158511 (took 5598.457980 ms)

step 14: train loss 3.885633 (took 5545.257154 ms)

step 15: train loss 3.766486 (took 5628.031204 ms)

step 16: train loss 4.144007 (took 5537.410087 ms)

step 17: train loss 3.961167 (took 5419.470429 ms)

step 18: train loss 3.796044 (took 5448.091582 ms)

step 19: train loss 3.371042 (took 5784.110114 ms)

val loss 4.250554

generated: 50256 40 373 523 24776 351 534 1986 25 284 1282 290 996 484 561 407 466 340 597 517 621 355 198 5756 514 26 475 508 1683 460 1210 198 39276 257 995 523 1336 11 198 2504 612 1183 423 587 4844 286 674 1907 3653 475 198 1135 8770 12 50199 290 24447 1450 11 290 11240 606 15950 26

step 20: train loss 3.882789 (took 6262.029275 ms)

Decode the generated output (the generated: line) back into text.

>>> import tiktoken

>>> enc = tiktoken.get_encoding("gpt2")

>>> ptok = lambda x: print(enc.decode(list(map(int, x.strip().split()))))

>>> ptok("50256 40 373 523 24776 351 534 1986 25 284 1282 290 996 484 561 407 466 340 597 517 621 355 198 5756 514 26 475 508 1683 460 1210 198 39276 257 995 523 1336 11 198 2504 612 1183 423 587 4844 286 674 1907 3653 475 198 1135 8770 12 50199 290 24447 1450 11 290 11240 606 15950 26")

<|endoftext|>I was so frightened with your face: to come and though they would not do it any more than as

Let us; but who ever can turn

Against a world so full,

That there'll have been none of our fightmen but

Weaver-bats and tearing men, and stir them utterly;

Ooh, something came out. It is proper text.
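Pasting the IDs by hand works, but when the output has been saved to a log file it can be handy to pull them out of the line programmatically. The helper below is a small sketch (parse_generated is a hypothetical name, not part of llm.c); it accepts a line with or without the generated: prefix and returns a list of ints.

```python
def parse_generated(line: str) -> list[int]:
    """Return the token IDs from a 'generated: ...' log line.

    Also accepts a bare line of space-separated IDs.
    """
    prefix = "generated:"
    text = line.strip()
    if text.startswith(prefix):
        text = text[len(prefix):]
    return [int(tok) for tok in text.split()]


ids = parse_generated("generated: 50256 40 373 523")
print(ids)  # [50256, 40, 373, 523]
```

The resulting list can be passed straight to enc.decode(ids), using the same enc object created in the REPL session above.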

(3) C vs PyTorch

A simple unit test is included to confirm that the C code agrees with the PyTorch code.

$ make test_gpt2

$ ./test_gpt2

[GPT-2]

max_seq_len: 1024

vocab_size: 50257

num_layers: 12

num_heads: 12

channels: 768

num_parameters: 124439808

[State]

batch_size: 4

seq_len: 64

num_activations: 73323776

-43.431606 -43.431751

-39.836342 -39.836464

-43.065918 -43.066036

OK (LOGITS)

LOSS OK: 5.269889 5.270008

dwte

OK -0.002320 -0.002320

OK 0.002072 0.002072

OK 0.003716 0.003717

OK 0.001307 0.001307

OK 0.000631 0.000632

TENSOR OK

dwpe

OK -0.005118 -0.005111

OK -0.000001 -0.000011

OK -0.003267 -0.003262

OK 0.009909 0.009912

OK 0.002155 0.002145

TENSOR OK

dln1w

OK -0.007520 -0.007524

OK 0.008624 0.008641

OK 0.005004 0.005028

OK -0.011098 -0.011095

OK -0.001667 -0.001665

TENSOR OK

dln1b

OK -0.038494 -0.038466

OK -0.030547 -0.030602

OK 0.010189 0.010223

OK 0.080135 0.080185

OK -0.060991 -0.060912

TENSOR OK

dqkvw

OK -0.000031 -0.000031

OK -0.000026 -0.000025

OK -0.000064 -0.000064

OK 0.000074 0.000074

OK 0.000020 0.000020

TENSOR OK

dqkvb

OK -0.000414 -0.000411

OK -0.000410 -0.000412

OK 0.000113 0.000113

OK -0.000564 -0.000565

OK 0.000574 0.000571

TENSOR OK

dattprojw

OK 0.000081 0.000080

OK -0.000005 -0.000005

OK -0.000019 -0.000019

OK 0.000005 0.000004

OK 0.000031 0.000031

TENSOR OK

dattprojb

OK 0.000456 0.000469

OK -0.009969 -0.009977

OK -0.001794 -0.001802

OK 0.037638 0.037595

OK -0.031288 -0.031243

TENSOR OK

dln2w

OK -0.018372 -0.018313

OK 0.004812 0.004814

OK 0.008084 0.008092

OK -0.001465 -0.001470

OK -0.002740 -0.002737

TENSOR NOT OK

dln2b

OK -0.026405 -0.026365

OK -0.016711 -0.016694

OK 0.001067 0.001081

OK 0.034754 0.034720

OK -0.028630 -0.028586

TENSOR OK

dfcw

OK 0.000438 0.000440

OK -0.000000 -0.000000

OK -0.000153 -0.000154

OK -0.000165 -0.000165

OK 0.000404 0.000405

TENSOR OK

dfcb

OK 0.003282 0.003291

OK 0.002038 0.002043

OK -0.001386 -0.001386

OK 0.000381 0.000386

OK 0.001602 0.001604

TENSOR OK

dfcprojw

OK 0.000678 0.000680

OK 0.000073 0.000073

OK -0.000415 -0.000416

OK -0.000059 -0.000061

OK -0.000603 -0.000603

TENSOR OK

dfcprojb

OK 0.003572 0.003582

OK -0.007148 -0.007157

OK -0.001955 -0.001963

OK 0.001466 0.001462

OK 0.001219 0.001215

TENSOR OK

dlnfw

OK -0.000022 -0.000022

OK 0.000811 0.000811

OK 0.001161 0.001161

OK -0.002956 -0.002957

OK 0.001146 0.001145

TENSOR OK

dlnfb

OK -0.011101 -0.011101

OK 0.008007 0.008007

OK -0.004763 -0.004768

OK -0.002110 -0.002113

OK -0.005903 -0.005905

TENSOR OK

step 0: loss 5.269889 (took 2562.609800 ms)

step 1: loss 4.059388 (took 2359.643976 ms)

step 2: loss 3.374211 (took 2259.207183 ms)

step 3: loss 2.800126 (took 2212.092138 ms)

step 4: loss 2.315311 (took 2260.666996 ms)

step 5: loss 1.849347 (took 2211.245700 ms)

step 6: loss 1.395217 (took 2182.584733 ms)

step 7: loss 0.998615 (took 2225.094295 ms)

step 8: loss 0.625540 (took 2193.447909 ms)

step 9: loss 0.378012 (took 2212.115856 ms)

loss ok at step 0: 5.269889 5.270007

loss ok at step 1: 4.059388 4.059707

loss ok at step 2: 3.374211 3.375123

loss ok at step 3: 2.800126 2.800783

loss ok at step 4: 2.315311 2.315382

loss ok at step 5: 1.849347 1.849029

loss ok at step 6: 1.395217 1.394656

loss ok at step 7: 0.998615 0.999147

loss ok at step 8: 0.625540 0.624080

loss ok at step 9: 0.378012 0.376511

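overall okay: 0

TENSOR OK shows up again and again, so it looks mostly fine. That said, dln2w reports TENSOR NOT OK and the last line reads overall okay: 0, so strictly speaking the test did not pass cleanly in this run.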
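One plausible reading of the dln2w block, where five OK lines are followed by TENSOR NOT OK, is that only the first few elements are printed while every element is checked. The sketch below illustrates that kind of comparison in Python. It is not the code from the repository; the tolerance of 1e-2 and the number of printed rows are assumptions chosen for illustration.

```python
def check_tensor(actual, expected, label, tol=1e-2, show=5):
    """Compare two equal-length sequences element by element.

    Prints only the first `show` pairs, but the verdict covers all of them.
    """
    print(label)
    all_ok = True
    for i, (a, b) in enumerate(zip(actual, expected)):
        ok = abs(a - b) <= tol
        if i < show:
            print("OK" if ok else "NOT OK", f"{a:f} {b:f}")
        all_ok = all_ok and ok
    print("TENSOR OK" if all_ok else "TENSOR NOT OK")
    return all_ok


# The first five elements agree, but the sixth (never printed) is far off.
result = check_tensor([0.0] * 5 + [0.5], [0.0] * 6, "demo")
```

With this input the five printed rows all say OK, yet the final verdict is TENSOR NOT OK, which is the same pattern as the dln2w block above.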

(4) Try it with CUDA

test_gpt2cu is the CUDA version, so build it with make.

$ make test_gpt2cu

$ ./test_gpt2cu

But alas, a CUDA error (as of 2024/4/14).

[CUDA ERROR] at file train_gpt2.cu:1238:

an illegal memory access was encountered

As of now, doing a git pull to get the latest code makes it run without problems.

$ CUDA_VISIBLE_DEVICES=0 ./train_gpt2cu

[System]

Device 0: NVIDIA GeForce RTX 4090

enable_tf32: 1

[GPT-2]

max_seq_len: 1024

vocab_size: 50257

num_layers: 12

num_heads: 12

channels: 768

num_parameters: 124439808

train dataset num_batches: 74

val dataset num_batches: 8

batch size: 4

sequence length: 1024

val_num_batches: 10

---

WARNING: Failed to open the tokenizer file gpt2_tokenizer.bin

The Tokenizer is a new feature added April 14 2024.

Re-run `python train_gpt2.py` to write it

---

num_activations: 2456637440

val loss 4.517179

step 0: train loss 4.367631 (took 30.767757 ms)

step 1: train loss 4.406341 (took 30.877528 ms)

step 2: train loss 4.484756 (took 30.782113 ms)

step 3: train loss 4.345182 (took 30.602100 ms)

step 4: train loss 4.043440 (took 30.744220 ms)

step 5: train loss 4.229531 (took 30.774699 ms)

step 6: train loss 4.175078 (took 30.784364 ms)

step 7: train loss 4.207684 (took 30.744821 ms)

step 8: train loss 4.127494 (took 30.860593 ms)

step 9: train loss 4.220500 (took 30.790760 ms)

val loss 4.517179

step 10: train loss 4.345251 (took 30.998719 ms)

step 11: train loss 4.245913 (took 30.892223 ms)

step 12: train loss 4.160710 (took 30.844507 ms)

step 13: train loss 3.989707 (took 30.925669 ms)

step 14: train loss 4.305970 (took 30.803195 ms)

step 15: train loss 4.340496 (took 30.965996 ms)

step 16: train loss 4.304414 (took 30.889718 ms)

step 17: train loss 4.424054 (took 30.937060 ms)

step 18: train loss 4.314544 (took 30.924211 ms)

step 19: train loss 4.287184 (took 30.944278 ms)

val loss 4.517179

generating:

---

51 615 396 735 5451 198 198 1890 616 4165 3640 286 16713 1435 11 314 423 9713 262 27876 286 257 6428 620 578 6164 290 6496 262 7022 329 28273 66 2118 5043 13 198 198 2953 616 12641 4854 314 6693 262 15013 4203 314 1998 351 281 24822 1029 44268 3265 960 1795 4 286 2153 2611 43939 423

---

step 20: train loss 4.296932 (took 30.765803 ms)

step 21: train loss 4.238368 (took 30.924571 ms)

step 22: train loss 4.235001 (took 31.028362 ms)

step 23: train loss 4.037789 (took 30.785344 ms)

step 24: train loss 4.309966 (took 31.156228 ms)

step 25: train loss 4.451100 (took 31.077471 ms)

step 26: train loss 4.406357 (took 31.181847 ms)

step 27: train loss 4.334877 (took 30.910989 ms)

step 28: train loss 4.368477 (took 30.839896 ms)

step 29: train loss 4.287300 (took 30.965366 ms)

val loss 4.517179

step 30: train loss 4.155085 (took 30.867384 ms)

step 31: train loss 4.116601 (took 30.879440 ms)

step 32: train loss 4.182609 (took 30.825194 ms)

step 33: train loss 4.389328 (took 31.021108 ms)

step 34: train loss 4.254278 (took 30.874582 ms)

step 35: train loss 4.252576 (took 30.915915 ms)

step 36: train loss 4.323340 (took 30.867000 ms)

step 37: train loss 4.266943 (took 30.801092 ms)

step 38: train loss 4.267965 (took 30.945505 ms)

step 39: train loss 4.062835 (took 30.831848 ms)

val loss 4.517179

generating:

---

18932 5689 46861 11 257 10033 286 36486 1605 10540 11 644 339 1807 286 852 25880 393 16499 257 1728 835 734 812 2084 11 339 28271 13 198 198 1 40 836 470 760 553 5267 624 8712 13 366 28172 4206 546 326 13 314 766 883 1243 13 632 338 407 1107 257 1263 5490 379 262 2589 13

---

step 40: train loss 4.386545 (took 30.788454 ms)

With CUDA it is super fast: about 31 ms per step, versus roughly 5.5 seconds per step for the CPU run in (2).
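Per-step time alone understates the gap, because the two runs do not process the same number of tokens per step. The rough calculation below takes the logged step times at face value and converts them to tokens per second. The CUDA log prints its shape (batch size 4, sequence length 1024); the CPU log does not, so B=4, T=64 is an assumption, picked because it matches the [State] block of the unit test. The step times are rounded averages read off the logs, and the CPU run used OMP_NUM_THREADS=8.

```python
def tokens_per_second(batch_size: int, seq_len: int, step_ms: float) -> float:
    """Tokens processed per second, given the time for one step in ms."""
    return batch_size * seq_len / (step_ms / 1000.0)


# CPU run: roughly 5.5 s per step; B=4, T=64 is an assumption.
cpu = tokens_per_second(4, 64, 5500.0)
# CUDA run: roughly 30.9 ms per step; B=4, T=1024 as printed in its log.
gpu = tokens_per_second(4, 1024, 30.9)

print(f"CPU  ~{cpu:.0f} tokens/s")
print(f"CUDA ~{gpu:.0f} tokens/s")
print(f"ratio ~{gpu / cpu:.0f}x")
```

Under these assumptions the difference comes out in the thousands rather than the hundreds, since each CUDA step handles 16 times as many tokens as each CPU step.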

3. Summary

Looking through the code, a few things do bother me, such as the missing NULL checks after calls to malloc, but this is a project I'm really looking forward to.
