Super-Easy Stock Price Prediction in Python with Deep Learning (Keras, RNN)

Predict whether tomorrow's stock price will go up or down, super easily, with deep learning (an RNN) in Python using Keras.

1. Installing the tools

```
$ pip install scikit-learn keras tensorflow pandas-datareader
```

Keras needs a backend to run, so TensorFlow is installed as well.

2. Creating the file

pred.py

import numpy as np

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from keras import Sequential

from keras.layers import Dense, SimpleRNN

import pandas_datareader as pdr

from sklearn.metrics import accuracy_score

df = pdr.get_data_yahoo("AAPL", "2010-11-01", "2020-11-01")

df["Diff"] = df.Close.diff()

df["SMA_2"] = df.Close.rolling(2).mean()

df["Force_Index"] = df.Close * df.Volume

df["y"] = df["Diff"].apply(lambda x: 1 if x > 0 else 0).shift(-1)

df = df.drop(

["Open", "High", "Low", "Close", "Volume", "Diff", "Adj Close"],

axis=1,

).dropna()

# print(df)

X = StandardScaler().fit_transform(df.drop(["y"], axis=1))

y = df["y"].values

X_train, X_test, y_train, y_test = train_test_split(

X,

y,

test_size=0.2,

shuffle=False,

)

model = Sequential()

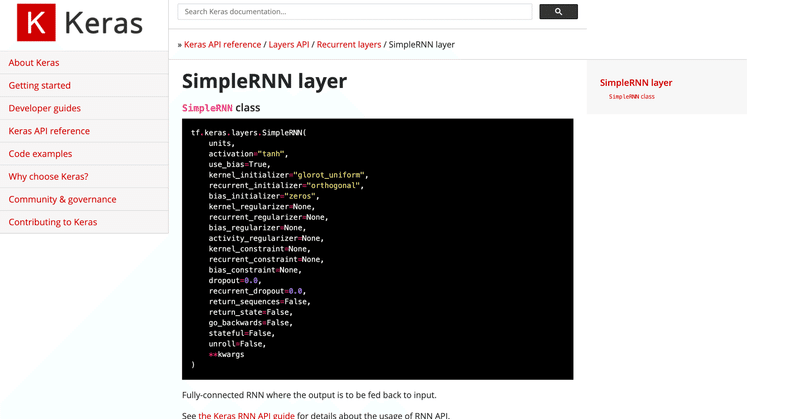

model.add(SimpleRNN(2, input_shape=(X_train.shape[1], 1)))

model.add(Dense(1, activation="sigmoid"))

model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["acc"])

model.fit(X_train[:, :, np.newaxis], y_train, epochs=100)

y_pred = model.predict(X_test[:, :, np.newaxis])

print(accuracy_score(y_test, y_pred > 0.5))3. 実行

```
$ python pred.py
Epoch 1/100
63/63 [==============================] - 0s 1ms/step - loss: 0.6959 - acc: 0.4950
Epoch 2/100
63/63 [==============================] - 0s 2ms/step - loss: 0.6947 - acc: 0.4980
:
Epoch 99/100
63/63 [==============================] - 0s 1ms/step - loss: 0.6920 - acc: 0.5224
Epoch 100/100
63/63 [==============================] - 0s 1ms/step - loss: 0.6921 - acc: 0.5194
0.5376984126984127
```

That's it. Super easy!
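The label and the RNN input in pred.py can be checked on a handful of made-up closing prices. The sketch below is a NumPy-only re-implementation written for illustration (pred.py itself uses pandas, and the function names here are hypothetical): the label for day t is 1 when the close on day t+1 is higher than on day t, the last day gets no label because it has no next day, and a feature matrix of shape (samples, features) becomes (samples, time steps, 1) for `SimpleRNN`.

```python
import numpy as np


def next_day_up_labels(close):
    """Return 1 where the next day's close is higher than today's, else 0.

    The result is one element shorter than `close`, because the last day
    has no next day (pred.py drops that row via shift(-1) + dropna()).
    An unchanged close counts as 0, as with `x > 0` in pred.py.
    """
    close = np.asarray(close, dtype=float)
    return (np.diff(close) > 0).astype(int)


def to_rnn_input(X):
    """Reshape (samples, features) to (samples, time steps, 1).

    Same as X[:, :, np.newaxis] in pred.py: each feature becomes one
    time step carrying a single value.
    """
    return np.asarray(X, dtype=float)[:, :, np.newaxis]


close = [100.0, 101.5, 101.0, 101.0, 103.2]  # made-up closing prices
print(next_day_up_labels(close))  # [1 0 0 1]

X = np.array([[0.1, -0.3], [1.2, 0.4], [-0.5, 0.9]])  # 3 samples, 2 features
print(to_rnn_input(X).shape)  # (3, 2, 1)
```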

4. Results

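I ran the same data and features through XGBoost, DNN, LSTM, GRU, RNN, LogisticRegression, k-nearest neighbor, RandomForest, BernoulliNB, SVM, RGF, MLP, Bagging, Voting, Stacking, LightGBM, TCN and HGBC. MLP came out best, although every model stays close to 0.5 accuracy on the test set: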

| Model | Test accuracy |
|---|---|
| XGBoost | 0.5119047619047619 |
| DNN | 0.5496031746031746 |
| LSTM | 0.5178571428571429 |
| GRU | 0.5138888888888888 |
| RNN | 0.5376984126984127 |
| LogisticRegression | 0.5496031746031746 |
| k-nearest neighbor | 0.5198412698412699 |
| RandomForest | 0.49603174603174605 |
| BernoulliNB | 0.5496031746031746 |
| SVM | 0.5396825396825397 |
| RGF | 0.5158730158730159 |
| MLP | 0.5694444444444444 |
| Bagging | 0.5297619047619048 |
| Voting | 0.5416666666666666 |
| Stacking | 0.5218253968253969 |
| LightGBM | 0.5456349206349206 |
| TCN | 0.5198412698412699 |
| HGBC | 0.5 |
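Because all of these accuracies sit near 0.5, a useful reference point is the score of a "model" that always predicts the most frequent label of the training set. A minimal sketch, assuming the labels are the 0/1 arrays `y_train` and `y_test` from the chronological split in pred.py; the function name is hypothetical and the example labels are made up, not the AAPL data:

```python
import numpy as np


def majority_baseline_accuracy(y_train, y_test):
    """Accuracy of always predicting the most frequent training label."""
    y_train = np.asarray(y_train, dtype=int)
    y_test = np.asarray(y_test, dtype=int)
    # Most frequent class among the training labels (a tie goes to 0)
    majority = np.bincount(y_train, minlength=2).argmax()
    return float(np.mean(y_test == majority))


# Made-up labels for illustration only
y_train = [1, 1, 0, 1, 0, 1]
y_test = [1, 0, 1, 1]
print(majority_baseline_accuracy(y_train, y_test))  # 0.75
```

Comparing each row of the table with this number, rather than with 0.5, shows whether a model has learned anything beyond the balance of up days and down days in the test period.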

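In pred.py the `StandardScaler` is fitted on every row before the split, so the mean and standard deviation of the test period leak into the training features. A sketch of the usual alternative follows: split chronologically first, then standardize both sets with statistics from the training rows only. It is written in plain NumPy (an assumption made so the arithmetic is visible; the helper names are hypothetical), using the population standard deviation as `StandardScaler` does and rounding the test size up as `train_test_split` does.

```python
import numpy as np


def chronological_split(X, y, test_size=0.2):
    """Split without shuffling: the last `test_size` fraction of rows is the test set."""
    n_test = max(1, int(np.ceil(len(X) * test_size)))  # rounded up
    return X[:-n_test], X[-n_test:], y[:-n_test], y[-n_test:]


def standardize_train_test(X_train, X_test):
    """Standardize both sets with the mean/std of the training rows only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)  # population std (ddof=0)
    std[std == 0] = 1.0        # leave constant columns unscaled
    return (X_train - mean) / std, (X_test - mean) / std


X = np.arange(20, dtype=float).reshape(10, 2)  # 10 made-up days, 2 features
y = np.array([0, 1, 1, 0, 1, 0, 1, 1, 0, 1])   # made-up labels

X_train, X_test, y_train, y_test = chronological_split(X, y, test_size=0.2)
X_train_s, X_test_s = standardize_train_test(X_train, X_test)
print(X_train.shape, X_test.shape)  # (8, 2) (2, 2)
print(np.allclose(X_train_s.mean(axis=0), 0.0))  # True
```

To use this in pred.py, `X` would be `df.drop(["y"], axis=1).values` and `y` would be `df["y"].values`; the resulting accuracies would likely differ somewhat from those in the table above.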