コピー機学習法による影LoRA作成

こんにちは、コピー機LoRAにドはまりしているとりにくです。

先日相互フォロワーである抹茶もなかさんがこんなつぶやきをしていたのが気になって実践してみました。

コピー機LoRAそれこそ1影だけ付けるとか光効果だけつけるとかを少ないデータセットでできそう

— 抹茶もなか (@GianMattya) December 12, 2023

使用データ

作業ファイルご案内ですーhttps://t.co/BIozXrw91N

— ももろみ(ロミナ毅流/桃屋聖)日:東エ39a (@tmh_red) December 15, 2023

観られて困るファイルでもないのでオープンでお渡ししてしまいます

敢えてのグリザイユアニメ塗り+α仕上げとなっております

他の版も必要でしたら加工しますのでお申し付けくださいー

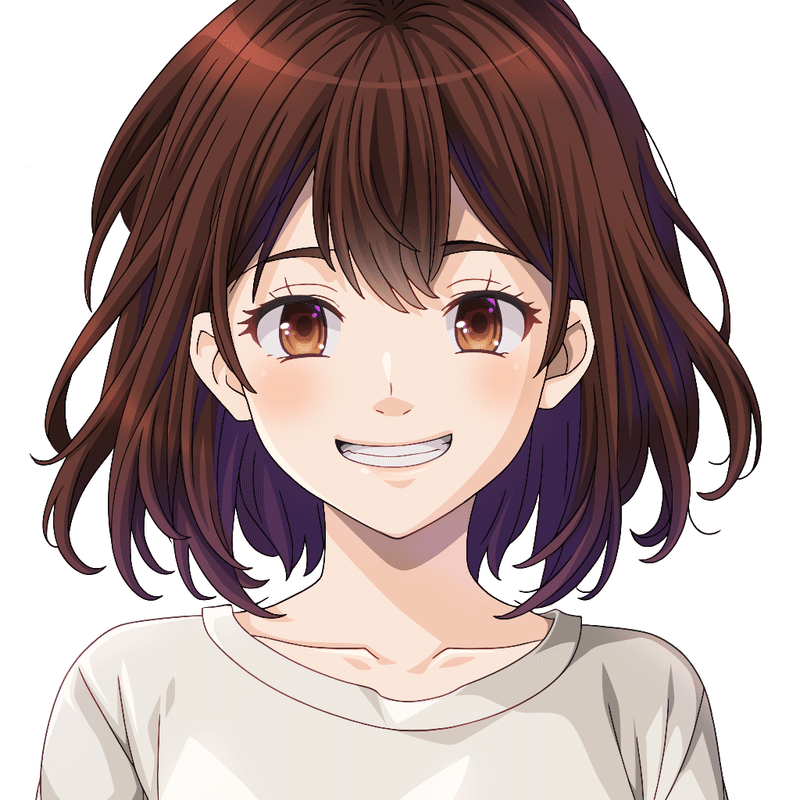

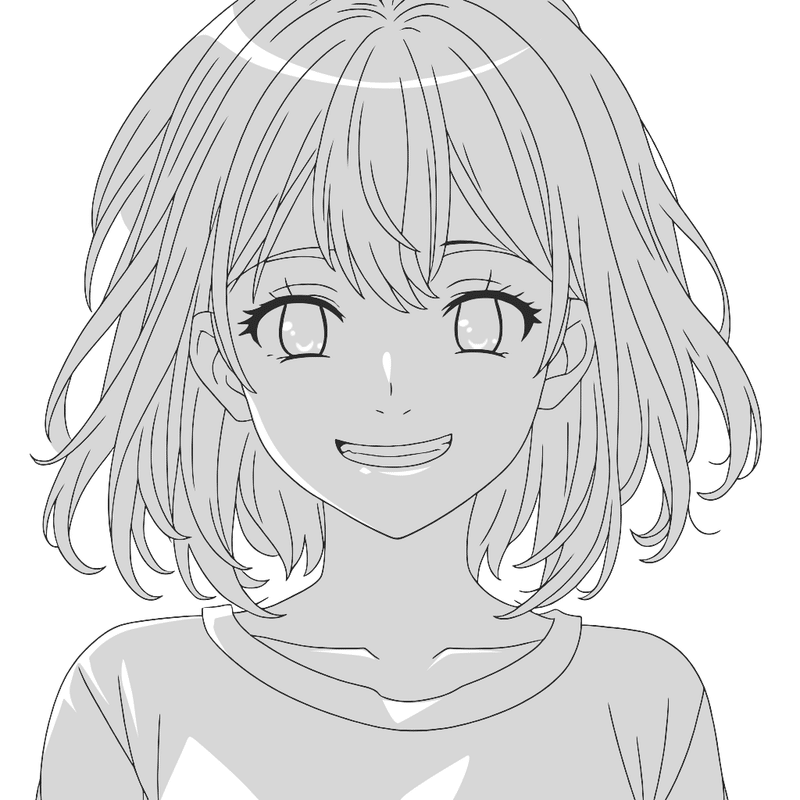

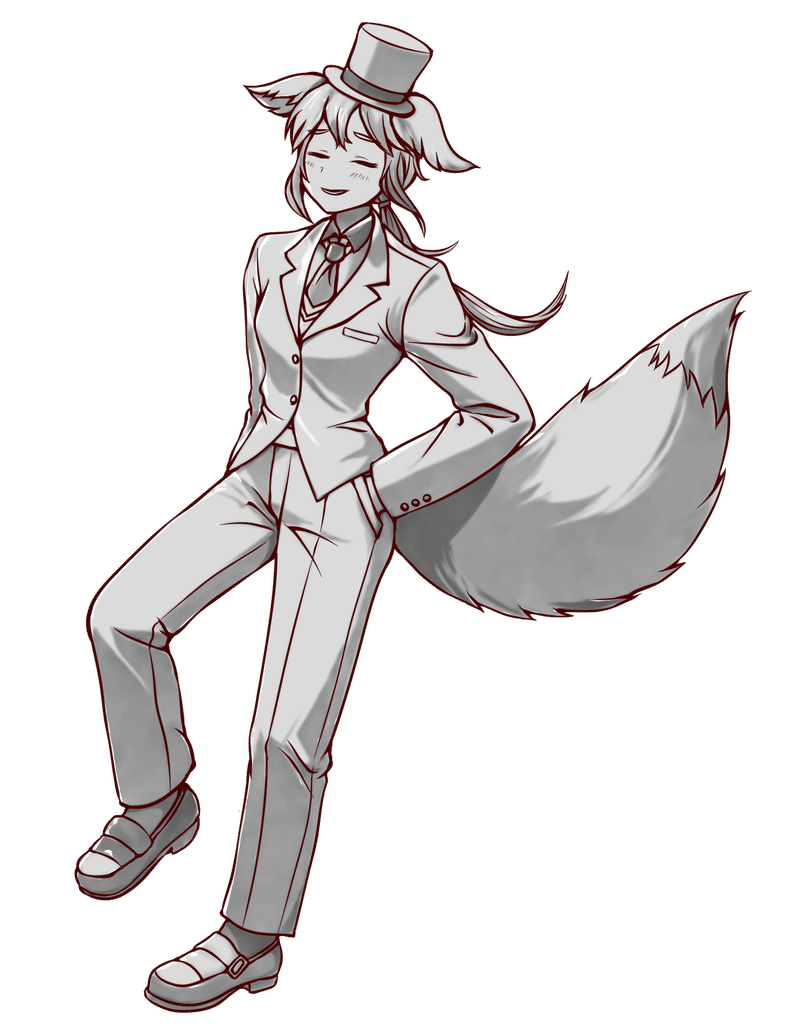

上記をベースにこんな感じの画像を作ってみました。

ももろみさん画像

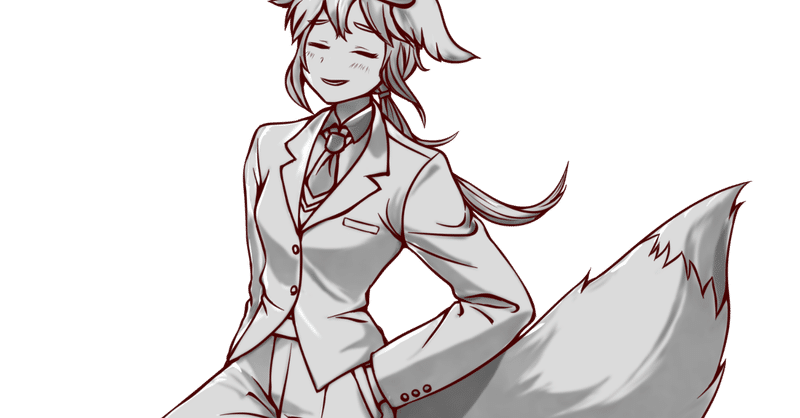

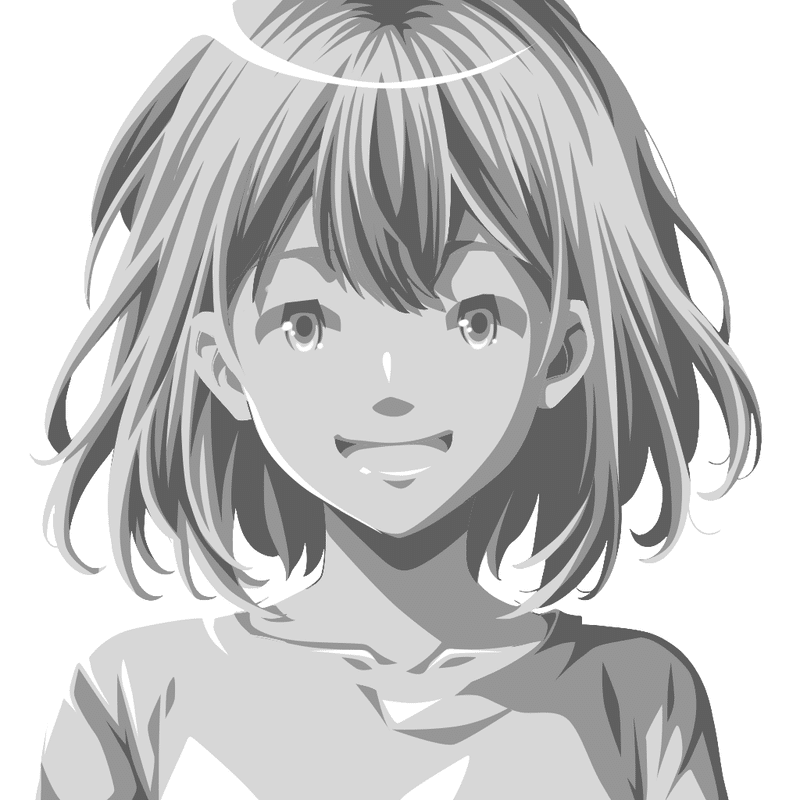

検証に使った線画

検証

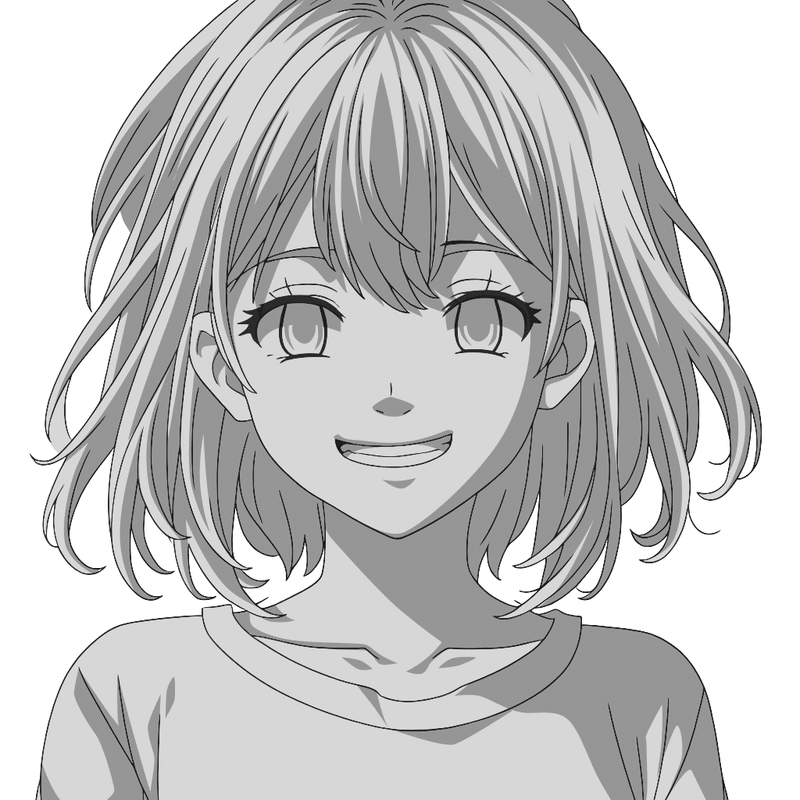

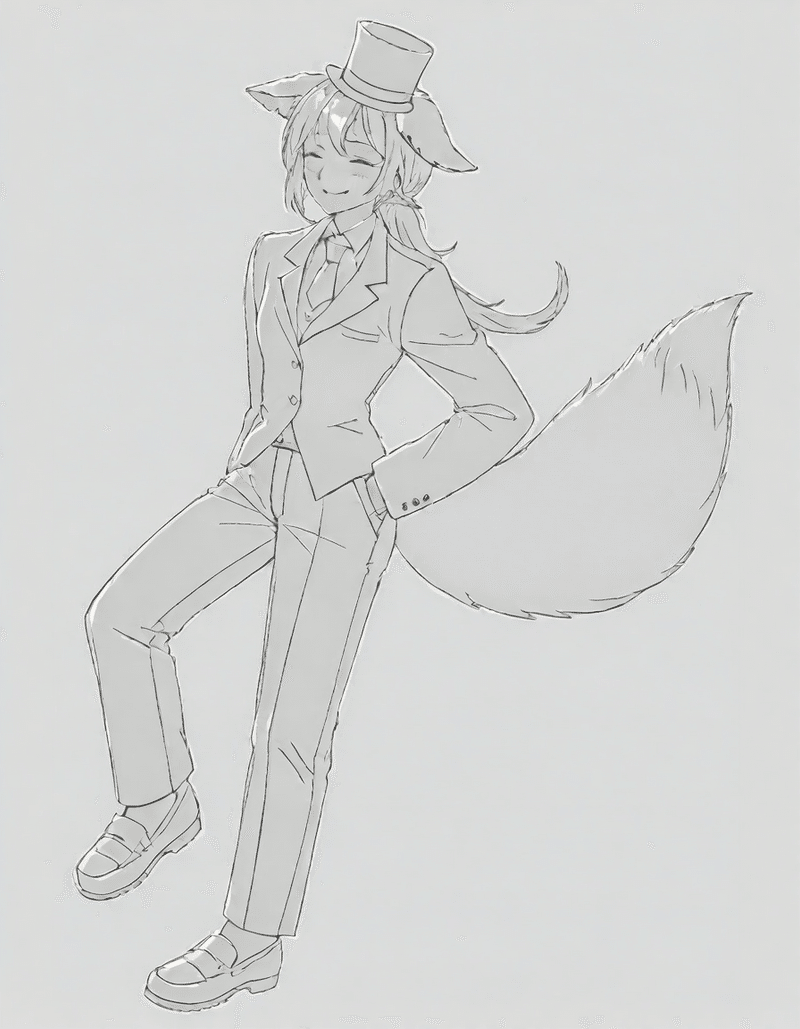

SD1.5

prompt:

monochrome, greyscale, animal ears, solo, tail, 1girl, necktie, closed eyes, pants, hat, shirt, footwear, pants, full body, white background, shoes, jacket, headwear, smile, simple background, hand in pocket, jacket, long hair, necktie, collared shirt, fox tail, loafers, long sleeves, low ponytail, fox girl, top hat, ponytail, vest, closed mouth, fox ears, formal, suit, official alternate costume, vest, bangs

Negative prompt: EasyNegative

Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 1404191844, Size: 448x576, Model hash: 1749adc5b4, Model: SDHK04, VAE hash: 538255c0d5, VAE: kl-f8-anime2.ckpt, ControlNet 0: "Module: lineart_standard (from white bg & black line), Model: control_v11p_sd15_lineart [43d4be0d], Weight: 1, Resize Mode: Crop and Resize, Low Vram: False, Processor Res: 512, Guidance Start: 0, Guidance End: 1, Pixel Perfect: True, Control Mode: Balanced, Save Detected Map: True", Lora hashes: "1kage: 0c917d990d51", TI hashes: "EasyNegative: c74b4e810b03", Version: v1.6.0-2-g4afaaf8a

う、うーーーん!悪くはないんですけど解像度がたりず細かいところがつぶれてしまいます。ここら辺はSD1.5の仕様なので仕方ない気がします。

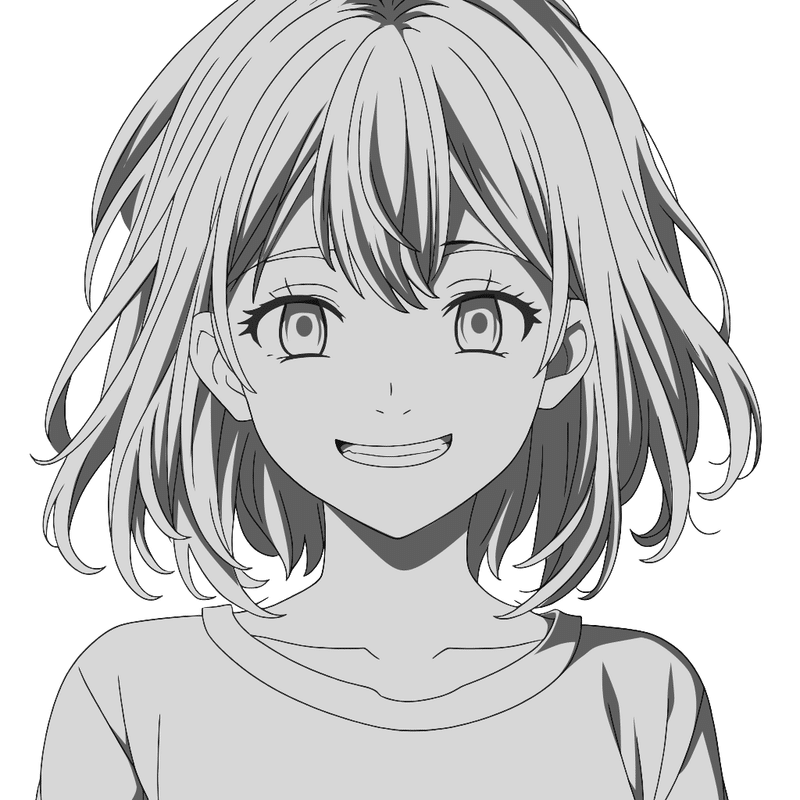

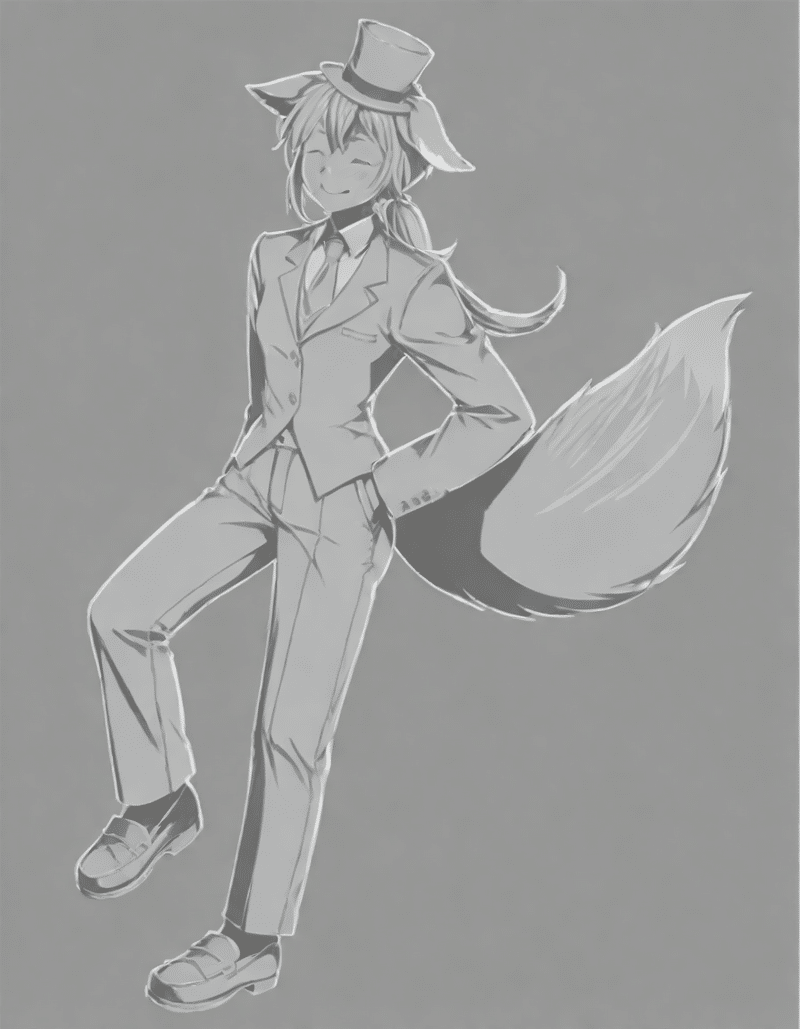

SDXL

prompt:

monochrome, greyscale, animal ears, solo, tail, 1girl, necktie, closed eyes, pants, hat, shirt, footwear, pants, full body, white background, shoes, jacket, headwear, smile, simple background, hand in pocket, jacket, long hair, necktie, collared shirt, fox tail, loafers, long sleeves, low ponytail, fox girl, top hat, ponytail, vest, closed mouth, fox ears, formal, suit, official alternate costume, bangs SimplepositiveXLv1

Negative prompt:

negativeXL_D sdxl-negprompt8-v1m unaestheticXL_AYv1

Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 2883886449, Size: 896x1152, Model hash: 9025c79626, Model: CrazyXL, ControlNet 0: "Module: canny, Model: sai_xl_canny_256lora [566f20af], Weight: 1, Resize Mode: Crop and Resize, Low Vram: False, Processor Res: 512, Threshold A: 100, Threshold B: 200, Guidance Start: 0, Guidance End: 1, Pixel Perfect: True, Control Mode: Balanced, Save Detected Map: True", Lora hashes: "SDXL_kage: bc929267287d", TI hashes: "SimplepositiveXLv1: 049fb42b64c9, SimplepositiveXLv1: 049fb42b64c9, negativeXL_D: fff5d51ab655, sdxl-negprompt8-v1m: 24350b43a034, unaestheticXL_AYv1: 8a94b6725117, negativeXL_D: fff5d51ab655, sdxl-negprompt8-v1m: 24350b43a034, unaestheticXL_AYv1: 8a94b6725117", Version: v1.6.0-2-g4afaaf8a

上記の結果をそのまま使うのは難しそうだったので、ひたすら生成AIの出力を加筆&修正してこんな感じになりました。

簡単レシピ

1つのLoRAを学習するのに、SD1.5は30分、SDXLは70分かかる。

git clone -b sdxl https://github.com/kohya-ss/sd-scripts.git

cd sd-scripts

python -m venv venv

.\venv\Scripts\activate

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117

pip install -U xformers

pip install --upgrade -r requirements.txt

python -m pip install bitsandbytes==0.41.1 --prefer-binary --extra-index-url=https://jllllll.github.io/bitsandbytes-windows-webui

accelerate config

- This machine

- No distributed training

- NO

- NO

- NO

- all

- fp16フォルダ名は適当(繰り返し数は適当に10000とかでOK、step数指定なので)

1024,768,512にそれぞれリサイズして保存。キャプションは適当につけておく。

copi-ki.toml(GPUによってtrain_batch_sizeは変えること)

pretrained_model_name_or_path = "C:/stable-diffusion-webui/models/Stable-diffusion/SDHK04.safetensors"

network_module = "networks.lora"

xformers = true

persistent_data_loader_workers = true

max_data_loader_n_workers = 12

enable_bucket = true

save_model_as = "safetensors"

lr_scheduler_num_cycles = 4

mixed_precision = "fp16"

learning_rate = 0.0001

resolution = "512,512"

train_batch_size = 12

network_dim = 128

network_alpha = 128

optimizer_type = "AdamW8bit"

bucket_no_upscale = true

clip_skip = 2

save_precision = "fp16"

lr_scheduler = "linear"

min_bucket_reso = 64

max_bucket_reso = 1024

caption_extension = ".txt"

seed = 42

network_train_unet_only = truecopi-ki_SDXL.toml(GPUによってtrain_batch_sizeは変えること)

pretrained_model_name_or_path = "C:/stable-diffusion-webui/models/Stable-diffusion/blue_pencil-XL-v2.0.0.safetensors"

network_module = "networks.lora"

xformers = true

gradient_checkpointing = true

persistent_data_loader_workers = true

max_data_loader_n_workers = 12

enable_bucket = true

save_model_as = "safetensors"

lr_scheduler_num_cycles = 4

mixed_precision = "fp16"

learning_rate = 0.0001

resolution = "512,512"

train_batch_size = 12

network_dim = 64

network_alpha =64

optimizer_type = "AdamW8bit"

bucket_no_upscale = true

save_precision = "fp16"

lr_scheduler = "linear"

min_bucket_reso = 64

max_bucket_reso = 1024

caption_extension = ".txt"

seed = 42

network_train_unet_only = true

no_half_vae = trueコマンド一覧

#SD1.5学習

accelerate launch ^

--num_cpu_threads_per_process 12 ^

train_network.py ^

--config_file="C:\sd-scripts\user_config\1kage\copi-ki.toml" ^

--train_data_dir="C:\sd-scripts\user_config\1kage\base" ^

--output_dir="C:\sd-scripts\user_config\output\1kage" ^

--output_name=copi-ki-base ^

--max_train_steps 1500

#SDXL学習

accelerate launch ^

--num_cpu_threads_per_process 12 ^

sdxl_train_network.py ^

--config_file="C:\sd-scripts\user_config\1kage\copi-ki_SDXL" ^

--train_data_dir="C:\sd-scripts\user_config\1kage\HL" ^

--output_dir="C:\sd-scripts\user_config\output\1kage" ^

--output_name=SDXL_copi-ki-HL ^

--max_train_steps 1500

#絵柄の差分抽出

python .\networks\merge_lora.py ^

--save_to "C:\sd-scripts\user_config\output\style\〇〇.safetensors" ^

--models "C:\sd-scripts\user_config\output\style\copi-ki-〇〇.safetensors" "C:\sd-scripts\user_config\output\style\copi-ki-base.safetensors" ^

--ratios 1.4 -1.4 ^

--concat ^

--shuffle ^

--save_precision fp16

#LoRAのリサイズ

python .\networks\svd_merge_lora.pyy ^

--save_to "C:\sd-scripts\user_config\output\style\〇〇_128.safetensors" ^

--models "C:\sd-scripts\user_config\output\style\〇〇.safetensors"

--ratios 1

--new_rank 128

--device cuda

--save_precision fp16この記事が気に入ったらサポートをしてみませんか?