日本語をAIの呪文に変換するLLMシステムの開発

最近全くローカルLLMを触っていなかったのですが、Gemma2-9B系がすごいらしいぞ!という噂を聞いて興味を持ったのでチャレンジしてみました。

環境構築

Ollamaの使用を勧められましたが、将来的にはビルドできるアプリにしたかったので、llama-cpp-pythonで環境構築しました。

CUDA12.1導入済み前提です。

python -m venv venv

venv\Scripts\activate

pip install sentence-transformers faiss-cpu numpy einops pandas

pip install llama-cpp-python --extra-index-url https://abetlen.github.io/llama-cpp-python/whl/cu121RAG用のデータセットをpickleに変換する

よくわからんがRAGというのはLLMが使えるカンニングペーパーみたいなやつらしい。

pickleGEN.py

import pandas as pd

# Parquetファイルを読み込む

df = pd.read_parquet("hf://datasets/isek-ai/danbooru-wiki-2024/data/train-00000-of-00001.parquet")

# DataFrameをPickle形式で保存

df.to_pickle("danbooru-wiki-2024_df.pkl")python pickleGEN.py推論コード

tagGEN.py

初回だけRAG(faissファイル?)を作るのに30分ほどかかります。

import csv

from pathlib import Path

import re

from sentence_transformers import SentenceTransformer

import faiss

from llama_cpp import Llama

import numpy as np

import pandas as pd

import os

# model_pathをグローバル変数として定義

model_path = "gemma-2-9b-it-Q6_K.gguf"

class CustomEmbeddings:

def __init__(self, model):

self.model = model

def embed_documents(self, texts):

return np.array(self.model.encode(texts), dtype=np.float32)

def embed_query(self, text):

return np.array(self.model.encode([text]), dtype=np.float32)

def load_danbooru_tags(file_path):

df = pd.read_pickle(file_path)

for _, row in df.iterrows():

yield (row['title'], row['body'], row['other_names'])

def create_vector_store(tags_generator):

model = SentenceTransformer("nomic-ai/nomic-embed-text-v1.5", trust_remote_code=True)

embeddings = CustomEmbeddings(model)

documents = []

vectors = []

for tag, text, other_names in tags_generator:

doc = f"{tag}: {text} (Other names: {', '.join(other_names) if isinstance(other_names, list) else other_names})"

documents.append(doc)

vectors.append(embeddings.embed_documents([doc])[0])

index = faiss.IndexFlatL2(len(vectors[0]))

index.add(np.array(vectors))

return index, documents, embeddings

# RAGシステムのセットアップ

def setup_rag_system(index, documents, embeddings, num_of_ref_tags=20):

llm = Llama(model_path=model_path, n_ctx=8192, n_batch=512)

def retrieve(query, k=num_of_ref_tags):

query_vector = embeddings.embed_query(query)

D, I = index.search(query_vector, k)

return [documents[i] for i in I[0]]

def generate_response(query, context):

prompt = f"""

You are a Danbooru tag matcher. Find exact matches in the context for the input.

Rules:

1. Return only exact matches from the context.

2. Check main tags and "Other names".

3. If no match, return input as is.

4. Provide one tag output per input.

5. Match tag names or other names, not descriptions.

6. For character counts and groups:

- Use 'solo' for a single character (human, humanoid, or non-humanoid)

- Use '1boy' for a single male character or humanoid male-presenting character (including orcs, elves, etc.)

- Use '1girl' for a single female character or humanoid female-presenting character

- Use '1other' for a single non-humanoid character

- For other character counts, use appropriate tags like '2boys', 'multiple_girls', etc.

7. Prioritize character count and group tags over other matches if applicable.

8. When using '1boy', '1girl', or '1other', also include the 'solo' tag.

9. Do not use square brackets or double square brackets around tags.

10. Match all relevant tags, including those for background, clothing, expressions, and actions.

11. For humanoid characters like orcs, elves, etc., use appropriate character count tags ('1boy', '1girl', etc.) along with their species tag.

Context: {', '.join(context)}

Input: {query}

Output:

"""

response = llm(prompt, max_tokens=50, stop=["\n"])

cleaned_response = re.sub(r'\[+|\]+', '', response["choices"][0]["text"].strip())

# Additional logic to ensure '1boy' is included for male humanoid characters

if 'orc' in query.lower() and 'male' in query.lower() and '1boy' not in cleaned_response:

cleaned_response = '1boy, ' + cleaned_response

if '1boy' in cleaned_response and 'solo' not in cleaned_response:

cleaned_response = 'solo, ' + cleaned_response

return cleaned_response

return retrieve, generate_response # この行を追加

def generate_rich_description(scene_description):

llm = Llama(model_path=model_path, n_ctx=8192, n_batch=512, tensor_split=[48, 0, 0])

prompt = f"""

Generate a detailed comma-separated list of up to 20 Danbooru tags for this scene. Include character count, group tags, and all relevant details:

- Use 'solo' for a single character (human, humanoid, or non-humanoid)

- Use '1boy' or '1girl' for a single human or humanoid character (including orcs, elves, etc.) along with 'solo'

- Use '1other' for a single non-humanoid character along with 'solo'

- Use appropriate tags for multiple characters ('2boys', 'multiple_girls', etc.)

- Include species tags (e.g., 'orc', 'elf') along with character count tags when applicable

Combine these tags with other relevant tags describing the scene, actions, environment, clothing, facial expressions, body types, and any other notable details.

Be comprehensive and include all relevant details from the scene description.

Scene description: {scene_description}

Tags (up to 20):

"""

response = llm(prompt, max_tokens=300, stop=["\n\n"])

return response["choices"][0]["text"].strip()

def convert_description_to_danbooru_tags(prompt_elements, retrieve, generate_response, max_tags=20):

elements = [elem.strip() for elem in prompt_elements.split(",")][:max_tags]

danbooru_tags = []

seen = set()

for element in elements:

context = retrieve(element)

tag = generate_response(element, context)

# Remove ** from the tag

tag = re.sub(r'\*+', '', tag)

if tag and tag not in seen:

danbooru_tags.append(tag)

seen.add(tag)

print(f"{element}: {tag}")

return ", ".join(danbooru_tags)

if __name__ == "__main__":

# model_pathからベース名を取得し、拡張子を.faissに変更

base_name = os.path.splitext(os.path.basename(model_path))[0]

vector_store_path = Path(f"{base_name}.faiss")

if vector_store_path.exists():

index = faiss.read_index(str(vector_store_path))

tags = load_danbooru_tags("danbooru-wiki-2024_df.pkl")

model = SentenceTransformer("nomic-ai/nomic-embed-text-v1.5", trust_remote_code=True)

embeddings = CustomEmbeddings(model)

# ここで documents を再作成

documents = [f"{tag}: {text} (Other names: {', '.join(other_names) if isinstance(other_names, list) else other_names})"

for tag, text, other_names in tags]

else:

tags = load_danbooru_tags("danbooru-wiki-2024_df.pkl")

index, documents, embeddings = create_vector_store(tags)

faiss.write_index(index, str(vector_store_path))

retrieve, generate_response = setup_rag_system(index, documents, embeddings, num_of_ref_tags=20)

scene_description = "一人の男、orc、筋肉質、赤面、警察官の制服、黒いネクタイ、青いシャツ、長袖、敬礼、牙、短髪、髭、シンプルな背景、白背景。色のついた肌。緑色の肌"

prompt_elements = generate_rich_description(scene_description)

print(f"Generated Danbooru Tags:\n{prompt_elements}")

danbooru_tags = convert_description_to_danbooru_tags(prompt_elements, retrieve, generate_response)

print(f"\nMatched Danbooru Tags:\n{danbooru_tags}")

# リソースの解放

del index

del retrieve

del generate_responsepython tagGEN.py結果

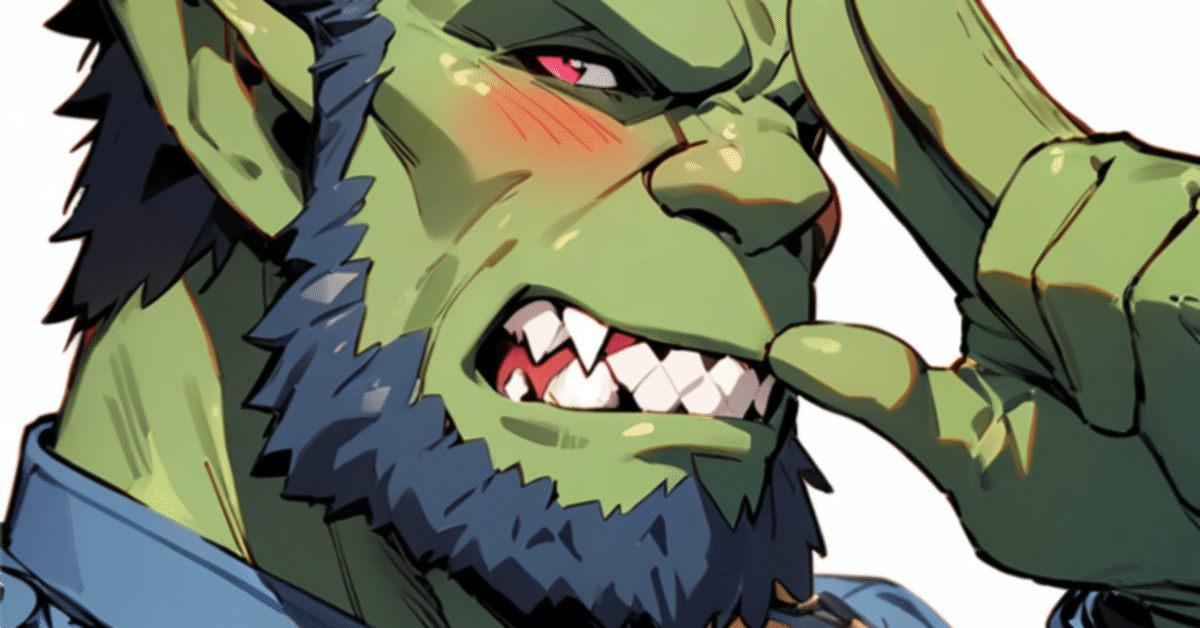

入力:一人の男、orc、筋肉質、赤面、警察官の制服、黒いネクタイ、青いシャツ、長袖、敬礼、牙、短髪、髭、シンプルな背景、白背景。色のついた肌。緑色の肌

出力:solo, 1boy, solo, orc, muscular, blush, police, uniform, black_necktie, blue_shirt, long_sleeves, straight-arm salute, fangs, short_hair, beard, white_background, simple_background, green_skin

うーん、まだ改良の余地はありますが結構いい感じなような?

もっと性能をよくするにはモデルの性能を良いものにしたり、

直接の答えではないですが wiki内の[[]]で囲まれた部分は他のタグへのリンクですので、簡単に関連するタグのグラフを作れます これはLLMを用いる必要すらなく、簡単に追加の知識として近傍タグを渡すことができます 単に最近傍だけ抽出して知識に足すだけでも良いです

とのことですが、今の僕には理解できないので賢い人に任せます。

検証にご協力いただいた、Plat様、かみやま様、Kohya様(順不同)、本当にありがとうございました!

この記事が気に入ったらサポートをしてみませんか?