OpenInterpreter / ついにAIがガチのアシスタントに!これは凄い、というか凄すぎる

凄いものが出てきてしまった。

ChatGPTの「Code Interpreter」が話題になったが、あれはあくまでクラウド上で動いているだけ。それを模してローカルで動作するようになった「Open Interpreter」は、衝撃的な成果である。

Open Interpreterのインストールは簡単。コマンド一発だ

$ pip install open-interpreter起動も簡単

$ interpreter -yこれだけでOK。

あとはなんでもやってくれる。

たとえばどんなことができるのかというと、「AppleとMetaの株価の推移をグラフ化してくれ」と言うとネットから自動的に情報をとってきてPythonコード書いてグラフをプロットしてくれる。

凄いのは、ローカルで動くのでたとえばApplescriptを使ってmacOSで動いているアプリを直接起動したり操作したりできる。「Keynoteでこういうスライドを作って」と言うとなかなかいい線までいくく

うまくプロンプトを考えれば相当なことができるので、試しにWebブラウザを操作して情報を取ってこれるかやってみた。

OpenAI APIキーを入力する場面があるが、入力しない場合llama2などを自動的に取ってくる。優れものすぎる

$ interpreter -y

Welcome to Open Interpreter.

────────────────────────────────────────────────────────────────────────────────

▌ OpenAI API key not found

To use GPT-4 (recommended) please provide an OpenAI API key.

To use Code-Llama (free but less capable) press enter.

────────────────────────────────────────────────────────────────────────────────

OpenAI API key: *************************************

Tip: To save this key for later, run export OPENAI_API_KEY=your_api_key on

Mac/Linux or setx OPENAI_API_KEY your_api_key on Windows.

────────────────────────────────────────────────────────────────────────────────

> open chrome with selenium without headless,and go to yahoo.co.jp, using driver = webdriver.Chrome() するとこんな感じになる

To achieve this, we need to follow these steps:

1 Install selenium package if it's not already installed.

2 Import necessary modules from selenium.

3 Initialize the Chrome webdriver.

4 Open yahoo.co.jp in Chrome.

Let's start by installing selenium.

pip install selenium█

pip install selenium█

Requirement already satisfied: selenium in

pip install selenium█

Requirement already satisfied: selenium in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(4.12.0)

Requirement already satisfied: urllib3<3,>=1.26 in

pip install selenium█

Requirement already satisfied: selenium in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(4.12.0)

Requirement already satisfied: urllib3<3,>=1.26 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (1.26.16)

Requirement already satisfied: trio~=0.17 in

pip install selenium█

Output truncated. Showing the last 2000 characters.

conda3-4.7.12/envs/ldm310/lib/python3.10/site-packages (4.12.0)

Requirement already satisfied: urllib3<3,>=1.26 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (1.26.16)

Requirement already satisfied: trio~=0.17 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (0.22.2)

Requirement already satisfied: trio-websocket~=0.9 in

pip install selenium█

Output truncated. Showing the last 2000 characters.

ite-packages (from selenium) (1.26.16)

Requirement already satisfied: trio~=0.17 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (0.22.2)

Requirement already satisfied: trio-websocket~=0.9 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (0.10.4)

Requirement already satisfied: certifi>=2021.10.8 in

pip install selenium█

Output truncated. Showing the last 2000 characters.

ite-packages (from selenium) (1.26.16)

Requirement already satisfied: trio~=0.17 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (0.22.2)

Requirement already satisfied: trio-websocket~=0.9 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (0.10.4)

Requirement already satisfied: certifi>=2021.10.8 in

pip install selenium

Output truncated. Showing the last 2000 characters.

ite-packages (from selenium) (1.26.16)

Requirement already satisfied: trio~=0.17 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (0.22.2)

Requirement already satisfied: trio-websocket~=0.9 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (0.10.4)

Requirement already satisfied: certifi>=2021.10.8 in

pip install selenium

Output truncated. Showing the last 2000 characters.

ite-packages (from selenium) (1.26.16)

Requirement already satisfied: trio~=0.17 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (0.22.2)

Requirement already satisfied: trio-websocket~=0.9 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (0.10.4)

Requirement already satisfied: certifi>=2021.10.8 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from selenium) (2023.5.7)

Requirement already satisfied: attrs>=20.1.0 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from trio~=0.17->selenium) (23.1.0)

Requirement already satisfied: sortedcontainers in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from trio~=0.17->selenium) (2.4.0)

Requirement already satisfied: idna in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from trio~=0.17->selenium) (3.4)

Requirement already satisfied: outcome in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from trio~=0.17->selenium) (1.2.0)

Requirement already satisfied: sniffio in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from trio~=0.17->selenium) (1.3.0)

Requirement already satisfied: exceptiongroup>=1.0.0rc9 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from trio~=0.17->selenium) (1.1.3)

Requirement already satisfied: wsproto>=0.14 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from trio-websocket~=0.9->selenium) (1.2.0)

Requirement already satisfied: PySocks!=1.5.7,<2.0,>=1.5.6 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from urllib3<3,>=1.26->selenium) (1.7.1)

Requirement already satisfied: h11<1,>=0.9.0 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from wsproto>=0.14->trio-websocket~=0.9->selenium) (0.14.0)

Selenium has been installed successfully. Now, let's import the necessary

modules from selenium and initialize the Chrome webdriver. Please note that

this requires the ChromeDriver executable to be in the system PATH. If it's

not, we'll need to specify its location directly.

Also, please note that running this code will open a new Chrome window on

your machine.

from selenium import webdriver

# Initialize the Chrome webdriver

driver = webdriver.Chrome()

# Open yahoo.co.jp in Chrome

driver.get('https://www.yahoo.co.jp/')

The code has been executed successfully. A new Chrome window should have

opened on your machine, and it should be displaying the homepage of

yahoo.co.jp. Please check your machine to confirm this.

SeleniumというPythonから操作できるWebブラウザを自動的にインストールし、yahoo.co.jpを開いた。

次に入力フィールドを発見させる

find input field with selenium 4.1.0

To find an input field on a webpage using Selenium, we can use various

methods such as finding the element by its name, id, class name, or xpath.

However, without knowing the specific input field you're looking for on

yahoo.co.jp, it's hard to provide an exact code.

As a general example, here's how you can find an input field by its name:

input_field = driver.find_element_by_name('input_field_name')

If you could provide more details about the specific input field you're

looking for, I could provide a more accurate code. 「seleniumにはスクレイピングする機能がありません」というので、やり方を教える

「yahoo.co.jpに直接アクセスして入力フィールドの名前を取得しろ」

> access to yahoo.co.jp directly and find name of input field

To find the name of the input field on the yahoo.co.jp homepage, we can

inspect the webpage's HTML.

Here's the plan:

1 Open yahoo.co.jp in a web browser.

2 Right-click on the search box and select "Inspect" or "Inspect Element"

to open the developer tools.

3 In the developer tools, find the highlighted line that corresponds to the

search box. It should be an <input> element.

4 Look for the name attribute of this <input> element. The value of this

attribute is the name of the input field.

Please note that this process involves manual steps that you need to perform

in your web browser. I can guide you through the steps, but I can't perform

them for you.

Let's start with step 1. Please open yahoo.co.jp in your web browser.

> you can do it, please make http request to yahoo.co.jp and analyze html immediately

Alright, let's fetch the HTML content of yahoo.co.jp and analyze it to find

the name of the input field.

Here's the plan:

1 Make an HTTP GET request to yahoo.co.jp to fetch the HTML content.

2 Parse the HTML content to find the input fields.

3 Print the names of the input fields.

Let's start with step 1. We'll use the requests library to make the HTTP

request, and the BeautifulSoup library to parse the HTML content. If these

libraries are not installed, we'll need to install them first.

pip install requests beautifulsoup4

Requirement already satisfied: requests in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(2.31.0)

Requirement already satisfied: beautifulsoup4 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(4.12.2)

Requirement already satisfied: charset-normalizer<4,>=2 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from requests) (3.2.0)

Requirement already satisfied: idna<4,>=2.5 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from requests) (3.4)

Requirement already satisfied: urllib3<3,>=1.21.1 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from requests) (1.26.16)

Requirement already satisfied: certifi>=2017.4.17 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from requests) (2023.5.7)

Requirement already satisfied: soupsieve>1.2 in

./.pyenv/versions/miniconda3-4.7.12/envs/ldm310/lib/python3.10/site-packages

(from beautifulsoup4) (2.4.1)

The requests and BeautifulSoup libraries have been installed successfully.

Now, let's fetch the HTML content of yahoo.co.jp and parse it to find the

input fields.

import requests

from bs4 import BeautifulSoup

# Make an HTTP GET request to yahoo.co.jp

response = requests.get('https://www.yahoo.co.jp/')

# Parse the HTML content

soup = BeautifulSoup(response.text, 'html.parser')

# Find the input fields

input_fields = soup.find_all('input')

# Print the names of the input fields

for field in input_fields:

print(field.get('name'))

p

fr

ei

ts

aq

The names of the input fields on the yahoo.co.jp homepage are 'p', 'fr',

'ei', 'ts', and 'aq'.

The search box is typically associated with the input field named 'p'. You

can use this name to find the search box using Selenium. Here's how you can

do it:

search_box = driver.find_element_by_name('p')

Please replace 'p' with the name of the input field you're interested in if

it's not the search box.

すると入力ボックスの名前がpだと突き止める。

次に、これをseleniumブラウザの情報と突合しろと命じる

> use selenium to get input field from that information

Now that we know the name of the search box input field is 'p', we can use

Selenium to find this input field on the yahoo.co.jp homepage.

Here's the plan:

1 Use the find_element_by_name method of the Selenium webdriver to find the

input field with the name 'p'.

2 Print the type of the found element to confirm that we've found the

correct element.

Let's execute this plan.

# Find the input field with the name 'p'

search_box = driver.find_element_by_name('p')

# Print the type of the found element

type(search_box)

File "<stdin>", line 4, in <module>

AttributeError: 'WebDriver' object has no attribute 'find_element_by_name'

It seems like there was an error because the 'WebDriver' object does not

have a 'find_element_by_name' attribute. This might be due to changes in the

Selenium API in version 4.1.0.

In Selenium 4.1.0, the 'find_element_by_*' methods have been deprecated and

replaced with the 'find_element' method, which takes two arguments: a

locator strategy and a locator value.

Let's modify the code to use the new 'find_element' method with the 'by'

locator strategy and 'name' as the locator value.

from selenium.webdriver.common.by import By

# Find the input field with the name 'p'

search_box = driver.find_element(By.NAME, 'p')

# Print the type of the found element

type(search_box)

<class 'selenium.webdriver.remote.webelement.WebElement'>

The code has been executed successfully. The type of the found element is

'selenium.webdriver.remote.webelement.WebElement', which confirms that we've

found the correct element. This WebElement represents the search box input

field on the yahoo.co.jp homepage. You can interact with this element using

various methods provided by Selenium, such as send_keys to enter text into

the search box, or submit to submit the form. そしたら、サーチボックスに「ビッグモーター」と入力してリターンキーのキーコードを送れと指示する

> set keyword to "ビッグモーター",then send return key code to it

To set the keyword to "ビッグモーター" and send the return key code to the

search box, we can use the send_keys method of the WebElement. This method

can take multiple arguments, which are the sequences of keys to send to the

element.

Here's the plan:

1 Use the send_keys method to enter the keyword "ビッグモーター" into the

search box.

2 Use the send_keys method again to send the return key code to the search

box.

Let's execute this plan. We'll need to import the Keys class from

selenium.webdriver.common.keys to be able to send the return key code.

from selenium.webdriver.common.keys import Keys

# Set the keyword to 'ビッグモーター'

search_box.send_keys('ビッグモーター')

# Send the return key code to the search box

search_box.send_keys(Keys.RETURN)

The code has been executed successfully. The keyword "ビッグモーター" should

have been entered into the search box, and the form should have been

submitted by sending the return key code. As a result, the browser should

now be displaying the search results for "ビッグモーター" on yahoo.co.jp.

Please check your browser to confirm this.

するとサーチボックスに「ビッグモーター」と入力された!震えるね

さらに「ニュースという単語を含むリンクを探せ」と命じる

> find a link it contains 'ニュース'

To find a link that contains the text 'ニュース', we can use the

find_element method of the Selenium webdriver with the 'by' locator strategy

and 'link text' or 'partial link text' as the locator value.

Here's the plan:

1 Use the find_element method to find the link with the text 'ニュース'.

2 Print the href attribute of the found link to confirm that we've found

the correct link.

Let's execute this plan.

# Find the link with the text 'ニュース'

link = driver.find_element(By.PARTIAL_LINK_TEXT, 'ニュース')

# Print the href attribute of the found link

link.get_attribute('href')

'https://news.yahoo.co.jp/search/?p=%E3%83%93%E3%83%83%E3%82%B0%E3%83%A2%E3%

83%BC%E3%82%BF%E3%83%BC&fr=top_ga1_sa&ei=UTF-8&ts=6703&aq=-1&x=nl'

The link that contains the text 'ニュース' has been found successfully. The

href attribute of the link is

'https://news.yahoo.co.jp/search/?p=%E3%83%93%E3%83%83%E3%82%B0%E3%83%A2%E3%

83%BC%E3%82%BF%E3%83%BC&fr=top_ga1_sa&ei=UTF-8&ts=6703&aq=-1&x=nl'. This URL

leads to the search results for "ビッグモーター" in the news section of

yahoo.co.jp.

するとちゃんとビッグモーター関係のニュースのページのURLを取得した。

次に「そのURLに行け」と命じる

> go to that url

To navigate to the URL of the found link, we can use the get method of the

Selenium webdriver. This method takes a URL as an argument and navigates the

browser to that URL.

Here's the plan:

1 Use the get method to navigate to the URL of the found link.

Let's execute this plan.

# Navigate to the URL of the found link

driver.get(link.get_attribute('href'))

The code has been executed successfully. The browser should now be

displaying the page at the URL of the found link, which is the search

results for "ビッグモーター" in the news section of yahoo.co.jp. Please

check your browser to confirm this.

するとビッグモーターに関するニュース一覧ページが表示された

そして「ビッグモーターという単語を含むリンクへ飛べ」と命じる

> find a link contains “ビッグモーター” and goto that page

To find a link that contains the text "ビッグモーター" and navigate to that

page, we can use the find_element method of the Selenium webdriver to find

the link, and the get method to navigate to the URL of the found link.

Here's the plan:

1 Use the find_element method to find the link with the text

"ビッグモーター".

2 Use the get method to navigate to the URL of the found link.

Let's execute this plan.

# Find the link with the text 'ビッグモーター'

link = driver.find_element(By.PARTIAL_LINK_TEXT, 'ビッグモーター')

# Navigate to the URL of the found link

driver.get(link.get_attribute('href'))

The code has been executed successfully. The browser should now be

displaying the page at the URL of the found link, which contains the text

"ビッグモーター". Please check your browser to confirm this.

するとページにジャンプする

「そのページのメインコンテンツを抽出しろ」と命令

> find main contents of that page using beautifulsoup

To find the main contents of the page using BeautifulSoup, we first need to

fetch the HTML content of the current page using Selenium, and then parse it

using BeautifulSoup.

Here's the plan:

1 Use the page_source attribute of the Selenium webdriver to fetch the HTML

content of the current page.

2 Parse the HTML content using BeautifulSoup.

3 Find the main contents of the page. The main contents are usually

contained in a <main> tag, or a <div> tag with a class like

"main-content" or "content". Without knowing the exact structure of the

page, it's hard to provide an exact code. As a starting point, let's try

to find a <main> tag.

Let's execute this plan.

# Fetch the HTML content of the current page

html = driver.page_source

# Parse the HTML content

soup = BeautifulSoup(html, 'html.parser')

# Find the main contents of the page

main_content = soup.find('main')

# Print the main content

print(main_content)█ 「ビッグモーターに関する情報を抽出しろ」と命令

> find content about "ビッグモーター"

To find content about "ビッグモーター", we can search the text content of

the main content for occurrences of the string "ビッグモーター".

Here's the plan:

1 Use the find_all method of the BeautifulSoup object to find all elements

whose text contains "ビッグモーター".

2 Print the text content of the found elements.

Let's execute this plan.

# Find all elements whose text contains 'ビッグモーター'

elements = soup.body(text='ビッグモーター')

# Print the text content of the found elements

for element in elements:

print(element)

It seems like there was no content containing "ビッグモーター" found on the

page. This could be due to several reasons:

1 The content might be loaded dynamically after the initial page load, and

Selenium might not have captured it when fetching the page source.

2 The content might be contained in an HTML attribute rather than the text

content of an element.

3 The content might be contained in a part of the page that we didn't

search. We searched the entire body of the page, but the content might be

contained in a specific part of the page.

Without knowing the exact structure of the page, it's hard to provide an

exact solution. However, we can try a different approach: instead of

searching the text content of the entire body, let's search the text content

of the main content only. Let's execute this plan.

# Find all elements whose text contains 'ビッグモーター' in the main content

elements = main_content(text='ビッグモーター')

# Print the text content of the found elements

for element in elements:

print(element)

It seems like there was still no content containing "ビッグモーター" found

in the main content of the page. This could be due to the reasons mentioned

earlier.

Another possible reason is that the text "ビッグモーター" might be part of a

larger string, and the text parameter of the find_all method might not be

able to find it. We can try using a regular expression to search for any

string that contains "ビッグモーター".

Let's execute this plan.

import re

import re

import re

import re

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

# Print the text content of the found elements

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

# Print the text content of the found elements

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

# Print the text content of the found elements

for element in elements:

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

# Print the text content of the found elements

for element in elements:

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

# Print the text content of the found elements

for element in elements:

print(element)█

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

# Print the text content of the found elements

for element in elements:

print(element)█

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

# Print the text content of the found elements

for element in elements:

print(element)

import re

# Find all elements whose text contains 'ビッグモーター' in the main content

bigmotor_regex = re.compile('.*ビッグモーター.*')

elements = main_content(text=bigmotor_regex)

# Print the text content of the found elements

for element in elements:

print(element)

ビッグモーターが岐阜・大垣の新店舗オープン取りやめ 今年11月開業予定

ビッグモーターが今年11月にオープンを予定していた岐阜県大垣市の店舗について

、出店を取りやめたことが分かりました。

販売大手のビッグモーターでは、保険金の不正請求や店舗前の街路樹が枯れているこ

となどが問題となっています。

これを受けてビッグモーターは、これまで被害にあった客や関係者への対応を優先す

ため、今年11月に新たにオープン予定だった大垣店の出店を取りやめるということ

です。

ホームページにオープン予定として掲載されていた店舗情報については、今月5日に

すでに削除されています。

名東区の店舗は「5月に1回除草剤を使用した」

内部調査の結果をビッグモーターが名古屋市に報告

「除草剤をまいていた」ビッグモーターが一宮市に説明 原状回復に向け協議へ

ビッグモーター西尾店前の街路樹が枯れた問題

愛知県が警察に被害届を提出、除草剤の成分検出

ビッグモーター店舗前の街路樹問題で警察に被害相談

名古屋市、4店舗前で「除草剤の成分検出」

ビッグモーター前から除草剤の成分を検出 名古屋市の4店舗で

ビッグモーター、9月オープン新店舗の開業も白紙…「経営状況悪化」が止まらない!

ビッグモーター、福島・岐阜の2店舗出店取りやめ 「被害対応優先」

16日オープン予定が...出店白紙に ビッグモーターいわき平塩店

ビッグモーター出店白紙に 福島、岐阜の2店

【独自】「死刑にします!」「殺してほしいの?」ビッグモーター元従業員が語る“暴

メッセージ”と“除草剤散布”

ビッグモーター、2店舗の出店白紙に

ビッグモーターが全社員に「情報出すな」と誓約書…「違反なら被った損害を賠償」

ビッグモーターが岐阜・大垣の新店舗オープン取りやめ 今年11月開業予定

The content related to "ビッグモーター" has been found and printed

successfully. It includes several pieces of text that mention

"ビッグモーター", which seem to be related to news articles or headlines.

Please note that the printed content is just the text that contains

"ビッグモーター". If you want to extract the full content of the articles or

sections that mention "ビッグモーター", you might need to refine the

BeautifulSoup code to target the specific HTML tags or classes that contain

the articles or sections.

内容を要約してもらう

ここまで来れば日本語でいけそう

> 要約せよ

Based on the extracted content, it appears that the main topic is about a

company called "ビッグモーター" (Big Motor). Here's a summary:

• Big Motor, a major sales company, has decided to cancel the opening of a

new store in Ogaki, Gifu Prefecture, which was scheduled for November

this year.

• The company has been facing issues such as fraudulent insurance claims

and the problem of street trees in front of their stores dying.

• In response to these issues, Big Motor has decided to prioritize dealing

with affected customers and stakeholders, leading to the cancellation of

the new store opening.

• Information about the store, which was listed as "opening soon" on their

website, has already been removed as of the 5th of this month.

• There are also mentions of issues related to the use of herbicides at

their stores, which seems to be a contributing factor to the problems the

company is facing.

Please note that this is a rough summary based on the extracted content. For

a more accurate and detailed summary, it would be best to read the full

articles or content. ずっと英語で喋ってきたので英語で要約されたので、もう一回「日本語で要約しろ」と言ってみる

> 日本語で要約しろ

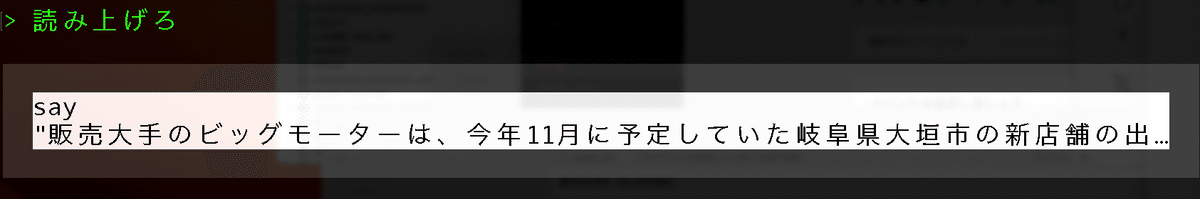

抽出されたコンテンツに基づいて、主な話題は「ビッグモーター」という会社につい

てのようです。以下に要約を示します:

• 販売大手のビッグモーターは、今年11月に予定していた岐阜県大垣市の新店舗

の出店を取りやめることを決定しました。

• この会社は、保険金の不正請求や店舗前の街路樹が枯れるという問題など、いく

つかの問題に直面しています。

• これらの問題に対応するため、ビッグモーターは被害に遭った顧客や関係者への

対応を優先することにし、その結果、新たにオープン予定だった大垣店の出店を

取りやめることになりました。

• 「近日オープン予定」としてホームページに掲載されていた店舗情報は、すでに

月5日に削除されています。

• また、店舗での除草剤の使用に関連する問題も言及されており、これが会社が直

面している問題に寄与しているようです。

この要約は抽出されたコンテンツに基づいており、より正確で詳細な要約を得るため

には、全文またはコンテンツを読むことをお勧めします。 日本語で要約された。

読み上げさせてみる。

読み上げたよ!!!

すげえ!

使ってみた感想としては、「大学生のインターンの上の中」くらいの感覚。

抽象的すぎる指示は無限ループに陥り無駄金(GPT利用料金)を取られるが、具体的な指示に対する反応は早く、場合によっては僕よりもドメイン知識に詳しい(僕はそんなにbeautifulsoupもseleniumも詳しくない)。

何より反応が早い。

これまで大学生のインターンに午前中に指示を出して、昼ごろに確認して指示を出して夕方確認する、という、「3コマンド」しか投げられなかったのだが、Open Interpreterは一時間で10コマンドくらい投げられる。圧倒的なスピードの差がある。

使いこなすにはコツが必要だが、これは本当に大学生のインターンを使ってる感覚に近いので、ガチで「アシスタント」という気がする。本物の大学生の場合、これに加えて「この新しい技術勉強しといて」と言ったりするのだが、それもそのうちできるようになるのかもしれない(今のところそれをするには大規模なVRAMが必要だが)。

推論速度に難があるが、これもいずれ解決されるだろうから、マジで世の中変わってくる予感がする。