The challenges of SLAM for Pretia AR Cloud

What is SLAM?

SLAM (Simultaneous Localization and Mapping) is a methodology that lets robots (or devices) understand the spatial information of their environment through sensors, e.g. by estimating the relative pose between the robot (or device) and a “landmark”.

A few application examples of SLAM: cleaning robots, AR/VR head-mounted devices, and warehouse automation robots.

In the AR Cloud project, we achieve SLAM with a visual (camera-based) method, since the project targets the mobile market.

Why does the AR Cloud project need SLAM?

Let’s have a quick overview of the AR Cloud project’s features:

Attach the virtual objects to the environment

Interact with the virtual objects in the environment

Share the status of these virtual objects with others

Every feature is based on one thing – environment understanding

For example, once the device has the spatial information of the environment, it can attach a virtual object at the correct position, because it knows the relative pose between the device and the virtual object (or landmark).

In short, the AR Cloud project uses SLAM to understand spatial information so that it can place virtual objects in the real-world environment, which is the whole purpose of AR. Since we are targeting the mobile phone market and most mobile phones have only a single camera, our SLAM solution is a monocular visual SLAM solution.

The challenges of SLAM in the AR Cloud project

Even though AR Cloud uses SLAM to achieve its AR purpose, there are still some issues that degrade the performance of the SLAM solution in the AR Cloud project.

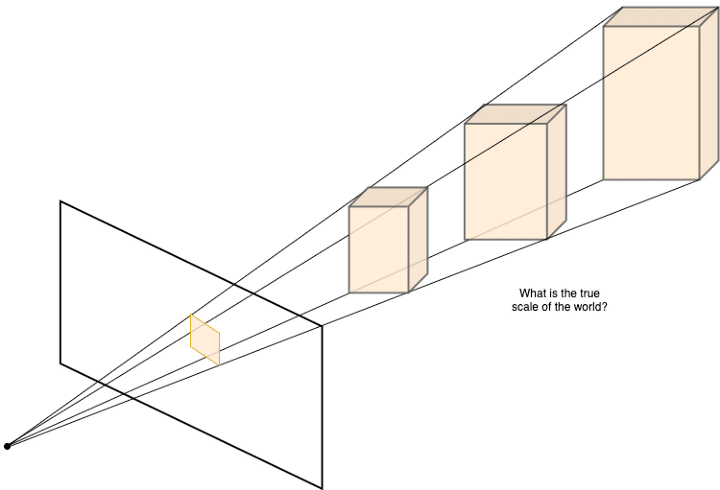

Absolute Scale Recovery

This issue always occurs in pure monocular visual SLAM solutions, since a single-camera configuration cannot recover the scale. (P.S. A stereo camera module can recover the scale.)

Moreover, when we use this uncertain scale in tracking, its error propagates to other landmarks and camera poses, and it keeps propagating endlessly, which means the SLAM system will not converge over time.
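
The ambiguity can be made concrete with the standard pinhole projection model. As a sketch, let $K$ be the camera intrinsics, $(R, \mathbf{t})$ the camera pose, and $\mathbf{X}$ a 3D landmark (these symbols are introduced here for illustration only). Scaling the whole scene and the camera translation by any factor $s > 0$ gives

$$
K\,\bigl(R\,(s\mathbf{X}) + s\,\mathbf{t}\bigr) = s\,K\,\bigl(R\,\mathbf{X} + \mathbf{t}\bigr) \sim K\,\bigl(R\,\mathbf{X} + \mathbf{t}\bigr)
$$

where $\sim$ denotes equality up to the homogeneous scale factor. Every pixel observation is therefore identical for all $s$, so images alone cannot distinguish a small nearby scene from a large distant one.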

So, how can we solve this issue? Below we list two popular ideas for recovering the absolute scale.

From IMU sensors (traditional methodology)

Read the acceleration from the IMU, then integrate it once to get velocity and again to get translation

Recover the absolute scale by comparing the camera motion estimated by SLAM with the translation from the IMU
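
The two IMU steps above can be sketched in a few lines of Python. This is only an illustration under strong assumptions: the acceleration is one-dimensional, already gravity-compensated and bias-free, and sampled at a fixed interval `dt`. The function names are hypothetical and are not part of AR Cloud. In practice, raw double integration drifts quickly, so real systems usually estimate scale jointly with bias and gravity in a visual-inertial optimization.

```python
def integrate_acceleration(accels, dt):
    """Integrate acceleration samples twice (trapezoidal rule).

    accels: acceleration samples in m/s^2 (assumed gravity-compensated, bias-free)
    dt:     sampling interval in seconds
    Returns the displacement in metres, starting from rest.
    """
    velocity = 0.0
    position = 0.0
    prev_a = accels[0]
    for a in accels[1:]:
        # First integration: acceleration -> velocity
        new_velocity = velocity + 0.5 * (prev_a + a) * dt
        # Second integration: velocity -> position
        position += 0.5 * (velocity + new_velocity) * dt
        velocity = new_velocity
        prev_a = a
    return position


def estimate_scale(imu_displacement_m, slam_displacement_units):
    """Metres per SLAM map unit, from the same motion measured both ways."""
    if abs(slam_displacement_units) < 1e-9:
        raise ValueError("camera did not move enough to estimate scale")
    return abs(imu_displacement_m) / abs(slam_displacement_units)


# Example: constant 2 m/s^2 for 1 second, sampled at 100 Hz
samples = [2.0] * 101
imu_disp = integrate_acceleration(samples, dt=0.01)
scale = estimate_scale(imu_disp, slam_displacement_units=0.25)
```

Multiplying every landmark position and camera translation in the map by `scale` would then express the map in metres.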

From reference objects in the camera (2D) image (modern methodology)

Detect semantic object(s) in the 2D image, e.g. a table, a sofa, or maybe a window

Then look up a knowledge database to get an initial guess of the object’s size

Recover the absolute scale by comparing the semantic object observed in the 2D image with the initial guess of its size
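
A minimal Python sketch of the reference-object idea follows. It assumes the object detector and the SLAM map together already give each detected object's height in (unscaled) map units, which is a simplification of the comparison described above. The `TYPICAL_HEIGHT_M` table stands in for the knowledge database, and its values and the function name are illustrative assumptions rather than real AR Cloud data.

```python
from statistics import median

# Hypothetical knowledge database: typical object heights in metres.
# The values are illustrative guesses, not measured data.
TYPICAL_HEIGHT_M = {"table": 0.75, "window": 1.2}


def scale_from_objects(detections):
    """Estimate metres per map unit from detected reference objects.

    detections: list of (label, height_in_map_units) pairs.
    Objects missing from the database or with a non-positive size are skipped.
    """
    ratios = []
    for label, height_units in detections:
        prior_m = TYPICAL_HEIGHT_M.get(label)
        if prior_m is None or height_units <= 0:
            continue
        ratios.append(prior_m / height_units)
    if not ratios:
        raise ValueError("no usable reference object detected")
    # The median is less sensitive than the mean to one badly sized object.
    return median(ratios)


detections = [("table", 0.25), ("window", 0.3), ("lamp", 1.0)]
scale = scale_from_objects(detections)
```

Because the database only holds an initial guess of each size, the result is itself an approximation; using several objects and a robust statistic such as the median is one way to reduce the effect of an atypical object.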

Relocalization

Imagine that your friends are playing an AR game at some spot, and you want to join them. How does your mobile device handle this scenario?

Your friends already have the spatial information of the environment (they or the game developers have already scanned it), so you don’t need to scan the environment again; you can simply join them. This process is called “relocalization” in the SLAM field.

In short, relocalization means that someone (or some device) works out where it is by referring to an existing map (i.e. known spatial information of the environment).

Environment changes (lighting changes, moving objects, etc.)

If the spatial information of the environment is static, relocalization will work well. But what if the spatial information of the environment is dynamic, e.g. a scene with too many uncertain objects or environmental factors?

Map merging/Map update

Now suppose we are building a giant AR playground. Can we scan the whole environment without any interruption, or without any help?

Then consider another scenario: we have already scanned an environment, for example a coffee shop. After a few weeks or months, the coffee shop changes part of its interior layout (moving the desks and chairs). Of course we need to scan again, but can we scan only the updated part and merge it into the original map?

Both scenarios require a flexible relocalization method with a high hit rate.
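
To merge a partial rescan into the original map, the new scan first has to be expressed in the original map's coordinate frame. The Python sketch below shows one simplified way to do that: a least-squares rigid alignment in 2D from landmarks that were matched between the two maps. It assumes the matches are correct, both maps share the same scale, and the problem is planar; a real system would work in 3D, reject outliers, and may also need to estimate scale. The function names are hypothetical.

```python
import math


def align_2d(src, dst):
    """Least-squares rigid transform (rotation + translation) mapping src onto dst.

    src, dst: equal-length lists of matched (x, y) landmark positions,
              src from the new scan and dst from the original map.
    Returns (theta, (tx, ty)) such that dst ~= R(theta) * src + t.
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched landmark pairs")
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy  # centred source point
        bx, by = qx - dx, qy - dy  # centred destination point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated source centroid onto the destination centroid
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, (tx, ty)


def transform(points, theta, t):
    """Apply the rigid transform to every point of the new scan."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]


# Matched landmarks: the new scan is rotated by 90 degrees and shifted by (2, 3)
new_scan = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
original = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
theta, t = align_2d(new_scan, original)
merged = transform(new_scan, theta, t)
```

Once the transform is known, every landmark from the rescan can be moved into the original frame and used to replace or extend the outdated part of the map.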

Map score/scan guidelines

When we are scanning an environment, it would be better to have a hint or some guidance that tells us which way or direction to go in order to get a better result.

There are two major challenges in this topic:

What is the definition of a “good map”?

In order to get a good map, which direction should we go?

Actually, this topic is similar to the Next Best View (NBV) problem.
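
As a toy illustration of both questions, the Python sketch below defines a map score as the fraction of grid cells already observed, and picks the next view greedily as the candidate that would reveal the most unobserved cells. Both definitions are assumptions made for this example, not the scoring used in AR Cloud; practical NBV methods also weigh factors such as travel cost and how well the new view can be tracked. All names are hypothetical.

```python
def coverage_score(observed, all_cells):
    """Map score in [0, 1]: fraction of the environment's cells already observed."""
    return len(observed & all_cells) / len(all_cells)


def next_best_view(observed, candidates):
    """Pick the candidate view that would add the most unobserved cells.

    observed:   set of cells already in the map
    candidates: dict mapping a view name to the set of cells visible from it
    """
    return max(candidates, key=lambda view: len(candidates[view] - observed))


# A 4x4 environment in which only one corner has been scanned so far
all_cells = {(x, y) for x in range(4) for y in range(4)}
observed = {(0, 0), (0, 1), (1, 0), (1, 1)}
candidates = {
    "left": {(0, 0), (0, 1), (0, 2)},   # mostly already scanned
    "right": {(2, 2), (2, 3), (3, 3)},  # entirely new area
}
score = coverage_score(observed, all_cells)
best = next_best_view(observed, candidates)
```

A scanning guide built on this idea would repeat the two steps: report the current score, then point the user toward the view chosen by `next_best_view`.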

The future of SLAM in the AR Cloud project

As time goes on, more and more users and developers will use AR Cloud, and we will probably find more issues to solve and more features to implement.

For now, we have applied some traditional computer vision and optimization algorithms to these issues, but there is still a lot of room to improve the performance.

We are trying to introduce some modern methodologies (e.g. deep learning solutions) to address these issues. Do you have any better ideas? Join us, help us, and let us push the AR Cloud project to another level together!

About the Author

The author is currently a computer vision engineer at Pretia Technologies Inc. He has been working in engineering and algorithms for nearly 15 years. With more than 6 years of experience in SLAM (both VSLAM and Lidar SLAM), he has applied SLAM technology in areas such as robotics, drones, AR, and VR. He is devoted to improving SLAM and related technologies and bringing them into our lives.
