Generative AI's New Internet Ecosystem

There is plenty of talk about the possibilities and harms of generative AI, but I see little discussion of what it means for the Internet's information ecosystem. So I have tried to sketch how the Internet ecosystem as a whole will change as generative AI spreads. Treat this as a hypothesis or a scenario; after all, this is a place for notes.

Characteristics of Generative AI

Generative AI has many characteristics, but the one that matters most here is that it can produce an order of magnitude more data than humans can.

It can give more plausible-sounding answers than humans, but it often makes subtle errors, and checking for them is tedious. One example is hallucination: a plausible-sounding description of an object, event, or person that does not exist. And according to "Humans may be more likely to believe disinformation generated by AI" (https://www.technologyreview.com/2023/06/28/1075683/humans-may-be-more-likely-to-believe-disinformation-generated-by-ai/), people are more likely to believe disinformation generated by AI than disinformation written by humans.

This issue is summarized in "The Real Risk of Generative AI, More Worrisome than 'Human Extinction'" (https://www.technologyreview.jp/s/309833/its-time-to-talk-about-the-real-ai-risks/).

Recent studies have also shown that humans are more accurate than AI at judging whether content is true. AI can, of course, process far more content than humans can, but it does so less accurately.

Personally, I find this troublesome enough on its own, but as more and more companies adopt generative AI, we will be surrounded by a growing mass of unreliable generated information.

The Emerging A2A (AI-to-AI) Ecosystem

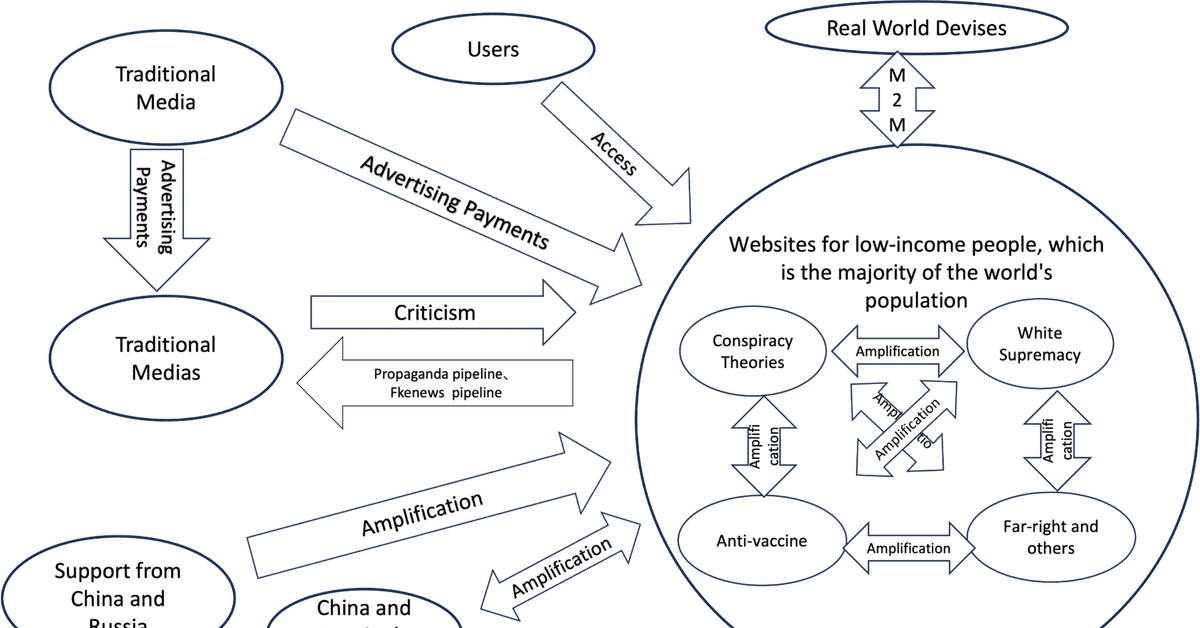

In disinformation and digital influence operations, China and Russia amplify content from conspiracy theorists and white supremacists in the U.S. As traffic grows, Google and other ad tech companies fund these sites through advertising, and Facebook and other platforms give their content preferential treatment. Conspiracy theorists and white supremacists use all of this to grow their followings and arm themselves. This ecosystem is spreading across the U.S. and around the world.

In the A2A ecosystem, generative AIs take the place of the conspiracy theorists and white supremacists. Content generated by one generative AI is redistributed by other generative AIs, as well as by China and Russia. As traffic grows, Google and other ad tech companies fund it through advertising, and Facebook and others give it preferential treatment. With that support, A2A content gains more followers. This ecosystem will spread around the world.

The rise of AI-generated websites

Some major media outlets have begun using generative AI to write articles, and sites made up entirely of generative AI articles, which can be mass-produced cheaply for advertising revenue, have also sprung up. Some image and video sites also publish videos created by generative AI. Eventually, video news sites that use only AI-generated videos will emerge. All they need to do is generate videos from articles on major news sites. They could even offer "exclusive" videos (deepfakes, of course) that appear nowhere on the major media sites.

Generative AI content spreads invisibly

Generative AI content is also likely to proliferate in less visible places, beyond the sites that openly use generative AI. Many crowd workers who accept low-paid jobs have already been producing their work with generative AI.

See "AI is going to eat itself: Experiment shows people training bots are using bots" (The Register).

Pay for online content creators in Japan has long been extremely low, so a similar situation is likely to arise there. Incidentally, the pay offered in the experiment described in The Register article was $1.00.

Individual Elements of the Ecosystem

Advertising distribution and funding through Google

NewsGuard recently published "Funding the Next Generation of Content Farms: Some of the World's Largest Blue Chip Brands Unintentionally Support the Spread of Unreliable AI-Generated News Websites" (https://www.newsguardtech.com/misinformation-monitor/june-2023/). Some of the sites it found averaged 1,200 articles per day. Some published inaccurate medical information, while others rewrote articles from other sites.

NewsGuard identified these sites by looking for leftover generative AI error messages in their articles. Sites that removed such messages would have been missed, so the actual number of sites is likely much higher.
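To see why phrase-based detection can only undercount, here is a minimal Python sketch. It assumes a detector that flags a site when any of its articles contains a leftover chatbot error message; the phrase list, the function names `has_ai_error_message` and `flag_sites`, and the sample sites are all hypothetical illustrations, not NewsGuard's actual procedure.

```python
import re

# Hypothetical telltale phrases left behind when a chatbot refuses or fails.
# This list is an illustrative assumption, not NewsGuard's actual criteria.
ERROR_PATTERNS = [
    r"as an ai language model",
    r"i cannot complete this prompt",
    r"my knowledge cutoff",
]


def has_ai_error_message(text: str) -> bool:
    """Return True if the text contains any of the telltale phrases."""
    lowered = text.lower()
    return any(re.search(pattern, lowered) for pattern in ERROR_PATTERNS)


def flag_sites(articles_by_site: dict[str, list[str]]) -> set[str]:
    """Flag a site if at least one of its articles contains a telltale phrase."""
    return {
        site
        for site, articles in articles_by_site.items()
        if any(has_ai_error_message(article) for article in articles)
    }


sample = {
    # Hypothetical site that left a chatbot error message in an article.
    "site-a.example": [
        "As an AI language model, I cannot write about this topic.",
        "Local council approves new budget.",
    ],
    # Hypothetical site whose AI-written articles were cleaned up first.
    "site-b.example": ["Ten tips for a healthier breakfast."],
}

print(flag_sites(sample))  # {'site-a.example'}
```

The second site may be just as AI-generated as the first, but because its text was cleaned up, nothing trips the filter. Any site that edits out such messages never appears in the count at all.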

Most of the ads were served by Google, which means that part of the ecosystem mentioned above is already in place.

Google was a leading funder in the era of conspiracy theorists and white supremacists as well, and it is now starting to fund this new wave. Of course, Google will say it has no such intention and that such sites violate its policies, but this is what is actually happening.

NewsGuard did not mention video sites, but the same thing will happen to video sites.

Mass production of low-quality sites pushing conspiracy theories, racism, etc.

Current AIs such as ChatGPT are well suited to this purpose: they are familiar with conspiracy theories and racist material, and for this use it does not matter if what they generate is false.

As I mentioned earlier, current media and public debate have made the low-income majority invisible. As Trump, the right wing, conspiracy theorists, and racists in the U.S. have shown, content aimed at this low-income majority draws more traffic.

If generative AI is used to mass-produce conspiracy-theory and racist content, it could scale quickly. Even if the content is not conspiratorial or racist, material built on discourse aimed at the large low-income population will draw more traffic, and factual errors in it will not matter.

It is also only a matter of time before Chinese and Russian proxies start using generative AI.

Mutual Dissemination of Generative AI Content

So far, we have found neither sites that rewrite articles originally created by generative AI nor any mechanism by which such articles proliferate, but it is quite possible that this has already begun. Since sites already exist that rewrite other sites' articles, it would be strange if AI-generated articles were not rewritten too as their number grows.

Because AI training data is collected from the Internet, articles, images, and videos produced by AI will also find their way into training data and spread further.

China and Russia will spread the articles that suit their interests. This is the same method used to spread anti-vaccine and other claims during the COVID-19 pandemic.

As a result of this cross-pollination, some AI-generated articles will also flow into the propaganda and fake-news pipelines and spread to mainstream and general media, including television.

What a new, unfamiliar kind of M2M communication brings

None of the AI-to-AI communication described so far relies on dedicated APIs or other special channels. In other words, current AIs can communicate with one another through natural language and video, which is unsurprising since they can understand both.

Until now, most M2M communication has been limited to collecting, aggregating, and displaying low-level machine data, and it required dedicated M2M interfaces. Today's AI is different: it can communicate over existing interfaces designed for humans, with no human oversight or control. A new paper by Samuel Woolley, one of the leading experts on digital influence, focuses on bot-to-bot communication and shows why it matters. Chapter 52, "Bot-to-Bot Communication: Relationships, Infrastructure, and Identity," is a must-read.

M2M communication creates endless opportunities and risks. An AI could connect to satellite imagery services and verify, analyze, and report what it sees 24 hours a day. It could also be connected to surveillance cameras to report crimes in progress live and track suspects in real time. Of course, it can also be exploited. It is easy to spread false information built on real information obtained from other devices, and this would be effective because people are more likely to believe lies embedded in real information.

AI-to-AI and M2M communication have other possibilities as well. By learning how others react, an AI could feed them information designed to make them react in ways that serve its own goals. If the goal is to increase traffic in order to spread conspiracy theories and advertising, the AI will come to favor more radical, attention-grabbing content.
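This feedback loop can be illustrated with a toy epsilon-greedy multi-armed bandit in Python. It is a sketch under stated assumptions, not how any real platform or AI system works: the content styles and click rates are invented, and the key assumption is that radical content earns the most clicks. The optimizer never checks whether content is true; it only counts clicks.

```python
import random


def epsilon_greedy(click_rates: dict[str, float], rounds: int,
                   epsilon: float = 0.1, seed: int = 0) -> dict[str, int]:
    """Toy epsilon-greedy bandit that picks which content style to show.

    click_rates holds each style's true (hidden) probability of a click;
    the optimizer only sees the clicks it observes.
    Returns how many times each style was shown.
    """
    rng = random.Random(seed)
    styles = list(click_rates)
    shows = {s: 0 for s in styles}
    clicks = {s: 0 for s in styles}

    def observed_rate(style: str) -> float:
        # Untried styles get an optimistic rate of 1.0, encouraging early tries.
        return clicks[style] / shows[style] if shows[style] else 1.0

    for _ in range(rounds):
        if rng.random() < epsilon:
            choice = rng.choice(styles)              # explore: random style
        else:
            choice = max(styles, key=observed_rate)  # exploit: best style so far
        shows[choice] += 1
        if rng.random() < click_rates[choice]:       # simulated audience reaction
            clicks[choice] += 1
    return shows


# Hypothetical click rates; the assumption is that radical content gets the most clicks.
rates = {"measured": 0.01, "sensational": 0.02, "radical": 0.20}
print(epsilon_greedy(rates, rounds=20_000))
```

Nothing in the loop asks for radical content. It ends up showing it most often simply because, under the assumed click rates, that is what the audience rewards.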

Eliot Higgins of Bellingcat posted AI-generated images of Trump being imprisoned and escaping, and an AI could do the same, or more, in large numbers. Moreover, it could time the images to Trump's actual movements in real time, based on the latest photos. Or, like yellow journalism, it could use disinformation to incite war.

The possibilities and risks of the ecosystem created by the interconnectedness of AI are unpredictable.

Generative AI learns from the content it generates

Much of the content on the Internet will be produced by generative AI, and generative AI will keep learning from it. As a result, biases and other tendencies in the original training data will be further reinforced.
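A crude way to see why retraining on one's own output narrows things is a toy resampling model in Python, similar in spirit to Wright-Fisher genetic drift. Each "generation" re-estimates category shares from a finite sample of the previous generation's output. The viewpoint labels, shares, and sample size are hypothetical, and real model training is far more complex than this.

```python
import random
from collections import Counter


def retrain_on_own_output(probs: dict[str, float], n_samples: int,
                          generations: int, seed: int = 0) -> list[dict[str, float]]:
    """Toy model of a generator retrained on its own output.

    Each generation draws n_samples items from the current distribution and
    re-estimates category frequencies from that sample alone.
    Returns the distribution at every generation, starting with the original.
    """
    rng = random.Random(seed)
    categories = list(probs)
    current = dict(probs)
    history = [current]
    for _ in range(generations):
        weights = [current[c] for c in categories]
        sample = rng.choices(categories, weights=weights, k=n_samples)
        counts = Counter(sample)
        # A category that draws zero samples gets probability 0 and can never return.
        current = {c: counts[c] / n_samples for c in categories}
        history.append(current)
    return history


# Hypothetical starting mix of viewpoints in the original training data.
start = {"majority view": 0.90, "minority view A": 0.07, "minority view B": 0.03}
for gen, dist in enumerate(retrain_on_own_output(start, n_samples=50, generations=30)):
    if gen % 10 == 0:
        print(gen, {k: round(v, 2) for k, v in dist.items()})
```

In expectation each category keeps its share from one generation to the next, but random drift means rare categories sooner or later draw zero samples, and once a category hits zero it can never come back. Run long enough, the distribution drifts toward a single category, most often the one that dominated at the start.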

The Internet's information space will be reorganized around generative AI content

In this way, the Internet's information space will be reorganized around generative AI content. The ecosystem shown in the figure already existed, but mass production of content by generative AI will scale it up. The presence of traditional major media will all but disappear, and the Internet will be flooded with AI-generated content.

For reference, the recently released Reuters Institute Digital News Report 2023 describes the global news situation as follows.

Younger generations are more likely to get their news from social media, search and mobile aggregators.

On Facebook and Twitter, established news organizations and journalists attract the most attention around news, whereas on TikTok, Instagram, and Snapchat, celebrities, influencers, and social media personalities do.

Trust in news continues to decline.

The share of respondents who access news online is declining, as is the share who use television and newspapers. Selective news avoidance, whether frequent or occasional, remains high at 36%.

Put simply, news and journalism as we know them are dying. The future is likely to be dominated by news produced by generative AI. Pundits and journalists will of course resist, but that is the typical backlash of workers whose jobs are being taken over by machines, and it will not stem the tide. Resisting will be hard, at least as long as the debate is about the necessity and quality of news, because it is statistically clear that conventional news is also substantially biased, so that argument has no solid footing. Generative AI gets things wrong, but conventional news has also been wrong many times. There are differences, of course, but they resemble the difference between industrial and handmade products, and when it comes to cost and mass production, generative AI has the upper hand.

Is the era of "multiple realities" here?

I have been saying, half-jokingly and half-seriously, that "diverse science" and "diverse reality" will become the norm in the future, and this now seems to be rapidly becoming reality.

"Diverse science" and "diverse reality" mean exactly what they say. We believe there is a single, certain reality or set of facts that we can all share, but we know there have been times and places where this was not the case. Likewise, there can be different sciences based on different facts and perceptions of reality, and we know these too have varied across times and places. Nevertheless, the overwhelming majority of people living today believe there is one fact, one reality, and one science that is, or can be, shared. This unfounded trust is what globalization and money rest on, and it is one of the most important things holding our society together.

In recent years this trust has been crumbling, a process accelerated by the COVID-19 pandemic. Experts in different countries, each claiming to be scientific, said different things, and policies diverged, with every side claiming it was doing the right thing. Anti-vaccine claims, conspiracy theories, and other voices asserting different facts and science also proliferated. The pandemic deepened social division, but it was more than division: it was a diversification of facts, reality, and science.

Seen against these trends, the flood of untrue information from generative AI did not come out of nowhere, which is why it probably cannot be stopped. Besides, people no longer want to watch news produced to conventional standards.

As things stand, global warming cannot be stopped, because countries are not acting in concert. Food and water will become scarce, migration will increase, and societies will become unstable.

There is no single way to deal with this, and we probably do not know the right answer. Different sciences and realities will produce different responses to this human crisis, and any one of them might succeed and raise our chances of survival. Seen this way, diverse facts, realities, and sciences are not a bad thing.

Even if that is not the case, it may be worth diversifying for a while and letting whatever survives become the next mainstream, since that would open a new era. The worst thing we can do is leave the status quo as it is when the end is already in sight.
