Trying to build a custom experiment template for SakanaAI's AI Scientist (work in progress)

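With the AI Scientist that SakanaAI announced yesterday, you can tell the AI scientist, "Go and do this kind of research." I've got the samples running, so now I want to write my own experiment template and have the AI scientist do research whenever it has a spare moment.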

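First, the templates live in a directory called "templates".

I wanted to start with the simplest thing possible, MNIST, so I'll build a template for MNIST.

Copy the nanoGPT template and modify each of its files.

The important files are: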

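experiment.py: the experiment itself. Takes --out_dir and writes the training results to --out_dir.

plot.py: outputs graphs and other views of the experiment results.

seed_ideas.json: specifies the ideas to start from.

prompt.json: specifies the prompt for the experiment.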
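Before handing a new template over, it can help to confirm that the four files listed above are actually there. A minimal sketch: the helper name `missing_template_files` and the demo directory are my own invention for illustration, and the real tool may well expect more than these four files.

```python
from pathlib import Path
import tempfile

# File names taken from the list above; the helper itself is hypothetical.
REQUIRED_FILES = ("experiment.py", "plot.py", "seed_ideas.json", "prompt.json")


def missing_template_files(template_dir):
    """Return the required file names that are absent from template_dir."""
    root = Path(template_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


# Demo on a throwaway directory standing in for templates/<your_template>.
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "mnist"
    demo.mkdir()
    (demo / "experiment.py").write_text("# placeholder\n")
    (demo / "plot.py").write_text("# placeholder\n")
    missing = missing_template_files(demo)

print(missing)  # ['seed_ideas.json', 'prompt.json']
```

An empty list means all four files are present.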

First, make experiment.py train on MNIST.

To keep the changes to plot.py to a minimum, it outputs little more than val/loss.
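Stripped of the actual training, the contract is roughly "take --out_dir, write the results there". A minimal sketch of just that skeleton: `run_experiment` and its placeholder loss values are hypothetical, and the flat final_info.json written here is simpler than what a real template writes.

```python
import argparse
import json
import os
import tempfile


def run_experiment(out_dir):
    """Hypothetical stand-in for real training: writes placeholder logs to out_dir."""
    os.makedirs(out_dir, exist_ok=True)
    # One record per evaluation step, using the same "val/loss" key as the post.
    val_log_info = [{"iter": i, "val/loss": 1.0 / (i + 1)} for i in range(3)]
    final_info = {"best_val_loss": min(r["val/loss"] for r in val_log_info)}
    with open(os.path.join(out_dir, "final_info.json"), "w") as f:
        json.dump(final_info, f)
    return final_info, val_log_info


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Minimal experiment skeleton")
    parser.add_argument("--out_dir", type=str, default="run_0", help="Output directory")
    return parser.parse_args(argv)


# Demo: pass the arguments explicitly instead of reading sys.argv.
with tempfile.TemporaryDirectory() as tmp:
    args = parse_args(["--out_dir", os.path.join(tmp, "run_0")])
    final_info, val_log_info = run_experiment(args.out_dir)
    written = os.listdir(args.out_dir)

print(written)  # ['final_info.json']
```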

This experiment.py gets modified by Aider as needed, so it is probably better for it to contain comments. MNIST turned out to be too easy, so I switched to FashionMNIST.

import os
import time
import math
import pickle
import inspect
import json
from contextlib import nullcontext
from dataclasses import dataclass

import numpy as np
import torch
import torch.nn as nn
from torch.nn import functional as F
import argparse
import torch.optim as optim
from torchvision import datasets, transforms
from torch.optim.lr_scheduler import StepLR

# --- BEGIN model.py ---
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 32, 3, 1)
        self.conv2 = nn.Conv2d(32, 64, 3, 1)
        self.dropout1 = nn.Dropout(0.25)
        self.dropout2 = nn.Dropout(0.5)
        # 28x28 input -> 26x26 -> 24x24 -> 12x12 after pooling; 64 * 12 * 12 = 9216
        self.fc1 = nn.Linear(9216, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)
        x = self.dropout1(x)
        x = torch.flatten(x, 1)
        x = self.fc1(x)
        x = F.relu(x)
        x = self.dropout2(x)
        x = self.fc2(x)
        output = F.log_softmax(x, dim=1)
        return output
# --- END model.py ---

def train(dataset="FashionMNIST", out_dir="run_0", seed_offset=0, args=None):
    gradient_accumulation_steps = 1
    og_t0 = time.time()
    os.makedirs(out_dir, exist_ok=True)

    # Pick the device from the parsed arguments.
    use_cuda = not args.no_cuda and torch.cuda.is_available()
    device = torch.device("cuda" if use_cuda else "cpu")

    train_kwargs = {'batch_size': args.batch_size}
    test_kwargs = {'batch_size': args.test_batch_size}
    if use_cuda:
        cuda_kwargs = {'num_workers': 1,
                       'pin_memory': True,
                       'shuffle': True}
        train_kwargs.update(cuda_kwargs)
        test_kwargs.update(cuda_kwargs)

    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])
    dataset1 = datasets.FashionMNIST('../data', train=True, download=True,
                                     transform=transform)
    dataset2 = datasets.FashionMNIST('../data', train=False,
                                     transform=transform)
    train_loader = torch.utils.data.DataLoader(dataset1, **train_kwargs)
    test_loader = torch.utils.data.DataLoader(dataset2, **test_kwargs)

    model = Net().to(device)
    optimizer = optim.Adadelta(model.parameters(), lr=args.lr)
    scheduler = StepLR(optimizer, step_size=1, gamma=args.gamma)

    # ---- Training ----
    model.train()
    train_log_info = []
    val_log_info = []
    iter_num = 0
    # Despite the name, this tracks the lowest logged training loss.
    best_val_loss = 1.0
    for epoch in range(1, args.epochs + 1):
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device), target.to(device)
            optimizer.zero_grad()
            output = model(data)
            loss = F.nll_loss(output, target)
            loss.backward()
            optimizer.step()
            if batch_idx % args.log_interval == 0:
                lossf = loss.item() * gradient_accumulation_steps
                if lossf < best_val_loss:
                    best_val_loss = lossf
                train_log_info.append(
                    {
                        "iter": iter_num,
                        "loss": lossf,
                    }
                )
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, batch_idx * len(data), len(train_loader.dataset),
                    100. * batch_idx / len(train_loader), loss.item()))
                if args.dry_run:
                    break
            iter_num += 1
        # Decay the learning rate once per epoch, after the optimizer updates.
        scheduler.step()

    print("training done")
    print(f"Best validation loss: {best_val_loss}")
    print(f"Total train time: {(time.time() - og_t0) / 60:.2f} mins")

    final_info = {
        "final_train_loss": lossf,
        "best_val_loss": best_val_loss,
        "total_train_time": time.time() - og_t0,
    }

    # ---- Evaluation: one val/loss record per test batch ----
    model.eval()
    results = []
    iter_num = 0
    final_acc = 0.0
    with torch.no_grad():
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            start_time = time.time()
            output = model(data)
            end_time = time.time()
            inference_time = end_time - start_time

            # Mean loss over this batch only
            test_loss = F.nll_loss(output, target, reduction='sum').item() / len(data)
            pred = output.argmax(dim=1, keepdim=True)  # get the index of the max log-probability
            correct = pred.eq(target.view_as(pred)).sum().item()
            batch_acc = 100. * correct / len(data)
            final_acc += batch_acc

            print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
                test_loss, correct, len(data), batch_acc))

            val_log_info.append(
                {
                    "iter": iter_num,
                    "val/loss": test_loss,
                }
            )
            results.append(
                {
                    "Val Loss": test_loss,
                    "Val Accuracy": batch_acc,
                    "inference_time": inference_time,
                }
            )
            iter_num += 1

    # Average of the per-batch accuracies
    final_acc /= len(test_loader)
    print(f"final_acc:{final_acc}%")
    results.append(
        {
            "Total Val Accuracy": final_acc,
        }
    )

    with open(
        os.path.join(out_dir, f"final_info_{dataset}_{seed_offset}.json"), "w"
    ) as f:
        json.dump(final_info, f)
    return final_info, train_log_info, val_log_info

if __name__ == "__main__":
    all_results = {}
    final_infos = {}

    # Training settings
    parser = argparse.ArgumentParser(description='PyTorch MNIST Example')
    parser.add_argument("--out_dir", type=str, default="run_0", help="Output directory")
    parser.add_argument('--batch-size', type=int, default=64, metavar='N',
                        help='input batch size for training (default: 64)')
    parser.add_argument('--test-batch-size', type=int, default=1000, metavar='N',
                        help='input batch size for testing (default: 1000)')
    parser.add_argument('--epochs', type=int, default=14, metavar='N',
                        help='number of epochs to train (default: 14)')
    parser.add_argument('--lr', type=float, default=1.0, metavar='LR',
                        help='learning rate (default: 1.0)')
    parser.add_argument('--gamma', type=float, default=0.7, metavar='M',
                        help='Learning rate step gamma (default: 0.7)')
    parser.add_argument('--no-cuda', action='store_true', default=False,
                        help='disables CUDA training')
    parser.add_argument('--no-mps', action='store_true', default=False,
                        help='disables macOS GPU training')
    parser.add_argument('--dry-run', action='store_true', default=False,
                        help='quickly check a single pass')
    parser.add_argument('--seed', type=int, default=1, metavar='S',
                        help='random seed (default: 1)')
    parser.add_argument('--log-interval', type=int, default=10, metavar='N',
                        help='how many batches to wait before logging training status')
    parser.add_argument('--save-model', action='store_true', default=False,
                        help='For Saving the current Model')
    args = parser.parse_args()

    use_cuda = not args.no_cuda and torch.cuda.is_available()
    use_mps = not args.no_mps and torch.backends.mps.is_available()
    out_dir = args.out_dir
    torch.manual_seed(args.seed)

    # Run a single seed and collect its logs under dataset-prefixed keys.
    final_info_list = []
    dataset = "FashionMNIST"
    seed_offset = 0
    final_info, train_info, val_info = train(dataset, out_dir, seed_offset, args)
    all_results[f"{dataset}_{seed_offset}_final_info"] = final_info
    all_results[f"{dataset}_{seed_offset}_train_info"] = train_info
    all_results[f"{dataset}_{seed_offset}_val_info"] = val_info
    final_info_list.append(final_info)

    # Aggregate the per-seed summaries into means and standard errors.
    final_info_dict = {
        k: [d[k] for d in final_info_list] for k in final_info_list[0].keys()
    }
    means = {f"{k}_mean": np.mean(v) for k, v in final_info_dict.items()}
    stderrs = {
        f"{k}_stderr": np.std(v) / np.sqrt(len(v)) for k, v in final_info_dict.items()
    }
    final_infos[dataset] = {
        "means": means,
        "stderrs": stderrs,
        "final_info_dict": final_info_dict,
    }

    with open(os.path.join(out_dir, "final_info.json"), "w") as f:
        json.dump(final_infos, f)
    with open(os.path.join(out_dir, "all_results.npy"), "wb") as f:
        np.save(f, all_results)

Next, write plot.py.

To keep this one simple too, I decided to plot only val/loss.
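The core of such a plot is a per-step mean of val/loss across runs plus a standard error band. A rough standard-library sketch of that aggregation, with made-up loss values for three hypothetical seeds (the post itself only runs one):

```python
import math
import statistics

# Made-up val/loss curves for three hypothetical seeds (one inner list per seed).
val_losses = [
    [0.60, 0.45, 0.40],
    [0.62, 0.47, 0.38],
    [0.58, 0.43, 0.42],
]


def mean_and_stderr(curves):
    """Per-step mean and standard error across curves of equal length."""
    n = len(curves)
    means, stderrs = [], []
    for step_values in zip(*curves):
        means.append(statistics.fmean(step_values))
        # Population standard deviation, matching NumPy's default np.std (ddof=0).
        stderrs.append(statistics.pstdev(step_values) / math.sqrt(n))
    return means, stderrs


means, stderrs = mean_and_stderr(val_losses)
print([round(m, 3) for m in means])
print([round(s, 4) for s in stderrs])
```

With a single run the standard error comes out as zero, so the shaded band collapses onto the line.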

import matplotlib.pyplot as plt
import matplotlib.colors as mcolors
import numpy as np
import json
import os
import os.path as osp

# LOAD FINAL RESULTS:
datasets = ["FashionMNIST"]
folders = os.listdir("./")
final_results = {}
results_info = {}
for folder in folders:
    if folder.startswith("run") and osp.isdir(folder):
        with open(osp.join(folder, "final_info.json"), "r") as f:
            final_results[folder] = json.load(f)
        results_dict = np.load(osp.join(folder, "all_results.npy"), allow_pickle=True).item()
        run_info = {}
        for dataset in datasets:
            run_info[dataset] = {}
            val_losses = []
            train_losses = []
            for k in results_dict.keys():
                if dataset in k and "val_info" in k:
                    run_info[dataset]["iters"] = [info["iter"] for info in results_dict[k]]
                    val_losses.append([info["val/loss"] for info in results_dict[k]])
                    #train_losses.append([info["train/loss"] for info in results_dict[k]])
            # Aggregate once all matching keys have been collected.
            mean_val_losses = np.mean(val_losses, axis=0)
            #mean_train_losses = np.mean(train_losses, axis=0)
            if len(val_losses) > 0:
                sterr_val_losses = np.std(val_losses, axis=0) / np.sqrt(len(val_losses))
                #stderr_train_losses = np.std(train_losses, axis=0) / np.sqrt(len(train_losses))
            else:
                sterr_val_losses = np.zeros_like(mean_val_losses)
                #stderr_train_losses = np.zeros_like(mean_train_losses)
            run_info[dataset]["val_loss"] = mean_val_losses
            #run_info[dataset]["train_loss"] = mean_train_losses
            run_info[dataset]["val_loss_sterr"] = sterr_val_losses
            #run_info[dataset]["train_loss_sterr"] = stderr_train_losses
        results_info[folder] = run_info

# CREATE LEGEND -- ADD RUNS HERE THAT WILL BE PLOTTED
labels = {
    "run_0": "Baselines",
}

# Create a programmatic color palette
def generate_color_palette(n):
    cmap = plt.get_cmap('tab20')
    return [mcolors.rgb2hex(cmap(i)) for i in np.linspace(0, 1, n)]

# Get the list of runs and generate the color palette
runs = list(labels.keys())
colors = generate_color_palette(len(runs))

# Plot 2: Line plot of validation loss for each dataset across the runs with labels
for dataset in datasets:
    plt.figure(figsize=(10, 6))
    for i, run in enumerate(runs):
        iters = results_info[run][dataset]["iters"]
        mean = results_info[run][dataset]["val_loss"]
        print(iters, mean)
        sterr = results_info[run][dataset]["val_loss_sterr"]
        plt.plot(iters, mean, label=labels[run], color=colors[i])
        plt.fill_between(iters, mean - sterr, mean + sterr, color=colors[i], alpha=0.2)
    plt.title(f"Validation Loss Across Runs for {dataset} Dataset")
    plt.xlabel("Iteration")
    plt.ylabel("Validation Loss")
    plt.legend()
    plt.grid(True, which="both", ls="-", alpha=0.2)
    plt.tight_layout()
    plt.savefig(f"val_loss_{dataset}.png")
    plt.close()

Next, write prompt.json.

I only made small changes to the original prompt.json.

{

"system": "You are an ambitious AI researcher who is looking to publish a paper that will contribute significantly to the field.",

"task_description": "You are given the following file to work with, which trains small images."

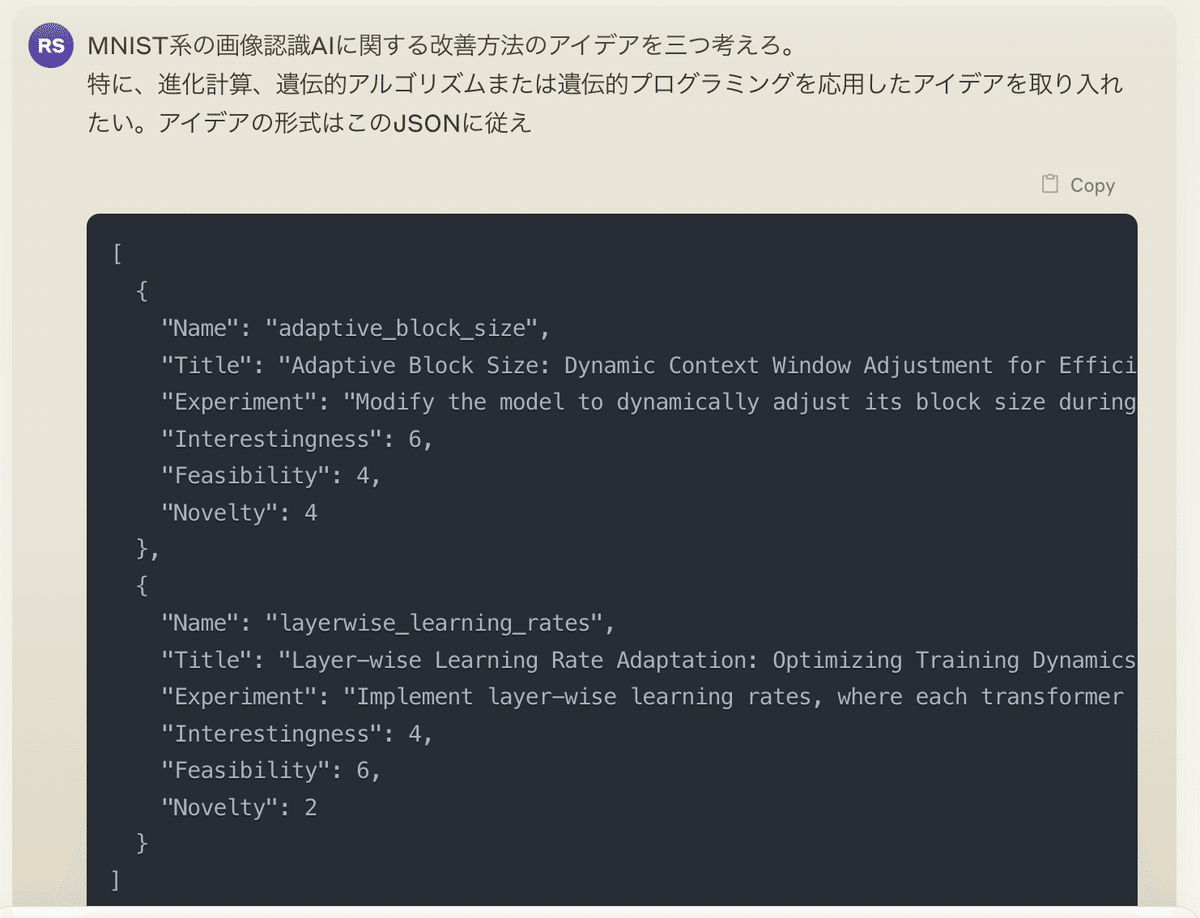

}最後に、これが一番難しいんだけど、seed_ideas.jsonを書く。

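seed_ideas.json has to specify the ideas that serve as a starting point for the research, along with their potential, so if all you want is "just something", it works well to have claude3 generate it.

These are the hypotheses that came out of a lot of back-and-forth with claude3.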

[

{

"Name": "gender_based_evolutionary_architecture",

"Title": "Gender-based Evolutionary Architecture Search: Efficiency vs. Performance Prediction in MNIST",

"Experiment": "Implement a gender-based genetic algorithm for CNN architecture optimization. 'Male' architectures compete for early learning efficiency, while 'female' architectures evolve to predict final performance accurately. Each 'male' individual encodes CNN hyperparameters (layers, filters, etc.) and is evaluated on learning speed in initial epochs. 'Female' individuals develop evaluation functions to predict which 'male' architectures will achieve the highest accuracy with the fewest parameters after full training. In each generation, top 'male' architectures (based on early efficiency) are paired with top 'female' predictors (based on prediction accuracy of final performance). Offspring inherit traits from both 'parents', creating new architecture candidates and prediction functions. This approach aims to balance rapid initial learning with long-term performance, potentially discovering architectures that are both quick to train and highly accurate on MNIST.",

"Interestingness": 9,

"Feasibility": 6,

"Novelty": 8

},

{

"Name": "evolutionary_cnn_architecture",

"Title": "Evolutionary CNN Architecture Search: Optimal Network Structure for MNIST",

"Experiment": "Use genetic algorithms to optimize the CNN architecture. Each individual encodes hyperparameters such as number of layers, filter sizes, and channel counts. In each generation, select architectures with the highest accuracy, apply crossover and mutation to generate a new generation. Finally, obtain a CNN architecture optimized for the MNIST dataset.",

"Interestingness": 8,

"Feasibility": 7,

"Novelty": 6

},

{

"Name": "neuroevolution_mnist",

"Title": "NeuroEvolution: Evolutionary Weight Optimization for MNIST",

"Experiment": "Use the NEAT (NeuroEvolution of Augmenting Topologies) algorithm to evolve neural networks suitable for MNIST classification. Start with simple initial networks and evolutionarily add nodes and connections, adjusting weights. In each generation, evaluate networks based on classification accuracy on the MNIST dataset, select the highest-performing individuals to generate the next generation. Finally, obtain an optimal network structure balancing complexity and accuracy.",

"Interestingness": 9,

"Feasibility": 5,

"Novelty": 8

},

{

"Name": "evolving_activation_functions",

"Title": "Evolving Custom Activation Functions for MNIST Classification",

"Experiment": "Develop a genetic programming approach to evolve custom activation functions for each layer of a neural network. Start with a base set of mathematical operations (e.g., +, -, *, /, exp, log) and constants. Use these to create tree-based representations of activation functions. Apply genetic operators to evolve these functions over generations, evaluating their performance on MNIST classification. The fitness function considers both accuracy and computational efficiency. This approach aims to discover novel activation functions that may outperform standard ones like ReLU or sigmoid for MNIST-style tasks.",

"Interestingness": 9,

"Feasibility": 5,

"Novelty": 9

},

{

"Name": "modular_network_evolution",

"Title": "Evolving Modular Neural Network Structures for MNIST",

"Experiment": "Create a genetic algorithm that evolves modular neural network structures. Each individual in the population represents a network composed of interconnected modules. Modules can be convolutional layers, pooling layers, fully connected layers, or even more complex structures like residual blocks. The genetic algorithm evolves both the types of modules used and their connections. Evaluate networks on MNIST classification, considering both accuracy and inference speed. This approach aims to discover novel, potentially non-linear network structures that may better capture the hierarchical nature of handwritten digit recognition.",

"Interestingness": 8,

"Feasibility": 6,

"Novelty": 8

},

{

"Name": "adaptive_network_plasticity",

"Title": "Evolving Adaptive Network Plasticity for Continuous MNIST Learning",

"Experiment": "Develop a genetic algorithm to evolve neural networks with adaptive plasticity rules. Each network starts with a basic structure for MNIST classification, but the plasticity rules determining how weights change during training are subject to evolution. These rules could include Hebbian-like learning, homeostatic plasticity, or novel forms of weight updates. Evaluate networks on their ability to learn MNIST quickly and their capacity to adapt to slight variations in the dataset without forgetting. This approach aims to create networks that can continually adapt to new data without explicit retraining, potentially leading to more robust MNIST classifiers.",

"Interestingness": 9,

"Feasibility": 4,

"Novelty": 9

}

]
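Since this file tends to be written by hand or by an LLM, a quick sanity check before launching a run can save a wasted start. A minimal sketch, assuming only what is visible in the entries above (six keys, with integer scores); `check_seed_ideas` is a hypothetical helper, not part of the tool, and the sample entries are shortened stand-ins.

```python
import json

# Keys as they appear in the seed ideas above; scores are assumed to be integers.
REQUIRED_KEYS = {"Name", "Title", "Experiment", "Interestingness", "Feasibility", "Novelty"}
SCORE_KEYS = ("Interestingness", "Feasibility", "Novelty")


def check_seed_ideas(ideas):
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    seen_names = set()
    for index, idea in enumerate(ideas):
        missing = REQUIRED_KEYS - idea.keys()
        if missing:
            problems.append(f"idea {index}: missing keys {sorted(missing)}")
        for key in SCORE_KEYS:
            if key in idea and not isinstance(idea[key], int):
                problems.append(f"idea {index}: {key} should be an integer")
        name = idea.get("Name")
        if name in seen_names:
            problems.append(f"idea {index}: duplicate Name {name!r}")
        seen_names.add(name)
    return problems


# Shortened stand-in entries: the first is well-formed, the second is deliberately broken.
sample = json.loads("""
[
  {"Name": "evolutionary_cnn_architecture", "Title": "t", "Experiment": "e",
   "Interestingness": 8, "Feasibility": 7, "Novelty": 6},
  {"Name": "broken_idea", "Title": "t", "Interestingness": "high"}
]
""")
problems = check_seed_ideas(sample)
for problem in problems:
    print(problem)
```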

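It incorporates an idea I've been interested in lately: a "male AI" and a "female AI (evaluation function)". Normally an idea like this is little more than talking in one's sleep, so even if I asked a student to research it, I would spend more time persuading them than anything else. The AI scientist doesn't raise such tiresome objections and silently starts researching.

By the way, the ideas.json that grew out of this seed_ideas.json ended up looking like this.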

[

{

"Name": "gender_based_evolutionary_architecture",

"Title": "Gender-based Evolutionary Architecture Search: Efficiency vs. Performance Prediction in MNIST",

"Experiment": "Implement a gender-based genetic algorithm for CNN architecture optimization. 'Male' architectures compete for early learning efficiency, while 'female' architectures evolve to predict final performance accurately. Each 'male' individual encodes CNN hyperparameters (layers, filters, etc.) and is evaluated on learning speed in initial epochs. 'Female' individuals develop evaluation functions to predict which 'male' architectures will achieve the highest accuracy with the fewest parameters after full training. In each generation, top 'male' architectures (based on early efficiency) are paired with top 'female' predictors (based on prediction accuracy of final performance). Offspring inherit traits from both 'parents', creating new architecture candidates and prediction functions. This approach aims to balance rapid initial learning with long-term performance, potentially discovering architectures that are both quick to train and highly accurate on MNIST.",

"Interestingness": 9,

"Feasibility": 6,

"Novelty": 8,

"novel": true

},

{

"Name": "evolutionary_cnn_architecture",

"Title": "Evolutionary CNN Architecture Search: Optimal Network Structure for MNIST",

"Experiment": "Use genetic algorithms to optimize the CNN architecture. Each individual encodes hyperparameters such as number of layers, filter sizes, and channel counts. In each generation, select architectures with the highest accuracy, apply crossover and mutation to generate a new generation. Finally, obtain a CNN architecture optimized for the MNIST dataset.",

"Interestingness": 8,

"Feasibility": 7,

"Novelty": 6,

"novel": false

},

{

"Name": "neuroevolution_mnist",

"Title": "NeuroEvolution: Evolutionary Weight Optimization for MNIST",

"Experiment": "Use the NEAT (NeuroEvolution of Augmenting Topologies) algorithm to evolve neural networks suitable for MNIST classification. Start with simple initial networks and evolutionarily add nodes and connections, adjusting weights. In each generation, evaluate networks based on classification accuracy on the MNIST dataset, select the highest-performing individuals to generate the next generation. Finally, obtain an optimal network structure balancing complexity and accuracy.",

"Interestingness": 9,

"Feasibility": 5,

"Novelty": 8,

"novel": false

},

{

"Name": "evolving_activation_functions",

"Title": "Evolving Custom Activation Functions for MNIST Classification",

"Experiment": "Develop a genetic programming approach to evolve custom activation functions for each layer of a neural network. Start with a base set of mathematical operations (e.g., +, -, *, /, exp, log) and constants. Use these to create tree-based representations of activation functions. Apply genetic operators to evolve these functions over generations, evaluating their performance on MNIST classification. The fitness function considers both accuracy and computational efficiency. This approach aims to discover novel activation functions that may outperform standard ones like ReLU or sigmoid for MNIST-style tasks.",

"Interestingness": 9,

"Feasibility": 5,

"Novelty": 9,

"novel": false

},

{

"Name": "modular_network_evolution",

"Title": "Evolving Modular Neural Network Structures for MNIST",

"Experiment": "Create a genetic algorithm that evolves modular neural network structures. Each individual in the population represents a network composed of interconnected modules. Modules can be convolutional layers, pooling layers, fully connected layers, or even more complex structures like residual blocks. The genetic algorithm evolves both the types of modules used and their connections. Evaluate networks on MNIST classification, considering both accuracy and inference speed. This approach aims to discover novel, potentially non-linear network structures that may better capture the hierarchical nature of handwritten digit recognition.",

"Interestingness": 8,

"Feasibility": 6,

"Novelty": 8,

"novel": true

},

{

"Name": "adaptive_network_plasticity",

"Title": "Evolving Adaptive Network Plasticity for Continuous MNIST Learning",

"Experiment": "Develop a genetic algorithm to evolve neural networks with adaptive plasticity rules. Each network starts with a basic structure for MNIST classification, but the plasticity rules determining how weights change during training are subject to evolution. These rules could include Hebbian-like learning, homeostatic plasticity, or novel forms of weight updates. Evaluate networks on their ability to learn MNIST quickly and their capacity to adapt to slight variations in the dataset without forgetting. This approach aims to create networks that can continually adapt to new data without explicit retraining, potentially leading to more robust MNIST classifiers.",

"Interestingness": 9,

"Feasibility": 4,

"Novelty": 9,

"novel": true

},

{

"Name": "evolutionary_training_optimization",

"Title": "Evolutionary Training Optimization: Dynamic Hyperparameter Scheduling for Efficient MNIST Training",

"Experiment": "Implement an evolutionary algorithm to optimize hyperparameters dynamically during training. Extend the train function to support variable hyperparameters such as learning rate, dropout rates, and batch size. Initialize a population of hyperparameter sets. Use tournament selection to choose the best-performing sets. Apply one-point crossover to generate new sets and introduce mutations by making small random changes to hyperparameters. Evaluate the performance of each hyperparameter set based on training speed and final model accuracy. The top-performing hyperparameter sets are used to generate new sets for the next generation. Continue this process for a predefined number of generations or until convergence. Compare the evolved hyperparameter schedules against static schedules on the MNIST dataset.",

"Interestingness": 8,

"Feasibility": 7,

"Novelty": 8,

"novel": true

},

{

"Name": "evolving_weight_initialization",

"Title": "Evolving Weight Initialization for Efficient and Accurate Neural Network Training",

"Experiment": "Implement a genetic algorithm to evolve optimal weight initialization distributions. Extend the train function to support variable initialization distributions. Initialize a population of initialization parameters (e.g., mean and standard deviation for Gaussian initializations) for each layer. Use tournament selection to choose the best-performing initializations based on early training performance (e.g., first few epochs). Apply crossover and mutation to generate new initialization parameters. Evaluate the performance of each set based on training speed and initial accuracy. The top-performing initializations are used to generate new sets for the next generation. Continue this process for a predefined number of generations or until convergence. Compare the evolved initializations against standard methods like Xavier or He on the MNIST dataset.",

"Interestingness": 9,

"Feasibility": 7,

"Novelty": 8,

"novel": true

},

{

"Name": "evolving_lr_schedules",

"Title": "Evolving Learning Rate Schedules for Efficient and Accurate Neural Network Training",

"Experiment": "Implement a genetic algorithm to evolve optimal learning rate schedules. Extend the train function to support variable learning rate schedules. Represent each schedule using basis functions (e.g., linear decay, exponential decay, cyclic patterns) with parameters to evolve. Initialize a population of learning rate schedules by sampling parameters for these basis functions. Use tournament selection to choose the best-performing schedules based on training speed and final model accuracy. Apply crossover and mutation to generate new schedules. Evaluate the performance of each schedule on the MNIST dataset. Continue this process for a predefined number of generations or until convergence. Compare the evolved schedules against standard schedules like step decay or exponential decay.",

"Interestingness": 9,

"Feasibility": 7,

"Novelty": 8,

"novel": true

},

{

"Name": "lateral_inhibition_cnn",

"Title": "Incorporating Lateral Inhibition in CNNs for Enhanced MNIST Classification",

"Experiment": "Modify the CNN architecture to include lateral inhibition mechanisms within convolutional layers. Define a lateral inhibition function that scales down the activations of neighboring neurons based on a predefined kernel. Integrate this function into the forward pass of the convolutional layers. Train the modified network on the FashionMNIST dataset and compare its performance (accuracy, convergence speed) to the baseline model provided in the code. Experiment with different lateral inhibition kernels to find the optimal configuration and analyze the effect of different lateral inhibition strengths on the network's performance.",

"Interestingness": 9,

"Feasibility": 7,

"Novelty": 9,

"novel": false

},

{

"Name": "self_supervised_pretraining",

"Title": "Self-Supervised Pretraining for Enhanced CNN Performance on FashionMNIST",

"Experiment": "1. Implement a function to create a pretext task dataset with images rotated by random angles (e.g., 0, 90, 180, 270 degrees). 2. Extend the `Net` class to include a pretraining method that predicts the rotation angle. 3. Modify the `train` function to include a pretraining phase using the pretext task dataset. 4. Pretrain the model on the pretext task dataset for a few epochs. 5. Fine-tune the pre-trained model on the original FashionMNIST classification task. 6. Compare the performance of the pre-trained model with the baseline model in terms of accuracy and learning efficiency. 7. Evaluate both models using standard metrics (e.g., validation loss, accuracy) and report the results.",

"Interestingness": 9,

"Feasibility": 7,

"Novelty": 9,

"novel": true

},

{

"Name": "meta_learning_maml",

"Title": "Meta-Learning with MAML: Rapid Adaptation for FashionMNIST Classification",

"Experiment": "1. Implement a simplified MAML-based training loop. 2. Modify the `train` function to include a meta-training phase and a meta-testing phase. 3. In the meta-training phase, sample a fixed number of tasks (e.g., 5 tasks) from the FashionMNIST dataset, each being a small subset of the data. 4. For each task, perform a few gradient descent steps and compute the loss. 5. Aggregate these losses and compute the meta-gradient to update the initialization parameters. 6. In the meta-testing phase, fine-tune the model on the full FashionMNIST training set starting from the meta-learned initialization. 7. Compare the performance of the meta-learned model with the baseline model in terms of accuracy, convergence speed, and robustness to new tasks. 8. Evaluate using standard metrics and report the results.",

"Interestingness": 9,

"Feasibility": 7,

"Novelty": 9,

"novel": true

}

]

So, will a genuinely interesting paper actually come out of this?

For now, stay tuned for a follow-up.
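In the meantime, here is a small sketch for skimming ideas.json: keep the entries flagged "novel" and list the most feasible first. The excerpt below is hand-copied from the ideas.json above with only three fields kept; what exactly the tool means by the "novel" flag is something I'm assuming from its name.

```python
import json

# A shortened, hand-copied excerpt of the ideas.json above (only three fields kept).
ideas = json.loads("""
[
  {"Name": "gender_based_evolutionary_architecture", "Feasibility": 6, "novel": true},
  {"Name": "evolutionary_cnn_architecture", "Feasibility": 7, "novel": false},
  {"Name": "evolving_lr_schedules", "Feasibility": 7, "novel": true},
  {"Name": "lateral_inhibition_cnn", "Feasibility": 7, "novel": false}
]
""")

# Keep ideas flagged novel, most feasible first (ties keep file order).
novel_ideas = sorted(
    (idea for idea in ideas if idea.get("novel")),
    key=lambda idea: idea["Feasibility"],
    reverse=True,
)
names = [idea["Name"] for idea in novel_ideas]
print(names)  # ['evolving_lr_schedules', 'gender_based_evolutionary_architecture']
```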