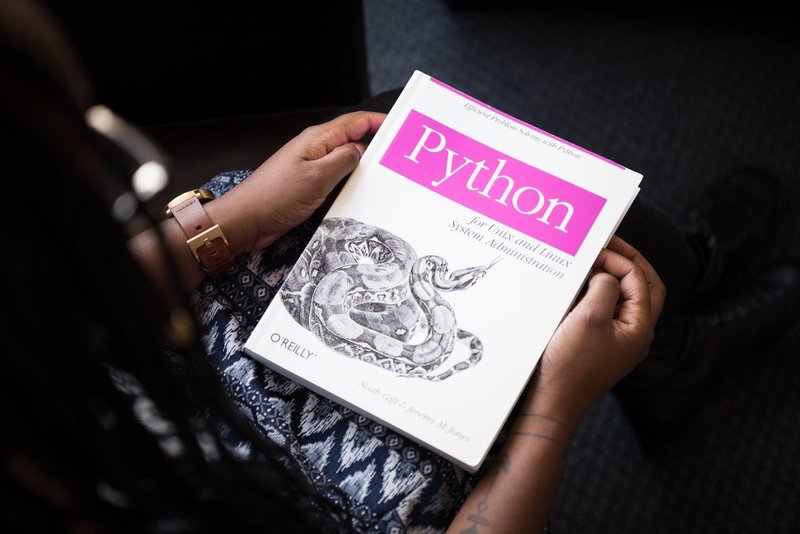

Beginner's guide to Web Scraping with Python lxml

This guide is aimed at beginners who want to learn web scraping with the Python lxml library.

What is lxml?

lxml is a Pythonic binding for two C libraries, libxml2 and libxslt. It is the most feature-rich and easy-to-use library for processing XML and HTML in the Python programming language. lxml is unique in that it combines the speed and XML feature completeness of these libraries with the simplicity of a native Python API.

With the continued growth of both Python and XML, there is a plethora of packages out there that help you read, generate, and modify XML files from Python scripts. The Python lxml package has two big advantages:

Performance: Reading and writing even fairly large XML files takes an almost imperceptible amount of time.

Ease of programming: The Python lxml library has an easy syntax and a more adaptive nature than other packages.

lxml is similar in many ways to two other earlier packages:

ElementTree: This is used to create and parse tree structures of XML nodes.

cElementTree: This is a C-language version which may be even faster than lxml for some applications.

However, lxml is preferred by most Python developers because of its XML features. In particular, it supports XPath, which makes it easy to manage more complex XML structures.

This library can be used to get information from web services and web resources, as these are implemented in XML / HTML format. The objective of this tutorial is to throw light on how lxml helps us to get and process information from different web resources.

How to install lxml?

Run the following command to install it on your system:

pip install lxml
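Once the install has finished, a quick way to confirm that the package is usable is to import it and print its version. This is only a small sanity check, not part of the original walkthrough; it relies on the `LXML_VERSION` and `LIBXML_VERSION` constants exposed by `lxml.etree`.

```python
# Quick sanity check that lxml is importable after installation.
from lxml import etree

# LXML_VERSION is a tuple of version numbers for the Python package.
lxml_version = ".".join(str(part) for part in etree.LXML_VERSION)
# LIBXML_VERSION reports the version of the underlying C library libxml2.
libxml_version = ".".join(str(part) for part in etree.LIBXML_VERSION)

print("lxml version:", lxml_version)
print("libxml2 version:", libxml_version)
```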

pip automatically installs all the dependencies that lxml needs. Alternatively, depending on your operating system, you may be able to install lxml as a system package using binary installers. I prefer the former method, as many systems do not offer a good way to install this package with the latter.

How to use lxml?

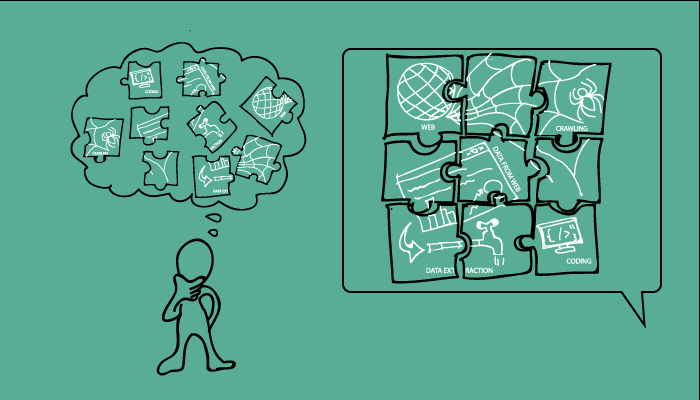

The clearest way to understand what a library does is to use it, so we will walk through a concrete implementation to see what lxml is doing. Keep in mind that practical web scraping with Python takes time and practice.

As discussed earlier, we can use Python lxml to create as well as parse XML / HTML structures.

lxml has many modules, and one of them is etree, which is responsible for creating elements and building structures from these elements. In a first and very basic example, let's create an HTML web page using Python lxml and define some elements and their attributes.

So, let us begin!

I generally prefer to use the IPython command shell to run Python programs because it gives an extensive and clear command prompt for exploring Python features.

After importing the etree module, we can use the Element class API to create multiple elements. In general, elements can also be called nodes.
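A minimal sketch of this step, assuming we start the page with an `html` root element (the tag name is just an illustrative choice):

```python
from lxml import etree

# Element() creates a standalone node; the argument is the tag name.
root = etree.Element("html")

print(root.tag)               # the tag name of the node
print(etree.tostring(root))   # the serialized node, returned as bytes
```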

To create a parent-child relationship using Python lxml, we can use the SubElement method of the etree module. XML / HTML pages are designed on a parent-child paradigm, where an element can be the parent of other elements.
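As a rough illustration, the snippet below attaches `head` and `body` children to an `html` root. The tag names are assumptions chosen to resemble a web page.

```python
from lxml import etree

root = etree.Element("html")

# SubElement() creates a new node and appends it to the given parent.
head = etree.SubElement(root, "head")
body = etree.SubElement(root, "body")

# Iterating over an element yields its direct children in order.
child_tags = [child.tag for child in root]
print(child_tags)

# getparent() walks the relationship in the other direction.
print(body.getparent().tag)
```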

Elements have multiple properties. For example, the text property can be used to set a text value for a node, which can be read as information for the end user. We can also set attributes for elements.

Putting these pieces together, I have created an HTML tree structure using lxml and its etree module, which can be saved as an HTML web page.
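The original screenshots of this session are not available, so the following is a reconstruction under assumptions rather than the author's exact code. The tag names, texts, and attribute values are made up for illustration; it sets text, sets attributes both at creation time and afterwards with `set()`, and serializes the tree.

```python
from lxml import etree

html = etree.Element("html")
head = etree.SubElement(html, "head")
title = etree.SubElement(head, "title")
title.text = "This is the page title"   # text shown to the end user

body = etree.SubElement(html, "body")
# Attributes can be passed as keyword arguments when the node is created...
heading = etree.SubElement(body, "h1", style="font-size:20pt", id="head")
heading.text = "Hello World!"

para = etree.SubElement(body, "p", id="firstPara")
para.text = "This HTML is created using lxml."
# ...or added later with set(), which also works for names like "class".
para.set("class", "intro")

# encoding="unicode" makes tostring() return a str instead of bytes.
page = etree.tostring(html, pretty_print=True, encoding="unicode")
print(page)
```

The resulting string can be written to a file with ordinary Python file I/O and opened in a browser.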

Now, let's take another example in which we shall see how to parse an HTML tree structure. This process is a part of scraping content from the web, so you can follow it if you want to scrape data from the web and process the data further.

The requests module has improved speed and readability compared to the built-in urllib2 module, so using requests is the better choice. Along with requests, we use the html module of lxml to parse the response of the request.

First, let's import the required modules.

The web server's reply comes back as a <Response [200]> object. We store this in a page variable and then use the html module to parse it. Response objects have multiple properties like response headers, content, cookies, etc. We can use Python's dir() function to see all of these object properties. Here, I am using page.content rather than page.text, because html.fromstring implicitly expects bytes as input, whereas page.text provides the content in simple text format (ASCII or UTF-8, depending upon the web server configuration).
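The download-and-parse flow might look roughly like the sketch below. The helper names `download` and `parse_page`, the sample markup, and the URL in the comments are hypothetical; the article does not show which page it fetched. The network call is kept in its own function so the parsing step can be tried offline with a byte string standing in for `page.content`.

```python
from lxml import html


def download(url):
    """Fetch a page and return the requests Response object."""
    import requests  # imported lazily so parse_page() can be tried offline

    page = requests.get(url, timeout=10)
    page.raise_for_status()  # raise an error for 4xx/5xx replies
    return page


def parse_page(content):
    """Build an lxml element tree from the raw bytes of an HTML page."""
    return html.fromstring(content)


# Offline stand-in for page.content (hypothetical markup).
sample_content = (
    b"<html><head><title>Sample</title></head>"
    b"<body><p>Hi</p></body></html>"
)
tree = parse_page(sample_content)
print(tree.findtext(".//title"))

# With network access, the same flow would be:
# page = download("https://example.com/")   # hypothetical URL
# print(page.status_code, page.headers.get("Content-Type"))
# tree = parse_page(page.content)
```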

The tree now contains the whole HTML file in a nice tree structure, which we can go over in two different ways: XPath and CSSSelect. In this example, we will focus on the former.

XPath is a way of locating information in structured documents such as HTML or XML. The nodes are selected by following a path or steps.

The most useful path expressions are listed below:

| Expression | Description |
| --- | --- |
| nodename | Selects all nodes with the name "nodename" |
| / | Selects from the root node |
| // | Selects nodes in the document from the current node that match the selection, no matter where they are |
| . | Selects the current node |
| .. | Selects the parent of the current node |
| @ | Selects attributes |
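To see these expressions in action, here is a small sketch using the `xpath()` method of lxml elements. The `library`, `shelf`, and `item` tags are invented for the example. Note that in lxml a relative expression such as a bare node name is evaluated against the element you call `xpath()` on.

```python
from lxml import etree

doc = etree.fromstring(
    "<library><shelf id='a'><item lang='en'>First</item></shelf></library>"
)

# nodename: children of the context element with that name
shelves = doc.xpath("shelf")
# "/": an absolute path starting at the root of the document
roots = doc.xpath("/library")
# "//": matching nodes anywhere in the document
items = doc.xpath("//item")

item = items[0]
current_tag = item.xpath(".")[0].tag    # ".": the current node
parent_tag = item.xpath("..")[0].tag    # "..": the parent node
langs = item.xpath("@lang")             # "@": attribute values

print(current_tag, parent_tag, langs)
```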

Following are some path expressions and their results:

| Path Expression | Result |
| --- | --- |
| bookstore | Selects all nodes with the name "bookstore" |
| /bookstore | Selects the root element bookstore. Note: If the path starts with a slash (/) it always represents an absolute path to an element! |
| bookstore/book | Selects all book elements that are children of bookstore |
| //book | Selects all book elements, no matter where they are in the document |
| bookstore//book | Selects all book elements that are descendants of the bookstore element, no matter where they are under the bookstore element |
| //@lang | Selects all attributes that are named lang |
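The bookstore expressions can be tried on a tiny document like the one below, which is made up for illustration. Since lxml evaluates relative paths against the element `xpath()` is called on, the sketch uses absolute forms (`/bookstore/book`, `/bookstore//book`) when starting from the root element.

```python
from lxml import etree

xml = """
<bookstore>
  <book><title lang="en">Harry Potter</title></book>
  <section>
    <book><title lang="fr">Le Petit Prince</title></book>
  </section>
</bookstore>
"""
root = etree.fromstring(xml)

root_nodes = root.xpath("/bookstore")          # the root element
child_books = root.xpath("/bookstore/book")    # direct children only
all_books = root.xpath("//book")               # anywhere in the document
nested_books = root.xpath("/bookstore//book")  # any depth under bookstore
langs = root.xpath("//@lang")                  # all lang attribute values
relative_books = root.xpath("book")            # relative to root itself

print(len(child_books), len(all_books), langs)
```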


Let's get back to our scraping example. So far, we have downloaded the HTML web page and made a tree structure from it. We are using XPath to select nodes from this tree structure. As we want to get the top stories, we have to find out where they sit in the page. Upon analysis, we can see that the h3 tag with the data-analytic attribute contains this information.
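A sketch of that selection step is shown below. Because the article does not include the page it scraped, the markup here is a hypothetical stand-in: the attribute values, link targets, and headline texts are invented, and only the idea of filtering `h3` tags by the presence of a `data-analytic` attribute comes from the text.

```python
from lxml import html

# Hypothetical markup standing in for the downloaded news page.
sample = b"""
<html><body>
  <h3 data-analytic="top-story"><a href="/news/1">First headline</a></h3>
  <h3 data-analytic="top-story"><a href="/news/2">Second headline</a></h3>
  <h3><a href="/other">Not a top story</a></h3>
</body></html>
"""
tree = html.fromstring(sample)

# [@data-analytic] keeps only h3 nodes that carry that attribute.
titles = tree.xpath("//h3[@data-analytic]/a/text()")
links = tree.xpath("//h3[@data-analytic]/a/@href")

for story_title, story_link in zip(titles, links):
    print(story_title, "->", story_link)
```

Both `xpath()` calls return plain Python lists, which is what makes the later processing steps straightforward.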

Ta da! We have it saved in memory as lists. Now we can do all sorts of cool stuff with it: analyze it using Python, or save it in a file and share it with the world.
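One possible way to save the lists is a CSV file written with the standard library. The list contents and the file name `top_stories.csv` are placeholders; the file is written to a temporary directory so the sketch does not touch your working folder.

```python
import csv
import os
import tempfile

# Placeholder data standing in for the scraped lists.
titles = ["First headline", "Second headline"]
links = ["/news/1", "/news/2"]

out_path = os.path.join(tempfile.mkdtemp(), "top_stories.csv")

# newline="" lets the csv module control line endings itself.
with open(out_path, "w", newline="", encoding="utf-8") as handle:
    writer = csv.writer(handle)
    writer.writerow(["title", "link"])      # header row
    writer.writerows(zip(titles, links))    # one row per story

# Read the file back to confirm what was written.
with open(out_path, newline="", encoding="utf-8") as handle:
    rows = list(csv.reader(handle))
print(rows)
```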

We have covered most of the stuff related to web scraping with the Python lxml module and also understood how we can combine it with other Python modules to do some impressive work. Below are a few references which can be helpful in knowing more about it.

Do write a web scraper on your own and share your experience with us.


References

lxml – XML and HTML with Python

lxml.etree Tutorial

Parsing XML and HTML using lxml

Originally published at https://blog.datahut.co/beginners-guide-to-web-scraping-with-python-lxml/
