ML 1000 Knocks: Fine-tuning Image Classification with Keras InceptionV3 (STL10)

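In this series, I pick one sample from a machine learning framework each time and run it.

Along the way, I briefly summarize the know-how and ideas I pick up.

This time the topic is "Fine-tuning image classification with Keras InceptionV3".

The dataset is the labeled portion of STL10, which is a subset of ImageNet.

STL10 appears to be intended for unsupervised learning, but here I adopted it as a higher-resolution version of CIFAR10.

The inputs are 96x96 color images.

The training data consists of 5,000 labeled color images in 10 classes.

As in the previous installment, this one uses a well-known pretrained model.

The code in this article is based on "Fine-tune InceptionV3 on a new set of classes" from the page below, refactored and extended.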

https://keras.io/api/applications/

Preparation & Preprocessing

import tensorflow as tf

from tensorflow.keras.applications.inception_v3 import InceptionV3, preprocess_input, decode_predictions

from tensorflow.keras import layers, models, optimizers

from tensorflow.keras.preprocessing import image

from torchvision import datasets

trainset = datasets.STL10(".", split="train", download=True)

testset = datasets.STL10(".", split="test", download=True)

input_shape = (96, 96, 3)

n_classes = len(trainset.classes)

preprocess_x = lambda x: preprocess_input(tf.cast(tf.transpose(x.data, perm=[0, 2, 3, 1]), "float32"))

x_train, x_test = map(preprocess_x, [trainset, testset])

preprocess_y = lambda y: tf.one_hot(y.labels, n_classes)

y_train, y_test = map(preprocess_y, [trainset, testset])

Key points:

・ The datasets come from torchvision, so the data must be converted appropriately before Keras can use it

→ The image data is NCHW by default, so preprocess_x() converts it to NHWC

→ The image data is uint8 by default, so preprocess_x() converts it to float32

・ inception_v3.preprocess_input() can process a whole batch at once
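The conversion described in the points above can be sketched with plain NumPy. This is only an illustration: the random array is a hypothetical stand-in for `trainset.data`, and the final scaling line assumes that InceptionV3's `preprocess_input()` maps pixel values from [0, 255] to [-1, 1].

```python
import numpy as np

# Hypothetical stand-in for trainset.data: 2 images in NCHW layout, dtype uint8.
rng = np.random.default_rng(0)
x_nchw = rng.integers(0, 256, size=(2, 3, 96, 96), dtype=np.uint8)

# NCHW -> NHWC: move the channel axis to the end.
x_nhwc = np.transpose(x_nchw, (0, 2, 3, 1))

# uint8 -> float32, then scale [0, 255] to [-1, 1]
# (assumed to match what inception_v3.preprocess_input() does).
x_scaled = x_nhwc.astype(np.float32) / 127.5 - 1.0

print(x_nhwc.shape)    # (2, 96, 96, 3)
print(x_scaled.dtype)  # float32
```

The same pixel is addressed as `x_nchw[n, c, h, w]` before the transpose and as `x_nhwc[n, h, w, c]` after it.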

Modeling

base_model = InceptionV3(input_tensor=layers.Input(shape=input_shape), weights="imagenet", include_top=False)

x = base_model.output

x = layers.GlobalAveragePooling2D()(x)

x = layers.Dense(1024, activation="relu")(x)

predictions = layers.Dense(n_classes, activation="softmax")(x)

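model = models.Model(inputs=base_model.input, outputs=predictions)

Key points:

・ The InceptionV3 constructor argument weights="imagenet" loads the pretrained weights

・ The InceptionV3 constructor argument include_top=False omits the fully connected classification layers on the output side

・ A GlobalAveragePooling2D layer is inserted before the Dense layers

→ Possibly to match the output of the preceding mixed10 (Concatenate) layer, which is (None, 1, 1, 2048)? (a guess)

・ A Dense(1024) layer is inserted before the Dense(n_classes) layer

→ Possibly because the number of classes, 10, is very small? (a guess)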
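To see where the (None, 1, 1, 2048) shape comes from, the spatial size can be traced by hand. The sketch below is a rough reading of the Model Summary in the appendix: the kernel, stride, and padding values are assumptions inferred from the output shapes, and the final "mixed8 reduction" step is assumed from the 1x1 output mentioned above rather than read off the summary.

```python
def conv_out(size, kernel, stride=1, padding="valid"):
    """Output length of one spatial dimension after a conv or pooling layer."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    return (size - kernel) // stride + 1

# (label, kernel, stride, padding); only layers that can change the spatial size.
steps = [
    ("conv2d (3x3, stride 2)", 3, 2, "valid"),
    ("conv2d_1 (3x3)", 3, 1, "valid"),
    ("conv2d_2 (3x3, same)", 3, 1, "same"),
    ("max_pooling2d (3x3, stride 2)", 3, 2, "valid"),
    ("conv2d_3 (1x1)", 1, 1, "valid"),
    ("conv2d_4 (3x3)", 3, 1, "valid"),
    ("max_pooling2d_1 (3x3, stride 2)", 3, 2, "valid"),
    ("mixed3 reduction (3x3, stride 2)", 3, 2, "valid"),
    ("mixed8 reduction (3x3, stride 2)", 3, 2, "valid"),  # assumed
]

sizes = [96]  # 96x96 input
for label, kernel, stride, padding in steps:
    sizes.append(conv_out(sizes[-1], kernel, stride, padding))
    print(label, "->", sizes[-1])

print(sizes)  # [96, 47, 45, 45, 22, 22, 20, 9, 4, 1]
```

With a 1x1 feature map, GlobalAveragePooling2D averages over a single position, so in this setup it effectively just drops the two spatial axes and hands a 2048-dimensional vector to the Dense layers.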

Training

base_model.trainable = False

model.compile(optimizer="rmsprop", loss="categorical_crossentropy", metrics=["categorical_accuracy"])

# model.summary()

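model.fit(x_train, y_train, validation_split=0.1, epochs=10, batch_size=32)

Key points:

・ Temporarily excluding the CNN part of InceptionV3 from training makes training this huge model much lighter

→ Of the 23,911,210 weights in total, 21,802,784 are excluded from training, a reduction of about 91%

・ Setting the base_model.trainable property propagates to every layer contained in base_model

→ There is no need to change the property layer by layer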
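The weight counts above can be checked with a little arithmetic. The sketch below assumes the only trainable weights are those of the two Dense layers added on top (2048 inputs to 1024 units, then 1024 inputs to 10 units), and takes the frozen count from the figure quoted above.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

frozen = 21_802_784  # non-trainable weights, as quoted above
head = dense_params(2048, 1024) + dense_params(1024, 10)
total = frozen + head
frozen_pct = 100 * frozen / total

print(head)                  # 2108426
print(total)                 # 23911210
print(round(frozen_pct, 1))  # 91.2
```

The total matches the 23,911,210 reported in the article, which supports reading the "about 91%" as the share of weights that no longer need gradient updates.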

Training Log - First

Epoch 1/10

141/141 [==============================] - 67s 477ms/step - loss: 2.6808 - categorical_accuracy: 0.5704 - val_loss: 0.9581 - val_categorical_accuracy: 0.6740

Epoch 2/10

141/141 [==============================] - 67s 476ms/step - loss: 0.7867 - categorical_accuracy: 0.7502 - val_loss: 0.9999 - val_categorical_accuracy: 0.6960

Epoch 3/10

141/141 [==============================] - 67s 476ms/step - loss: 0.5548 - categorical_accuracy: 0.8251 - val_loss: 2.0996 - val_categorical_accuracy: 0.5560

Epoch 4/10

141/141 [==============================] - 66s 470ms/step - loss: 0.4241 - categorical_accuracy: 0.8620 - val_loss: 0.9725 - val_categorical_accuracy: 0.7360

Epoch 5/10

141/141 [==============================] - 66s 470ms/step - loss: 0.2879 - categorical_accuracy: 0.9073 - val_loss: 1.0802 - val_categorical_accuracy: 0.7400

Epoch 6/10

141/141 [==============================] - 67s 472ms/step - loss: 0.2149 - categorical_accuracy: 0.9329 - val_loss: 1.8768 - val_categorical_accuracy: 0.6820

Epoch 7/10

141/141 [==============================] - 66s 468ms/step - loss: 0.1680 - categorical_accuracy: 0.9480 - val_loss: 1.8489 - val_categorical_accuracy: 0.7140

Epoch 8/10

141/141 [==============================] - 66s 468ms/step - loss: 0.1513 - categorical_accuracy: 0.9571 - val_loss: 1.6035 - val_categorical_accuracy: 0.7340

Epoch 9/10

141/141 [==============================] - 66s 469ms/step - loss: 0.1245 - categorical_accuracy: 0.9702 - val_loss: 2.2195 - val_categorical_accuracy: 0.6760

Epoch 10/10

141/141 [==============================] - 66s 469ms/step - loss: 0.1118 - categorical_accuracy: 0.9720 - val_loss: 1.9437 - val_categorical_accuracy: 0.7280

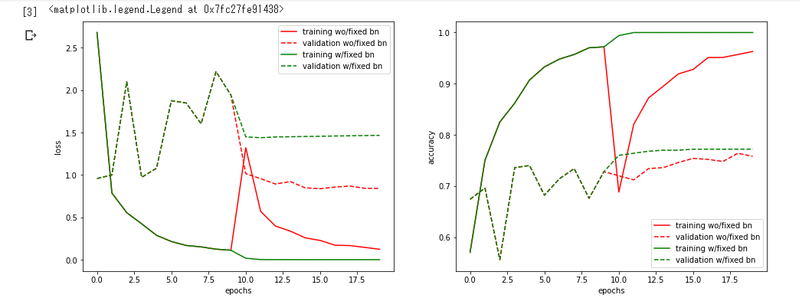

<tensorflow.python.keras.callbacks.History at 0x7f981011b5f8>

Key points:

・ Training loss decreases steadily, but validation loss fluctuates, which suggests overfitting

→ Unsurprising, since no hyperparameter tuning has been done

→ Training accuracy after 10 epochs is 97.2%

→ Validation accuracy after 10 epochs is 72.8%
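The overfitting pattern can be made concrete by looking at the numbers in the log above. The lists below are copied from that log; the analysis itself is just a small illustrative sketch, not part of the original code.

```python
# Per-epoch values copied from the first training log.
train_acc = [0.5704, 0.7502, 0.8251, 0.8620, 0.9073,
             0.9329, 0.9480, 0.9571, 0.9702, 0.9720]
val_acc = [0.6740, 0.6960, 0.5560, 0.7360, 0.7400,
           0.6820, 0.7140, 0.7340, 0.6760, 0.7280]
val_loss = [0.9581, 0.9999, 2.0996, 0.9725, 1.0802,
            1.8768, 1.8489, 1.6035, 2.2195, 1.9437]

# Epochs are 1-based in the log, so add 1 to the list index.
best_acc_epoch = max(range(len(val_acc)), key=val_acc.__getitem__) + 1
best_loss_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
final_gap = train_acc[-1] - val_acc[-1]

print(best_acc_epoch, best_loss_epoch, round(final_gap, 3))  # 5 1 0.244
```

Validation loss is already at its lowest in epoch 1 and validation accuracy peaks in epoch 5, while the gap between training and validation accuracy grows to about 24 points by epoch 10.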

Evaluating

model.evaluate(x_test, y_test)

250/250 [==============================] - 103s 413ms/step - loss: 1.7357 - categorical_accuracy: 0.7437

[1.7356685400009155, 0.7437499761581421]

Key points:

・ The test accuracy of 74.4% is not bad and is in line with the validation accuracy, but there still seems to be room for hyperparameter tuning and data augmentation

Fine-tuning

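# Unfreeze layers from index 249 onward, but keep every BatchNormalization layer frozen
for i, layer in enumerate(model.layers):
    layer.trainable = (not isinstance(layer, layers.BatchNormalization)) and (i > 248)

# learning_rate replaces the deprecated lr argument
model.compile(optimizer=optimizers.SGD(learning_rate=0.0001, momentum=0.9), loss="categorical_crossentropy", metrics=["categorical_accuracy"])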

model.fit(x_train, y_train, validation_split=0.1, epochs=10, batch_size=32)

Key points:

・ The last two Inception blocks (layers from index 249 onward) are switched to trainable

・ Based on the page below, BatchNormalization layers are kept out of training

→ https://keras.io/examples/vision/image_classification_efficientnet_fine_tuning/

→ The same page also advises against RMSprop here, on the grounds that it is affected too strongly by momentum

・ The learning rate is low, at 0.0001

・ The optimizer is optimizers.SGD, unlike the first round, which used RMSprop
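The unfreezing rule can be tried out without loading the real model. In the sketch below, `FakeLayer` and `apply_freeze_rule` are hypothetical stand-ins invented for illustration (they are not Keras APIs), and the toy stack of 252 layers does not reflect the real layer order of InceptionV3.

```python
from dataclasses import dataclass

@dataclass
class FakeLayer:
    """Hypothetical stand-in for a Keras layer; holds only what the rule needs."""
    name: str
    is_batch_norm: bool
    trainable: bool = False

def apply_freeze_rule(layers, first_trainable_index=249):
    """Unfreeze layers at or after the index, except BatchNormalization layers."""
    for i, layer in enumerate(layers):
        layer.trainable = (not layer.is_batch_norm) and i >= first_trainable_index
    return layers

# Toy stack: 252 layers where every third one (i % 3 == 1) plays a BatchNormalization.
toy_layers = [FakeLayer(f"layer_{i}", is_batch_norm=(i % 3 == 1)) for i in range(252)]
apply_freeze_rule(toy_layers)

trainable_names = [layer.name for layer in toy_layers if layer.trainable]
print(trainable_names)  # ['layer_249', 'layer_251']
```

Here `i >= 249` is the same condition as the `i > 248` used in the article's code; layer_250 stays frozen only because it stands in for a BatchNormalization layer.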

Training Log - Second

Epoch 1/10

141/141 [==============================] - 145s 1s/step - loss: 0.0177 - categorical_accuracy: 0.9940 - val_loss: 1.4493 - val_categorical_accuracy: 0.7600

Epoch 2/10

141/141 [==============================] - 145s 1s/step - loss: 0.0019 - categorical_accuracy: 1.0000 - val_loss: 1.4395 - val_categorical_accuracy: 0.7640

Epoch 3/10

141/141 [==============================] - 144s 1s/step - loss: 0.0010 - categorical_accuracy: 1.0000 - val_loss: 1.4487 - val_categorical_accuracy: 0.7680

Epoch 4/10

141/141 [==============================] - 144s 1s/step - loss: 8.2221e-04 - categorical_accuracy: 1.0000 - val_loss: 1.4510 - val_categorical_accuracy: 0.7700

Epoch 5/10

141/141 [==============================] - 146s 1s/step - loss: 7.1671e-04 - categorical_accuracy: 1.0000 - val_loss: 1.4541 - val_categorical_accuracy: 0.7700

Epoch 6/10

141/141 [==============================] - 145s 1s/step - loss: 6.4131e-04 - categorical_accuracy: 1.0000 - val_loss: 1.4568 - val_categorical_accuracy: 0.7720

Epoch 7/10

141/141 [==============================] - 145s 1s/step - loss: 5.8390e-04 - categorical_accuracy: 1.0000 - val_loss: 1.4598 - val_categorical_accuracy: 0.7720

Epoch 8/10

141/141 [==============================] - 145s 1s/step - loss: 5.3714e-04 - categorical_accuracy: 1.0000 - val_loss: 1.4626 - val_categorical_accuracy: 0.7720

Epoch 9/10

141/141 [==============================] - 145s 1s/step - loss: 4.9910e-04 - categorical_accuracy: 1.0000 - val_loss: 1.4653 - val_categorical_accuracy: 0.7720

Epoch 10/10

141/141 [==============================] - 144s 1s/step - loss: 4.6693e-04 - categorical_accuracy: 1.0000 - val_loss: 1.4676 - val_categorical_accuracy: 0.7720

<tensorflow.python.keras.callbacks.History at 0x7f980bb905f8>

Key points:

・ Training loss decreases steadily but bottoms out after about 3 epochs, with training accuracy reaching 100%

・ Validation loss is stable but stops improving after about 3 epochs

→ Validation accuracy after 10 epochs is 77.2%

・ In a separate run that also trained the BatchNormalization layers, accuracy dropped sharply in the first epoch (see the values in red in the figure below)
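The article does not investigate why training the BatchNormalization layers hurt accuracy. One commonly cited mechanism is that a BatchNormalization layer in training mode normalizes with the statistics of the current batch instead of the moving statistics stored with the pretrained weights. The NumPy sketch below illustrates only that difference; the activations and stored statistics are made-up values, and the learned scale and offset are omitted.

```python
import numpy as np

def batch_norm(x, mean, var, eps=1e-3):
    """Normalization step of batch norm (learned scale and offset omitted)."""
    return (x - mean) / np.sqrt(var + eps)

# Hypothetical moving statistics stored with the pretrained weights.
moving_mean, moving_var = 0.0, 1.0

# Hypothetical activations from a new dataset with a shifted distribution.
batch = np.array([2.0, 2.5, 3.0, 3.5], dtype=np.float32)

frozen_out = batch_norm(batch, moving_mean, moving_var)          # inference mode
training_out = batch_norm(batch, batch.mean(), batch.var())      # training mode

print(frozen_out.mean())    # stays near the shifted mean of the batch
print(training_out.mean())  # re-centered to roughly zero
```

The two modes feed noticeably different values to the following layers, which is one plausible (unverified here) reason for the sudden drop in the first epoch.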

Evaluating Again

250/250 [==============================] - 103s 410ms/step - loss: 1.3592 - categorical_accuracy: 0.7759

[1.3592036962509155, 0.7758749723434448]

Key points:

・ Test accuracy is now 77.6%, an improvement of 3.2 points over the first round

→ Not a bad result, but somewhat underwhelming as the payoff of fine-tuning
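For reference, the improvement can be recomputed from the two evaluate() results shown in this article. The relative error reduction is an extra way of looking at the same numbers and is not something the original analysis reports.

```python
# Test accuracies copied from the two model.evaluate() outputs.
first_acc = 0.7437499761581421
second_acc = 0.7758749723434448

# Absolute improvement in percentage points.
gain_points = (second_acc - first_acc) * 100

# Share of the first round's test errors that fine-tuning removed.
error_reduction_pct = (second_acc - first_acc) / (1 - first_acc) * 100

print(round(gain_points, 1))          # 3.2
print(round(error_reduction_pct, 1))  # 12.5
```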

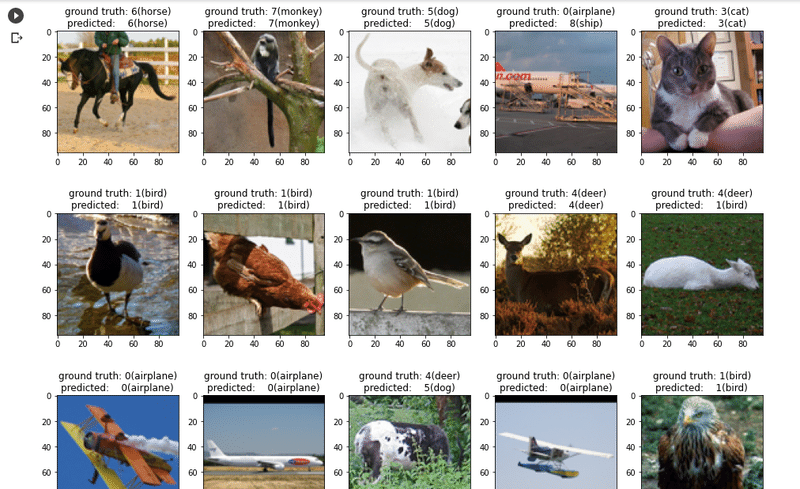

Appendix: Inference

import matplotlib.pyplot as plt

%matplotlib inline

plt.rcParams["figure.figsize"] = (16, 12)

# Show the first 15 test images with their ground-truth and predicted classes
for i in range(15):
    plt.subplot(3, 5, 1 + i)
    y_pred = model.predict(tf.expand_dims(x_test[i], 0))
    # Convert to plain ints so they format cleanly and can index the class list
    true_idx = int(tf.argmax(y_test[i]))
    pred_idx = int(y_pred.argmax())
    plt.imshow(image.array_to_img(x_test[i]))
    plt.title("ground truth: {}({})\npredicted: {}({})".format(
        true_idx, trainset.classes[true_idx], pred_idx, trainset.classes[pred_idx]
    ))

Appendix: Model Summary

Note: this is the summary before fine-tuning

Note: it is very long

Model: "functional_1"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 96, 96, 3)] 0

__________________________________________________________________________________________________

conv2d (Conv2D) (None, 47, 47, 32) 864 input_1[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 47, 47, 32) 96 conv2d[0][0]

__________________________________________________________________________________________________

activation (Activation) (None, 47, 47, 32) 0 batch_normalization[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 45, 45, 32) 9216 activation[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 45, 45, 32) 96 conv2d_1[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 45, 45, 32) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 45, 45, 64) 18432 activation_1[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 45, 45, 64) 192 conv2d_2[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 45, 45, 64) 0 batch_normalization_2[0][0]

__________________________________________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 22, 22, 64) 0 activation_2[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 22, 22, 80) 5120 max_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 22, 22, 80) 240 conv2d_3[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 22, 22, 80) 0 batch_normalization_3[0][0]

__________________________________________________________________________________________________

conv2d_4 (Conv2D) (None, 20, 20, 192) 138240 activation_3[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 20, 20, 192) 576 conv2d_4[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 20, 20, 192) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

max_pooling2d_1 (MaxPooling2D) (None, 9, 9, 192) 0 activation_4[0][0]

__________________________________________________________________________________________________

conv2d_8 (Conv2D) (None, 9, 9, 64) 12288 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 9, 9, 64) 192 conv2d_8[0][0]

__________________________________________________________________________________________________

activation_8 (Activation) (None, 9, 9, 64) 0 batch_normalization_8[0][0]

__________________________________________________________________________________________________

conv2d_6 (Conv2D) (None, 9, 9, 48) 9216 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

conv2d_9 (Conv2D) (None, 9, 9, 96) 55296 activation_8[0][0]

__________________________________________________________________________________________________

batch_normalization_6 (BatchNor (None, 9, 9, 48) 144 conv2d_6[0][0]

__________________________________________________________________________________________________

batch_normalization_9 (BatchNor (None, 9, 9, 96) 288 conv2d_9[0][0]

__________________________________________________________________________________________________

activation_6 (Activation) (None, 9, 9, 48) 0 batch_normalization_6[0][0]

__________________________________________________________________________________________________

activation_9 (Activation) (None, 9, 9, 96) 0 batch_normalization_9[0][0]

__________________________________________________________________________________________________

average_pooling2d (AveragePooli (None, 9, 9, 192) 0 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

conv2d_5 (Conv2D) (None, 9, 9, 64) 12288 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

conv2d_7 (Conv2D) (None, 9, 9, 64) 76800 activation_6[0][0]

__________________________________________________________________________________________________

conv2d_10 (Conv2D) (None, 9, 9, 96) 82944 activation_9[0][0]

__________________________________________________________________________________________________

conv2d_11 (Conv2D) (None, 9, 9, 32) 6144 average_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_5 (BatchNor (None, 9, 9, 64) 192 conv2d_5[0][0]

__________________________________________________________________________________________________

batch_normalization_7 (BatchNor (None, 9, 9, 64) 192 conv2d_7[0][0]

__________________________________________________________________________________________________

batch_normalization_10 (BatchNo (None, 9, 9, 96) 288 conv2d_10[0][0]

__________________________________________________________________________________________________

batch_normalization_11 (BatchNo (None, 9, 9, 32) 96 conv2d_11[0][0]

__________________________________________________________________________________________________

activation_5 (Activation) (None, 9, 9, 64) 0 batch_normalization_5[0][0]

__________________________________________________________________________________________________

activation_7 (Activation) (None, 9, 9, 64) 0 batch_normalization_7[0][0]

__________________________________________________________________________________________________

activation_10 (Activation) (None, 9, 9, 96) 0 batch_normalization_10[0][0]

__________________________________________________________________________________________________

activation_11 (Activation) (None, 9, 9, 32) 0 batch_normalization_11[0][0]

__________________________________________________________________________________________________

mixed0 (Concatenate) (None, 9, 9, 256) 0 activation_5[0][0]

activation_7[0][0]

activation_10[0][0]

activation_11[0][0]

__________________________________________________________________________________________________

conv2d_15 (Conv2D) (None, 9, 9, 64) 16384 mixed0[0][0]

__________________________________________________________________________________________________

batch_normalization_15 (BatchNo (None, 9, 9, 64) 192 conv2d_15[0][0]

__________________________________________________________________________________________________

activation_15 (Activation) (None, 9, 9, 64) 0 batch_normalization_15[0][0]

__________________________________________________________________________________________________

conv2d_13 (Conv2D) (None, 9, 9, 48) 12288 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_16 (Conv2D) (None, 9, 9, 96) 55296 activation_15[0][0]

__________________________________________________________________________________________________

batch_normalization_13 (BatchNo (None, 9, 9, 48) 144 conv2d_13[0][0]

__________________________________________________________________________________________________

batch_normalization_16 (BatchNo (None, 9, 9, 96) 288 conv2d_16[0][0]

__________________________________________________________________________________________________

activation_13 (Activation) (None, 9, 9, 48) 0 batch_normalization_13[0][0]

__________________________________________________________________________________________________

activation_16 (Activation) (None, 9, 9, 96) 0 batch_normalization_16[0][0]

__________________________________________________________________________________________________

average_pooling2d_1 (AveragePoo (None, 9, 9, 256) 0 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_12 (Conv2D) (None, 9, 9, 64) 16384 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_14 (Conv2D) (None, 9, 9, 64) 76800 activation_13[0][0]

__________________________________________________________________________________________________

conv2d_17 (Conv2D) (None, 9, 9, 96) 82944 activation_16[0][0]

__________________________________________________________________________________________________

conv2d_18 (Conv2D) (None, 9, 9, 64) 16384 average_pooling2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_12 (BatchNo (None, 9, 9, 64) 192 conv2d_12[0][0]

__________________________________________________________________________________________________

batch_normalization_14 (BatchNo (None, 9, 9, 64) 192 conv2d_14[0][0]

__________________________________________________________________________________________________

batch_normalization_17 (BatchNo (None, 9, 9, 96) 288 conv2d_17[0][0]

__________________________________________________________________________________________________

batch_normalization_18 (BatchNo (None, 9, 9, 64) 192 conv2d_18[0][0]

__________________________________________________________________________________________________

activation_12 (Activation) (None, 9, 9, 64) 0 batch_normalization_12[0][0]

__________________________________________________________________________________________________

activation_14 (Activation) (None, 9, 9, 64) 0 batch_normalization_14[0][0]

__________________________________________________________________________________________________

activation_17 (Activation) (None, 9, 9, 96) 0 batch_normalization_17[0][0]

__________________________________________________________________________________________________

activation_18 (Activation) (None, 9, 9, 64) 0 batch_normalization_18[0][0]

__________________________________________________________________________________________________

mixed1 (Concatenate) (None, 9, 9, 288) 0 activation_12[0][0]

activation_14[0][0]

activation_17[0][0]

activation_18[0][0]

__________________________________________________________________________________________________

conv2d_22 (Conv2D) (None, 9, 9, 64) 18432 mixed1[0][0]

__________________________________________________________________________________________________

batch_normalization_22 (BatchNo (None, 9, 9, 64) 192 conv2d_22[0][0]

__________________________________________________________________________________________________

activation_22 (Activation) (None, 9, 9, 64) 0 batch_normalization_22[0][0]

__________________________________________________________________________________________________

conv2d_20 (Conv2D) (None, 9, 9, 48) 13824 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_23 (Conv2D) (None, 9, 9, 96) 55296 activation_22[0][0]

__________________________________________________________________________________________________

batch_normalization_20 (BatchNo (None, 9, 9, 48) 144 conv2d_20[0][0]

__________________________________________________________________________________________________

batch_normalization_23 (BatchNo (None, 9, 9, 96) 288 conv2d_23[0][0]

__________________________________________________________________________________________________

activation_20 (Activation) (None, 9, 9, 48) 0 batch_normalization_20[0][0]

__________________________________________________________________________________________________

activation_23 (Activation) (None, 9, 9, 96) 0 batch_normalization_23[0][0]

__________________________________________________________________________________________________

average_pooling2d_2 (AveragePoo (None, 9, 9, 288) 0 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_19 (Conv2D) (None, 9, 9, 64) 18432 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_21 (Conv2D) (None, 9, 9, 64) 76800 activation_20[0][0]

__________________________________________________________________________________________________

conv2d_24 (Conv2D) (None, 9, 9, 96) 82944 activation_23[0][0]

__________________________________________________________________________________________________

conv2d_25 (Conv2D) (None, 9, 9, 64) 18432 average_pooling2d_2[0][0]

__________________________________________________________________________________________________

batch_normalization_19 (BatchNo (None, 9, 9, 64) 192 conv2d_19[0][0]

__________________________________________________________________________________________________

batch_normalization_21 (BatchNo (None, 9, 9, 64) 192 conv2d_21[0][0]

__________________________________________________________________________________________________

batch_normalization_24 (BatchNo (None, 9, 9, 96) 288 conv2d_24[0][0]

__________________________________________________________________________________________________

batch_normalization_25 (BatchNo (None, 9, 9, 64) 192 conv2d_25[0][0]

__________________________________________________________________________________________________

activation_19 (Activation) (None, 9, 9, 64) 0 batch_normalization_19[0][0]

__________________________________________________________________________________________________

activation_21 (Activation) (None, 9, 9, 64) 0 batch_normalization_21[0][0]

__________________________________________________________________________________________________

activation_24 (Activation) (None, 9, 9, 96) 0 batch_normalization_24[0][0]

__________________________________________________________________________________________________

activation_25 (Activation) (None, 9, 9, 64) 0 batch_normalization_25[0][0]

__________________________________________________________________________________________________

mixed2 (Concatenate) (None, 9, 9, 288) 0 activation_19[0][0]

activation_21[0][0]

activation_24[0][0]

activation_25[0][0]

__________________________________________________________________________________________________

conv2d_27 (Conv2D) (None, 9, 9, 64) 18432 mixed2[0][0]

__________________________________________________________________________________________________

batch_normalization_27 (BatchNo (None, 9, 9, 64) 192 conv2d_27[0][0]

__________________________________________________________________________________________________

activation_27 (Activation) (None, 9, 9, 64) 0 batch_normalization_27[0][0]

__________________________________________________________________________________________________

conv2d_28 (Conv2D) (None, 9, 9, 96) 55296 activation_27[0][0]

__________________________________________________________________________________________________

batch_normalization_28 (BatchNo (None, 9, 9, 96) 288 conv2d_28[0][0]

__________________________________________________________________________________________________

activation_28 (Activation) (None, 9, 9, 96) 0 batch_normalization_28[0][0]

__________________________________________________________________________________________________

conv2d_26 (Conv2D) (None, 4, 4, 384) 995328 mixed2[0][0]

__________________________________________________________________________________________________

conv2d_29 (Conv2D) (None, 4, 4, 96) 82944 activation_28[0][0]

__________________________________________________________________________________________________

batch_normalization_26 (BatchNo (None, 4, 4, 384) 1152 conv2d_26[0][0]

__________________________________________________________________________________________________

batch_normalization_29 (BatchNo (None, 4, 4, 96) 288 conv2d_29[0][0]

__________________________________________________________________________________________________

activation_26 (Activation) (None, 4, 4, 384) 0 batch_normalization_26[0][0]

__________________________________________________________________________________________________

activation_29 (Activation) (None, 4, 4, 96) 0 batch_normalization_29[0][0]

__________________________________________________________________________________________________

max_pooling2d_2 (MaxPooling2D) (None, 4, 4, 288) 0 mixed2[0][0]

__________________________________________________________________________________________________

mixed3 (Concatenate) (None, 4, 4, 768) 0 activation_26[0][0]

activation_29[0][0]

max_pooling2d_2[0][0]

__________________________________________________________________________________________________

conv2d_34 (Conv2D) (None, 4, 4, 128) 98304 mixed3[0][0]

__________________________________________________________________________________________________

batch_normalization_34 (BatchNo (None, 4, 4, 128) 384 conv2d_34[0][0]

__________________________________________________________________________________________________

activation_34 (Activation) (None, 4, 4, 128) 0 batch_normalization_34[0][0]

__________________________________________________________________________________________________

conv2d_35 (Conv2D) (None, 4, 4, 128) 114688 activation_34[0][0]

__________________________________________________________________________________________________

batch_normalization_35 (BatchNo (None, 4, 4, 128) 384 conv2d_35[0][0]

__________________________________________________________________________________________________

activation_35 (Activation) (None, 4, 4, 128) 0 batch_normalization_35[0][0]

__________________________________________________________________________________________________

conv2d_31 (Conv2D) (None, 4, 4, 128) 98304 mixed3[0][0]

__________________________________________________________________________________________________

conv2d_36 (Conv2D) (None, 4, 4, 128) 114688 activation_35[0][0]

__________________________________________________________________________________________________

batch_normalization_31 (BatchNo (None, 4, 4, 128) 384 conv2d_31[0][0]

__________________________________________________________________________________________________

batch_normalization_36 (BatchNo (None, 4, 4, 128) 384 conv2d_36[0][0]

__________________________________________________________________________________________________

activation_31 (Activation) (None, 4, 4, 128) 0 batch_normalization_31[0][0]

__________________________________________________________________________________________________

activation_36 (Activation) (None, 4, 4, 128) 0 batch_normalization_36[0][0]

__________________________________________________________________________________________________

conv2d_32 (Conv2D) (None, 4, 4, 128) 114688 activation_31[0][0]

__________________________________________________________________________________________________

conv2d_37 (Conv2D) (None, 4, 4, 128) 114688 activation_36[0][0]

__________________________________________________________________________________________________

batch_normalization_32 (BatchNo (None, 4, 4, 128) 384 conv2d_32[0][0]

__________________________________________________________________________________________________

batch_normalization_37 (BatchNo (None, 4, 4, 128) 384 conv2d_37[0][0]

__________________________________________________________________________________________________

activation_32 (Activation) (None, 4, 4, 128) 0 batch_normalization_32[0][0]

__________________________________________________________________________________________________

activation_37 (Activation) (None, 4, 4, 128) 0 batch_normalization_37[0][0]

__________________________________________________________________________________________________

average_pooling2d_3 (AveragePoo (None, 4, 4, 768) 0 mixed3[0][0]

__________________________________________________________________________________________________

conv2d_30 (Conv2D) (None, 4, 4, 192) 147456 mixed3[0][0]

__________________________________________________________________________________________________

conv2d_33 (Conv2D) (None, 4, 4, 192) 172032 activation_32[0][0]

__________________________________________________________________________________________________

conv2d_38 (Conv2D) (None, 4, 4, 192) 172032 activation_37[0][0]

__________________________________________________________________________________________________

conv2d_39 (Conv2D) (None, 4, 4, 192) 147456 average_pooling2d_3[0][0]

__________________________________________________________________________________________________

batch_normalization_30 (BatchNo (None, 4, 4, 192) 576 conv2d_30[0][0]

__________________________________________________________________________________________________

batch_normalization_33 (BatchNo (None, 4, 4, 192) 576 conv2d_33[0][0]

__________________________________________________________________________________________________

batch_normalization_38 (BatchNo (None, 4, 4, 192) 576 conv2d_38[0][0]

__________________________________________________________________________________________________

batch_normalization_39 (BatchNo (None, 4, 4, 192) 576 conv2d_39[0][0]

__________________________________________________________________________________________________

activation_30 (Activation) (None, 4, 4, 192) 0 batch_normalization_30[0][0]

__________________________________________________________________________________________________

activation_33 (Activation) (None, 4, 4, 192) 0 batch_normalization_33[0][0]

__________________________________________________________________________________________________

activation_38 (Activation) (None, 4, 4, 192) 0 batch_normalization_38[0][0]

__________________________________________________________________________________________________

activation_39 (Activation) (None, 4, 4, 192) 0 batch_normalization_39[0][0]

__________________________________________________________________________________________________

mixed4 (Concatenate) (None, 4, 4, 768) 0 activation_30[0][0]

activation_33[0][0]

activation_38[0][0]

activation_39[0][0]

__________________________________________________________________________________________________

conv2d_44 (Conv2D) (None, 4, 4, 160) 122880 mixed4[0][0]

__________________________________________________________________________________________________

batch_normalization_44 (BatchNo (None, 4, 4, 160) 480 conv2d_44[0][0]

__________________________________________________________________________________________________

activation_44 (Activation) (None, 4, 4, 160) 0 batch_normalization_44[0][0]

__________________________________________________________________________________________________

conv2d_45 (Conv2D) (None, 4, 4, 160) 179200 activation_44[0][0]

__________________________________________________________________________________________________

batch_normalization_45 (BatchNo (None, 4, 4, 160) 480 conv2d_45[0][0]

__________________________________________________________________________________________________

activation_45 (Activation) (None, 4, 4, 160) 0 batch_normalization_45[0][0]

__________________________________________________________________________________________________

conv2d_41 (Conv2D) (None, 4, 4, 160) 122880 mixed4[0][0]

__________________________________________________________________________________________________

conv2d_46 (Conv2D) (None, 4, 4, 160) 179200 activation_45[0][0]

__________________________________________________________________________________________________

batch_normalization_41 (BatchNo (None, 4, 4, 160) 480 conv2d_41[0][0]

__________________________________________________________________________________________________

batch_normalization_46 (BatchNo (None, 4, 4, 160) 480 conv2d_46[0][0]

__________________________________________________________________________________________________

activation_41 (Activation) (None, 4, 4, 160) 0 batch_normalization_41[0][0]

__________________________________________________________________________________________________

activation_46 (Activation) (None, 4, 4, 160) 0 batch_normalization_46[0][0]

__________________________________________________________________________________________________

conv2d_42 (Conv2D) (None, 4, 4, 160) 179200 activation_41[0][0]

__________________________________________________________________________________________________

conv2d_47 (Conv2D) (None, 4, 4, 160) 179200 activation_46[0][0]

__________________________________________________________________________________________________

batch_normalization_42 (BatchNo (None, 4, 4, 160) 480 conv2d_42[0][0]

__________________________________________________________________________________________________

batch_normalization_47 (BatchNo (None, 4, 4, 160) 480 conv2d_47[0][0]

__________________________________________________________________________________________________

activation_42 (Activation) (None, 4, 4, 160) 0 batch_normalization_42[0][0]

__________________________________________________________________________________________________

activation_47 (Activation) (None, 4, 4, 160) 0 batch_normalization_47[0][0]

__________________________________________________________________________________________________

average_pooling2d_4 (AveragePoo (None, 4, 4, 768) 0 mixed4[0][0]

__________________________________________________________________________________________________

conv2d_40 (Conv2D) (None, 4, 4, 192) 147456 mixed4[0][0]

__________________________________________________________________________________________________

conv2d_43 (Conv2D) (None, 4, 4, 192) 215040 activation_42[0][0]

__________________________________________________________________________________________________

conv2d_48 (Conv2D) (None, 4, 4, 192) 215040 activation_47[0][0]

__________________________________________________________________________________________________

conv2d_49 (Conv2D) (None, 4, 4, 192) 147456 average_pooling2d_4[0][0]

__________________________________________________________________________________________________

batch_normalization_40 (BatchNo (None, 4, 4, 192) 576 conv2d_40[0][0]

__________________________________________________________________________________________________

batch_normalization_43 (BatchNo (None, 4, 4, 192) 576 conv2d_43[0][0]

__________________________________________________________________________________________________

batch_normalization_48 (BatchNo (None, 4, 4, 192) 576 conv2d_48[0][0]

__________________________________________________________________________________________________

batch_normalization_49 (BatchNo (None, 4, 4, 192) 576 conv2d_49[0][0]

__________________________________________________________________________________________________

activation_40 (Activation) (None, 4, 4, 192) 0 batch_normalization_40[0][0]

__________________________________________________________________________________________________

activation_43 (Activation) (None, 4, 4, 192) 0 batch_normalization_43[0][0]

__________________________________________________________________________________________________

activation_48 (Activation) (None, 4, 4, 192) 0 batch_normalization_48[0][0]

__________________________________________________________________________________________________

activation_49 (Activation) (None, 4, 4, 192) 0 batch_normalization_49[0][0]

__________________________________________________________________________________________________

mixed5 (Concatenate) (None, 4, 4, 768) 0 activation_40[0][0]

activation_43[0][0]

activation_48[0][0]

activation_49[0][0]

__________________________________________________________________________________________________

conv2d_54 (Conv2D) (None, 4, 4, 160) 122880 mixed5[0][0]

__________________________________________________________________________________________________

batch_normalization_54 (BatchNo (None, 4, 4, 160) 480 conv2d_54[0][0]

__________________________________________________________________________________________________

activation_54 (Activation) (None, 4, 4, 160) 0 batch_normalization_54[0][0]

__________________________________________________________________________________________________

conv2d_55 (Conv2D) (None, 4, 4, 160) 179200 activation_54[0][0]

__________________________________________________________________________________________________

batch_normalization_55 (BatchNo (None, 4, 4, 160) 480 conv2d_55[0][0]

__________________________________________________________________________________________________

activation_55 (Activation) (None, 4, 4, 160) 0 batch_normalization_55[0][0]

__________________________________________________________________________________________________

conv2d_51 (Conv2D) (None, 4, 4, 160) 122880 mixed5[0][0]

__________________________________________________________________________________________________

conv2d_56 (Conv2D) (None, 4, 4, 160) 179200 activation_55[0][0]

__________________________________________________________________________________________________

batch_normalization_51 (BatchNo (None, 4, 4, 160) 480 conv2d_51[0][0]

__________________________________________________________________________________________________

batch_normalization_56 (BatchNo (None, 4, 4, 160) 480 conv2d_56[0][0]

__________________________________________________________________________________________________

activation_51 (Activation) (None, 4, 4, 160) 0 batch_normalization_51[0][0]

__________________________________________________________________________________________________

activation_56 (Activation) (None, 4, 4, 160) 0 batch_normalization_56[0][0]

__________________________________________________________________________________________________

conv2d_52 (Conv2D) (None, 4, 4, 160) 179200 activation_51[0][0]

__________________________________________________________________________________________________

conv2d_57 (Conv2D) (None, 4, 4, 160) 179200 activation_56[0][0]

__________________________________________________________________________________________________

batch_normalization_52 (BatchNo (None, 4, 4, 160) 480 conv2d_52[0][0]

__________________________________________________________________________________________________

batch_normalization_57 (BatchNo (None, 4, 4, 160) 480 conv2d_57[0][0]

__________________________________________________________________________________________________

activation_52 (Activation) (None, 4, 4, 160) 0 batch_normalization_52[0][0]

__________________________________________________________________________________________________

activation_57 (Activation) (None, 4, 4, 160) 0 batch_normalization_57[0][0]

__________________________________________________________________________________________________

average_pooling2d_5 (AveragePoo (None, 4, 4, 768) 0 mixed5[0][0]

__________________________________________________________________________________________________

conv2d_50 (Conv2D) (None, 4, 4, 192) 147456 mixed5[0][0]

__________________________________________________________________________________________________

conv2d_53 (Conv2D) (None, 4, 4, 192) 215040 activation_52[0][0]

__________________________________________________________________________________________________

conv2d_58 (Conv2D) (None, 4, 4, 192) 215040 activation_57[0][0]

__________________________________________________________________________________________________

conv2d_59 (Conv2D) (None, 4, 4, 192) 147456 average_pooling2d_5[0][0]

__________________________________________________________________________________________________

batch_normalization_50 (BatchNo (None, 4, 4, 192) 576 conv2d_50[0][0]

__________________________________________________________________________________________________

batch_normalization_53 (BatchNo (None, 4, 4, 192) 576 conv2d_53[0][0]

__________________________________________________________________________________________________

batch_normalization_58 (BatchNo (None, 4, 4, 192) 576 conv2d_58[0][0]

__________________________________________________________________________________________________

batch_normalization_59 (BatchNo (None, 4, 4, 192) 576 conv2d_59[0][0]

__________________________________________________________________________________________________

activation_50 (Activation) (None, 4, 4, 192) 0 batch_normalization_50[0][0]

__________________________________________________________________________________________________

activation_53 (Activation) (None, 4, 4, 192) 0 batch_normalization_53[0][0]

__________________________________________________________________________________________________

activation_58 (Activation) (None, 4, 4, 192) 0 batch_normalization_58[0][0]

__________________________________________________________________________________________________

activation_59 (Activation) (None, 4, 4, 192) 0 batch_normalization_59[0][0]

__________________________________________________________________________________________________

mixed6 (Concatenate) (None, 4, 4, 768) 0 activation_50[0][0]

activation_53[0][0]

activation_58[0][0]

activation_59[0][0]

__________________________________________________________________________________________________

conv2d_64 (Conv2D) (None, 4, 4, 192) 147456 mixed6[0][0]

__________________________________________________________________________________________________

batch_normalization_64 (BatchNo (None, 4, 4, 192) 576 conv2d_64[0][0]

__________________________________________________________________________________________________

activation_64 (Activation) (None, 4, 4, 192) 0 batch_normalization_64[0][0]

__________________________________________________________________________________________________

conv2d_65 (Conv2D) (None, 4, 4, 192) 258048 activation_64[0][0]

__________________________________________________________________________________________________

batch_normalization_65 (BatchNo (None, 4, 4, 192) 576 conv2d_65[0][0]

__________________________________________________________________________________________________

activation_65 (Activation) (None, 4, 4, 192) 0 batch_normalization_65[0][0]

__________________________________________________________________________________________________

conv2d_61 (Conv2D) (None, 4, 4, 192) 147456 mixed6[0][0]

__________________________________________________________________________________________________

conv2d_66 (Conv2D) (None, 4, 4, 192) 258048 activation_65[0][0]

__________________________________________________________________________________________________

batch_normalization_61 (BatchNo (None, 4, 4, 192) 576 conv2d_61[0][0]

__________________________________________________________________________________________________

batch_normalization_66 (BatchNo (None, 4, 4, 192) 576 conv2d_66[0][0]

__________________________________________________________________________________________________

activation_61 (Activation) (None, 4, 4, 192) 0 batch_normalization_61[0][0]

__________________________________________________________________________________________________

activation_66 (Activation) (None, 4, 4, 192) 0 batch_normalization_66[0][0]

__________________________________________________________________________________________________

conv2d_62 (Conv2D) (None, 4, 4, 192) 258048 activation_61[0][0]

__________________________________________________________________________________________________

conv2d_67 (Conv2D) (None, 4, 4, 192) 258048 activation_66[0][0]

__________________________________________________________________________________________________

batch_normalization_62 (BatchNo (None, 4, 4, 192) 576 conv2d_62[0][0]

__________________________________________________________________________________________________

batch_normalization_67 (BatchNo (None, 4, 4, 192) 576 conv2d_67[0][0]

__________________________________________________________________________________________________

activation_62 (Activation) (None, 4, 4, 192) 0 batch_normalization_62[0][0]

__________________________________________________________________________________________________

activation_67 (Activation) (None, 4, 4, 192) 0 batch_normalization_67[0][0]

__________________________________________________________________________________________________

average_pooling2d_6 (AveragePoo (None, 4, 4, 768) 0 mixed6[0][0]

__________________________________________________________________________________________________

conv2d_60 (Conv2D) (None, 4, 4, 192) 147456 mixed6[0][0]

__________________________________________________________________________________________________

conv2d_63 (Conv2D) (None, 4, 4, 192) 258048 activation_62[0][0]

__________________________________________________________________________________________________

conv2d_68 (Conv2D) (None, 4, 4, 192) 258048 activation_67[0][0]

__________________________________________________________________________________________________

conv2d_69 (Conv2D) (None, 4, 4, 192) 147456 average_pooling2d_6[0][0]

__________________________________________________________________________________________________

batch_normalization_60 (BatchNo (None, 4, 4, 192) 576 conv2d_60[0][0]

__________________________________________________________________________________________________

batch_normalization_63 (BatchNo (None, 4, 4, 192) 576 conv2d_63[0][0]

__________________________________________________________________________________________________

batch_normalization_68 (BatchNo (None, 4, 4, 192) 576 conv2d_68[0][0]

__________________________________________________________________________________________________

batch_normalization_69 (BatchNo (None, 4, 4, 192) 576 conv2d_69[0][0]

__________________________________________________________________________________________________

activation_60 (Activation) (None, 4, 4, 192) 0 batch_normalization_60[0][0]

__________________________________________________________________________________________________

activation_63 (Activation) (None, 4, 4, 192) 0 batch_normalization_63[0][0]

__________________________________________________________________________________________________

activation_68 (Activation) (None, 4, 4, 192) 0 batch_normalization_68[0][0]

__________________________________________________________________________________________________

activation_69 (Activation) (None, 4, 4, 192) 0 batch_normalization_69[0][0]

__________________________________________________________________________________________________

mixed7 (Concatenate) (None, 4, 4, 768) 0 activation_60[0][0]

activation_63[0][0]

activation_68[0][0]

activation_69[0][0]

__________________________________________________________________________________________________

conv2d_72 (Conv2D) (None, 4, 4, 192) 147456 mixed7[0][0]

__________________________________________________________________________________________________

batch_normalization_72 (BatchNo (None, 4, 4, 192) 576 conv2d_72[0][0]

__________________________________________________________________________________________________

activation_72 (Activation) (None, 4, 4, 192) 0 batch_normalization_72[0][0]

__________________________________________________________________________________________________

conv2d_73 (Conv2D) (None, 4, 4, 192) 258048 activation_72[0][0]

__________________________________________________________________________________________________

batch_normalization_73 (BatchNo (None, 4, 4, 192) 576 conv2d_73[0][0]

__________________________________________________________________________________________________

activation_73 (Activation) (None, 4, 4, 192) 0 batch_normalization_73[0][0]

__________________________________________________________________________________________________

conv2d_70 (Conv2D) (None, 4, 4, 192) 147456 mixed7[0][0]

__________________________________________________________________________________________________

conv2d_74 (Conv2D) (None, 4, 4, 192) 258048 activation_73[0][0]

__________________________________________________________________________________________________

batch_normalization_70 (BatchNo (None, 4, 4, 192) 576 conv2d_70[0][0]

__________________________________________________________________________________________________

batch_normalization_74 (BatchNo (None, 4, 4, 192) 576 conv2d_74[0][0]

__________________________________________________________________________________________________

activation_70 (Activation) (None, 4, 4, 192) 0 batch_normalization_70[0][0]

__________________________________________________________________________________________________

activation_74 (Activation) (None, 4, 4, 192) 0 batch_normalization_74[0][0]

__________________________________________________________________________________________________

conv2d_71 (Conv2D) (None, 1, 1, 320) 552960 activation_70[0][0]

__________________________________________________________________________________________________

conv2d_75 (Conv2D) (None, 1, 1, 192) 331776 activation_74[0][0]

__________________________________________________________________________________________________

batch_normalization_71 (BatchNo (None, 1, 1, 320) 960 conv2d_71[0][0]

__________________________________________________________________________________________________

batch_normalization_75 (BatchNo (None, 1, 1, 192) 576 conv2d_75[0][0]

__________________________________________________________________________________________________

activation_71 (Activation) (None, 1, 1, 320) 0 batch_normalization_71[0][0]

__________________________________________________________________________________________________

activation_75 (Activation) (None, 1, 1, 192) 0 batch_normalization_75[0][0]

__________________________________________________________________________________________________

max_pooling2d_3 (MaxPooling2D) (None, 1, 1, 768) 0 mixed7[0][0]

__________________________________________________________________________________________________

mixed8 (Concatenate) (None, 1, 1, 1280) 0 activation_71[0][0]

activation_75[0][0]

max_pooling2d_3[0][0]

__________________________________________________________________________________________________

conv2d_80 (Conv2D) (None, 1, 1, 448) 573440 mixed8[0][0]

__________________________________________________________________________________________________

batch_normalization_80 (BatchNo (None, 1, 1, 448) 1344 conv2d_80[0][0]

__________________________________________________________________________________________________

activation_80 (Activation) (None, 1, 1, 448) 0 batch_normalization_80[0][0]

__________________________________________________________________________________________________

conv2d_77 (Conv2D) (None, 1, 1, 384) 491520 mixed8[0][0]

__________________________________________________________________________________________________

conv2d_81 (Conv2D) (None, 1, 1, 384) 1548288 activation_80[0][0]

__________________________________________________________________________________________________

batch_normalization_77 (BatchNo (None, 1, 1, 384) 1152 conv2d_77[0][0]

__________________________________________________________________________________________________

batch_normalization_81 (BatchNo (None, 1, 1, 384) 1152 conv2d_81[0][0]

__________________________________________________________________________________________________

activation_77 (Activation) (None, 1, 1, 384) 0 batch_normalization_77[0][0]

__________________________________________________________________________________________________

activation_81 (Activation) (None, 1, 1, 384) 0 batch_normalization_81[0][0]

__________________________________________________________________________________________________

conv2d_78 (Conv2D) (None, 1, 1, 384) 442368 activation_77[0][0]

__________________________________________________________________________________________________

conv2d_79 (Conv2D) (None, 1, 1, 384) 442368 activation_77[0][0]

__________________________________________________________________________________________________

conv2d_82 (Conv2D) (None, 1, 1, 384) 442368 activation_81[0][0]

__________________________________________________________________________________________________

conv2d_83 (Conv2D) (None, 1, 1, 384) 442368 activation_81[0][0]

__________________________________________________________________________________________________

average_pooling2d_7 (AveragePoo (None, 1, 1, 1280) 0 mixed8[0][0]

__________________________________________________________________________________________________

conv2d_76 (Conv2D) (None, 1, 1, 320) 409600 mixed8[0][0]

__________________________________________________________________________________________________

batch_normalization_78 (BatchNo (None, 1, 1, 384) 1152 conv2d_78[0][0]

__________________________________________________________________________________________________

batch_normalization_79 (BatchNo (None, 1, 1, 384) 1152 conv2d_79[0][0]

__________________________________________________________________________________________________

batch_normalization_82 (BatchNo (None, 1, 1, 384) 1152 conv2d_82[0][0]

__________________________________________________________________________________________________

batch_normalization_83 (BatchNo (None, 1, 1, 384) 1152 conv2d_83[0][0]

__________________________________________________________________________________________________

conv2d_84 (Conv2D) (None, 1, 1, 192) 245760 average_pooling2d_7[0][0]

__________________________________________________________________________________________________

batch_normalization_76 (BatchNo (None, 1, 1, 320) 960 conv2d_76[0][0]

__________________________________________________________________________________________________

activation_78 (Activation) (None, 1, 1, 384) 0 batch_normalization_78[0][0]

__________________________________________________________________________________________________

activation_79 (Activation) (None, 1, 1, 384) 0 batch_normalization_79[0][0]

__________________________________________________________________________________________________

activation_82 (Activation) (None, 1, 1, 384) 0 batch_normalization_82[0][0]

__________________________________________________________________________________________________

activation_83 (Activation) (None, 1, 1, 384) 0 batch_normalization_83[0][0]

__________________________________________________________________________________________________

batch_normalization_84 (BatchNo (None, 1, 1, 192) 576 conv2d_84[0][0]

__________________________________________________________________________________________________

activation_76 (Activation) (None, 1, 1, 320) 0 batch_normalization_76[0][0]

__________________________________________________________________________________________________

mixed9_0 (Concatenate) (None, 1, 1, 768) 0 activation_78[0][0]

activation_79[0][0]

__________________________________________________________________________________________________

concatenate (Concatenate) (None, 1, 1, 768) 0 activation_82[0][0]

activation_83[0][0]

__________________________________________________________________________________________________

activation_84 (Activation) (None, 1, 1, 192) 0 batch_normalization_84[0][0]

__________________________________________________________________________________________________

mixed9 (Concatenate) (None, 1, 1, 2048) 0 activation_76[0][0]

mixed9_0[0][0]

concatenate[0][0]

activation_84[0][0]

__________________________________________________________________________________________________

conv2d_89 (Conv2D) (None, 1, 1, 448) 917504 mixed9[0][0]

__________________________________________________________________________________________________

batch_normalization_89 (BatchNo (None, 1, 1, 448) 1344 conv2d_89[0][0]

__________________________________________________________________________________________________

activation_89 (Activation) (None, 1, 1, 448) 0 batch_normalization_89[0][0]

__________________________________________________________________________________________________

conv2d_86 (Conv2D) (None, 1, 1, 384) 786432 mixed9[0][0]

__________________________________________________________________________________________________

conv2d_90 (Conv2D) (None, 1, 1, 384) 1548288 activation_89[0][0]

__________________________________________________________________________________________________

batch_normalization_86 (BatchNo (None, 1, 1, 384) 1152 conv2d_86[0][0]

__________________________________________________________________________________________________

batch_normalization_90 (BatchNo (None, 1, 1, 384) 1152 conv2d_90[0][0]

__________________________________________________________________________________________________

activation_86 (Activation) (None, 1, 1, 384) 0 batch_normalization_86[0][0]

__________________________________________________________________________________________________

activation_90 (Activation) (None, 1, 1, 384) 0 batch_normalization_90[0][0]

__________________________________________________________________________________________________

conv2d_87 (Conv2D) (None, 1, 1, 384) 442368 activation_86[0][0]

__________________________________________________________________________________________________

conv2d_88 (Conv2D) (None, 1, 1, 384) 442368 activation_86[0][0]

__________________________________________________________________________________________________

conv2d_91 (Conv2D) (None, 1, 1, 384) 442368 activation_90[0][0]

__________________________________________________________________________________________________

conv2d_92 (Conv2D) (None, 1, 1, 384) 442368 activation_90[0][0]

__________________________________________________________________________________________________

average_pooling2d_8 (AveragePoo (None, 1, 1, 2048) 0 mixed9[0][0]

__________________________________________________________________________________________________

conv2d_85 (Conv2D) (None, 1, 1, 320) 655360 mixed9[0][0]

__________________________________________________________________________________________________

batch_normalization_87 (BatchNo (None, 1, 1, 384) 1152 conv2d_87[0][0]

__________________________________________________________________________________________________

batch_normalization_88 (BatchNo (None, 1, 1, 384) 1152 conv2d_88[0][0]

__________________________________________________________________________________________________

batch_normalization_91 (BatchNo (None, 1, 1, 384) 1152 conv2d_91[0][0]

__________________________________________________________________________________________________

batch_normalization_92 (BatchNo (None, 1, 1, 384) 1152 conv2d_92[0][0]

__________________________________________________________________________________________________

conv2d_93 (Conv2D) (None, 1, 1, 192) 393216 average_pooling2d_8[0][0]

__________________________________________________________________________________________________

batch_normalization_85 (BatchNo (None, 1, 1, 320) 960 conv2d_85[0][0]

__________________________________________________________________________________________________

activation_87 (Activation) (None, 1, 1, 384) 0 batch_normalization_87[0][0]

__________________________________________________________________________________________________

activation_88 (Activation) (None, 1, 1, 384) 0 batch_normalization_88[0][0]

__________________________________________________________________________________________________

activation_91 (Activation) (None, 1, 1, 384) 0 batch_normalization_91[0][0]

__________________________________________________________________________________________________

activation_92 (Activation) (None, 1, 1, 384) 0 batch_normalization_92[0][0]

__________________________________________________________________________________________________

batch_normalization_93 (BatchNo (None, 1, 1, 192) 576 conv2d_93[0][0]

__________________________________________________________________________________________________

activation_85 (Activation) (None, 1, 1, 320) 0 batch_normalization_85[0][0]

__________________________________________________________________________________________________

mixed9_1 (Concatenate) (None, 1, 1, 768) 0 activation_87[0][0]

activation_88[0][0]

__________________________________________________________________________________________________

concatenate_1 (Concatenate) (None, 1, 1, 768) 0 activation_91[0][0]

activation_92[0][0]

__________________________________________________________________________________________________

activation_93 (Activation) (None, 1, 1, 192) 0 batch_normalization_93[0][0]

__________________________________________________________________________________________________

mixed10 (Concatenate) (None, 1, 1, 2048) 0 activation_85[0][0]

mixed9_1[0][0]

concatenate_1[0][0]

activation_93[0][0]

__________________________________________________________________________________________________

global_average_pooling2d (Globa (None, 2048) 0 mixed10[0][0]

__________________________________________________________________________________________________

dense (Dense) (None, 1024) 2098176 global_average_pooling2d[0][0]

__________________________________________________________________________________________________

dense_1 (Dense) (None, 10) 10250 dense[0][0]

==================================================================================================

Total params: 23,911,210

Trainable params: 2,108,426

Non-trainable params: 21,802,784

__________________________________________________________________________________________________
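The parameter counts in the summary above can be checked by hand. The sketch below is a minimal sanity check, not part of the original code. The helper names (`conv2d_params`, `batchnorm_params`, `dense_params`) are hypothetical. The kernel sizes used for `conv2d_44` (1x1) and `conv2d_45` (7 taps along one axis) are assumptions taken from the Keras InceptionV3 implementation, in which convolutions have no bias and BatchNormalization is created with `scale=False`; the summary itself does not show kernel sizes.

```python
def conv2d_params(kh, kw, c_in, c_out, use_bias=False):
    """Weights of a Conv2D layer (assumed bias-free, as in Keras InceptionV3)."""
    return kh * kw * c_in * c_out + (c_out if use_bias else 0)


def batchnorm_params(channels, scale=False):
    """beta, moving_mean, moving_variance per channel (+ gamma if scale=True)."""
    return channels * (4 if scale else 3)


def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out


# Rows taken from the summary above.
conv2d_44 = conv2d_params(1, 1, 768, 160)   # mixed4 (768 ch) -> 160 ch, assumed 1x1
conv2d_45 = conv2d_params(1, 7, 160, 160)   # 160 ch -> 160 ch, assumed 7-tap kernel
bn_44 = batchnorm_params(160)               # batch_normalization_44

# The newly added head: GlobalAveragePooling2D (2048) -> Dense(1024) -> Dense(10).
dense = dense_params(2048, 1024)
dense_1 = dense_params(1024, 10)
head = dense + dense_1

# "Non-trainable params" as reported in the summary.
non_trainable = 21_802_784

print(conv2d_44, conv2d_45, bn_44)
print(dense, dense_1, head, head + non_trainable)
```

The two Dense layers add up to exactly the reported "Trainable params" (2,108,426), which is consistent with every layer of `base_model` being frozen when this summary was printed, so that only the new head is trained at this stage.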